Merge pull request #35 from naturalett/add-local-deploy-flag

add local deploy flag

diff --git a/README.md b/README.md

index 9328474..a92daff 100644

--- a/README.md

+++ b/README.md

@@ -154,7 +154,15 @@

You'll see a number of outputs indicating that various docker images were built.

-3. Deploy the pipeline:

+3. Create a Kubernetes local volume \

+If your YAML uses [volumes](https://github.com/apache/incubator-liminal/blob/6253f8b2c9dc244af032979ec6d462dc3e07e170/docs/getting_started.md#mounted-volumes),

+first run the following command:

+```bash

+cd </path/to/your/liminal/code>

+liminal create

+```

+

+4. Deploy the pipeline:

```bash

cd </path/to/your/liminal/code>

liminal deploy

@@ -166,17 +174,17 @@

This will rebuild the Airflow docker containers from scratch with a fresh version of liminal, ensuring consistency.

-4. Start the server

+5. Start the server

```bash

liminal start

```

-5. Stop the server

+6. Stop the server

```bash

liminal stop

```

-6. Display the server logs

+7. Display the server logs

```bash

liminal logs --follow/--tail

@@ -187,12 +195,12 @@

liminal logs --follow

```

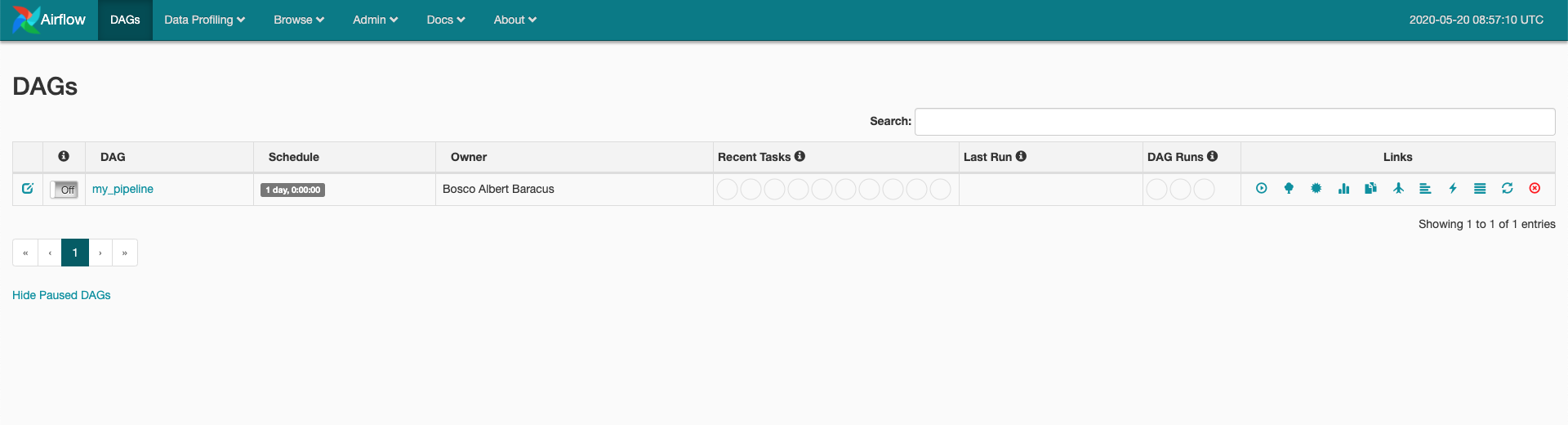

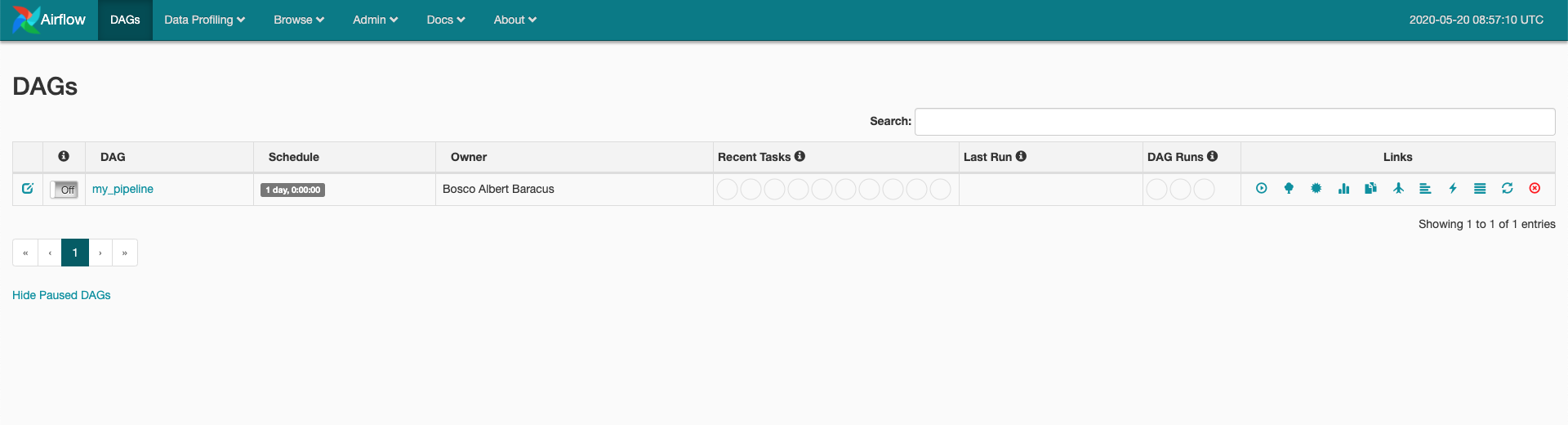

-6. Navigate to [http://localhost:8080/admin](http://localhost:8080/admin)

+8. Navigate to [http://localhost:8080/admin](http://localhost:8080/admin)

-7. You should see your

+9. You should see your pipeline listed.

The pipeline is scheduled to run according to the `schedule: 0 * 1 * *` field in the .yml file you provided.

-8. To manually activate your pipeline:

+10. To manually activate your pipeline:

Click your pipeline and then click "trigger DAG"

Click "Graph view"

You should see the steps in your pipeline getting executed in "real time" by clicking "Refresh" periodically.
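Step 3 only applies when the YAML declares volumes. A minimal sketch of that check, assuming a parsed liminal YAML lists volumes under a top-level `volumes` key with entries shaped like `{"volume": <name>, "local": {"path": <path>}}`, and assuming relative paths are resolved against the directory holding the YAML file (the `create` command passes `os.path.dirname(config_file)` to `create_local_volumes`, but how that argument is used is not shown in this diff). The function name `declared_local_volumes` is hypothetical:

```python
import os
from typing import Any, Dict, List, Tuple


def declared_local_volumes(config: Dict[str, Any], config_dir: str) -> List[Tuple[str, str]]:
    """Return (name, absolute_path) for each local volume a parsed liminal YAML declares.

    ASSUMPTION: the `volumes` / `volume` / `local.path` layout follows the
    "mounted volumes" section of the getting-started guide; verify against your version.
    """
    result = []
    # `volumes:` with no entries parses to None, so fall back to an empty list.
    for entry in config.get("volumes") or []:
        local = entry.get("local")
        if not local or "path" not in local:
            continue  # not a local volume: nothing for `liminal create` to set up
        # os.path.join keeps an absolute path as-is and anchors a relative one at config_dir.
        path = os.path.join(config_dir, local["path"])
        result.append((entry["volume"], os.path.abspath(path)))
    return result
```

An empty result suggests step 3 can be skipped for that YAML. The `create` command itself reads each file with `yaml.safe_load` before handing the parsed dict to `volume_util`.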

diff --git a/liminal/kubernetes/volume_util.py b/liminal/kubernetes/volume_util.py

index eff1fd0..0d00eb4 100644

--- a/liminal/kubernetes/volume_util.py

+++ b/liminal/kubernetes/volume_util.py

@@ -18,11 +18,16 @@

import logging

import os

+import sys

from kubernetes import client, config

from kubernetes.client import V1PersistentVolume, V1PersistentVolumeClaim

-config.load_kube_config()

+try:

+    config.load_kube_config()

+except Exception:

+    msg = "Kubernetes is not running\n"

+    sys.stdout.write(f"INFO: {msg}")

_LOG = logging.getLogger('volume_util')

_LOCAL_VOLUMES = set([])
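The hunk above guards `config.load_kube_config()` so that importing `volume_util` no longer fails on a machine without a configured cluster. A minimal sketch of the same guard as a function, with the loader passed in (an assumption so the sketch runs without the `kubernetes` package) and catching `Exception` rather than using a bare `except:`, which would also swallow `KeyboardInterrupt` and `SystemExit`. The name `try_load_kube_config` is hypothetical:

```python
import logging
from typing import Callable

_LOG = logging.getLogger("volume_util")


def try_load_kube_config(loader: Callable[[], None]) -> bool:
    """Run `loader` and report whether a kube config was loaded.

    `loader` stands in for kubernetes.config.load_kube_config (ASSUMPTION: injected
    here only so the sketch is runnable without the kubernetes client installed).
    """
    try:
        loader()
        return True
    except Exception as exc:
        # Unlike a bare `except:`, this lets KeyboardInterrupt and SystemExit propagate.
        _LOG.info("Kubernetes is not running: %s", exc)
        return False
```

In `volume_util.py` this would be called as `try_load_kube_config(config.load_kube_config)`. The Kubernetes Python client also exports `config.ConfigException`, which is a narrower target if only a missing or invalid kubeconfig should be tolerated.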

diff --git a/scripts/liminal b/scripts/liminal

index 71c322d..7c694da 100755

--- a/scripts/liminal

+++ b/scripts/liminal

@@ -131,10 +131,6 @@

yml_name = os.path.basename(config_file)

target_yml_name = os.path.join(environment.get_dags_dir(), yml_name)

shutil.copyfile(config_file, target_yml_name)

- with open(config_file) as stream:

- config = yaml.safe_load(stream)

- volume_util.create_local_volumes(config, os.path.dirname(config_file))

-

def liminal_is_running():

stdout, stderr = docker_compose_command('ps', [])
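After this hunk, `deploy` no longer creates volumes. The `create` command added in the next hunk does, calling `files_util.find_config_files(local_volume_path)` and then `volume_util.create_local_volumes` for each file found. The body of `find_config_files` is not part of this diff, so the following is only a hypothetical stand-in for the discovery step, assuming config files are named `liminal.yml` or `liminal.yaml` (the `--local-volume-path` help text mentions `liminal.yaml` files):

```python
import os
from typing import List

# HYPOTHETICAL file names; the real files_util.find_config_files may match differently.
CONFIG_NAMES = ("liminal.yml", "liminal.yaml")


def find_liminal_configs(root: str) -> List[str]:
    """Walk `root` recursively and return the paths of liminal YAML files, sorted."""
    found = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if name in CONFIG_NAMES:
                found.append(os.path.join(dirpath, name))
    # os.walk yields entries in filesystem order; sorting makes the result deterministic.
    return sorted(found)
```

Sorting keeps the order in which volumes are created stable across runs and machines.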

@@ -163,6 +159,15 @@

stdout, stderr = docker_compose_command('logs', [f'--tail={tail}'])

logging.info(stdout)

+@cli.command("create", short_help="create a kubernetes local volume")

+@click.option('--local-volume-path', default=os.getcwd(), help="folder containing liminal.yaml files")

+def create(local_volume_path):

+    click.echo("creating a kubernetes local volume")

+    config_files = files_util.find_config_files(local_volume_path)

+    for config_file in config_files:

+        with open(config_file) as stream:

+            config = yaml.safe_load(stream)

+            volume_util.create_local_volumes(config, os.path.dirname(config_file))

@cli.command("start",

short_help="starts a local airflow in docker compose. should be run after deploy. " +