| { |

| "cells": [ |

| { |

| "cell_type": "markdown", |

| "id": "d926a756", |

| "metadata": {}, |

| "source": [ |

| "<!--- Licensed to the Apache Software Foundation (ASF) under one -->\n", |

| "<!--- or more contributor license agreements. See the NOTICE file -->\n", |

| "<!--- distributed with this work for additional information -->\n", |

| "<!--- regarding copyright ownership. The ASF licenses this file -->\n", |

| "<!--- to you under the Apache License, Version 2.0 (the -->\n", |

| "<!--- \"License\"); you may not use this file except in compliance -->\n", |

| "<!--- with the License. You may obtain a copy of the License at -->\n", |

| "\n", |

| "<!--- http://www.apache.org/licenses/LICENSE-2.0 -->\n", |

| "\n", |

| "<!--- Unless required by applicable law or agreed to in writing, -->\n", |

| "<!--- software distributed under the License is distributed on an -->\n", |

| "<!--- \"AS IS\" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY -->\n", |

| "<!--- KIND, either express or implied. See the License for the -->\n", |

| "<!--- specific language governing permissions and limitations -->\n", |

| "<!--- under the License. -->\n", |

| "\n", |

| "# Quantize with MKL-DNN backend\n", |

| "\n", |

| "This document is to introduce how to quantize the customer models from FP32 to INT8 with Apache/MXNet toolkit and APIs under Intel CPU.\n", |

| "\n", |

| "If you are not familiar with Apache/MXNet quantization flow, please reference [quantization blog](https://medium.com/apache-mxnet/model-quantization-for-production-level-neural-network-inference-f54462ebba05) first, and the performance data is shown in [Apache/MXNet C++ interface](https://github.com/apache/mxnet/tree/master/cpp-package/example/inference) and [GluonCV](https://gluon-cv.mxnet.io/build/examples_deployment/int8_inference.html). \n", |

| "\n", |

| "## Installation and Prerequisites\n", |

| "\n", |

| "Installing MXNet with MKLDNN backend is an easy and essential process. You can follow [How to build and install MXNet with MKL-DNN backend](/api/python/docs/tutorials/performance/backend/mkldnn/mkldnn_readme.html) to build and install MXNet from source. Also, you can install the release or nightly version via PyPi and pip directly by running:" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "75805dc2", |

| "metadata": {}, |

| "source": [ |

| "```\n", |

| "# release version\n", |

| "pip install mxnet\n", |

| "\n", |

| "# latest nightly development version\n", |

| "pip install --pre \"mxnet<2\" -f https://dist.mxnet.io/python\n", |

| "```\n" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "3c04411f", |

| "metadata": {}, |

| "source": [ |

| "## Image Classification Demo\n", |

| "\n", |

| "A quantization script [imagenet_gen_qsym_mkldnn.py](https://github.com/apache/mxnet/blob/master/example/quantization/imagenet_gen_qsym_mkldnn.py) has been designed to launch quantization for image-classification models. This script is integrated with [Gluon-CV modelzoo](https://gluon-cv.mxnet.io/model_zoo/classification.html), so that all pre-trained models can be downloaded from Gluon-CV and then converted for quantization. For details, you can refer [Model Quantization with Calibration Examples](https://github.com/apache/mxnet/blob/master/example/quantization/README.md).\n", |

| "\n", |

| "## Integrate Quantization Flow to Your Project\n", |

| "\n", |

| "Quantization flow works for both symbolic and Gluon models. If you're using Gluon, you can first refer [Saving and Loading Gluon Models](/api/python/docs/tutorials/packages/gluon/blocks/save_load_params.html) to hybridize your computation graph and export it as a symbol before running quantization.\n", |

| "\n", |

| "In general, the quantization flow includes 4 steps. The user can get the acceptable accuracy from step 1 to 3 with minimum effort. Most of thing in this stage is out-of-box and the data scientists and researchers only need to focus on how to represent data and layers in their model. After a quantized model is generated, you may want to deploy it online and the performance will be the next key point. Thus, step 4, calibration, can improve the performance a lot by reducing lots of runtime calculation.\n", |

| "\n", |

| "\n", |

| "\n", |

| "Now, we are going to take Gluon ResNet18 as an example to show how each step work.\n", |

| "\n", |

| "### Initialize Model" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "883b38c3", |

| "metadata": {}, |

| "source": [ |

| "```python\n", |

| "import logging\n", |

| "import mxnet as mx\n", |

| "from mxnet.gluon.model_zoo import vision\n", |

| "from mxnet.contrib.quantization import *\n", |

| "\n", |

| "logging.basicConfig()\n", |

| "logger = logging.getLogger('logger')\n", |

| "logger.setLevel(logging.INFO)\n", |

| "\n", |

| "batch_shape = (1, 3, 224, 224)\n", |

| "resnet18 = vision.resnet18_v1(pretrained=True)\n", |

| "resnet18.hybridize()\n", |

| "resnet18.forward(mx.nd.zeros(batch_shape))\n", |

| "resnet18.export('resnet18_v1')\n", |

| "sym, arg_params, aux_params = mx.model.load_checkpoint('resnet18_v1', 0)\n", |

| "# (optional) visualize float32 model\n", |

| "mx.viz.plot_network(sym)\n", |

| "```\n" |

| ] |

| }, |

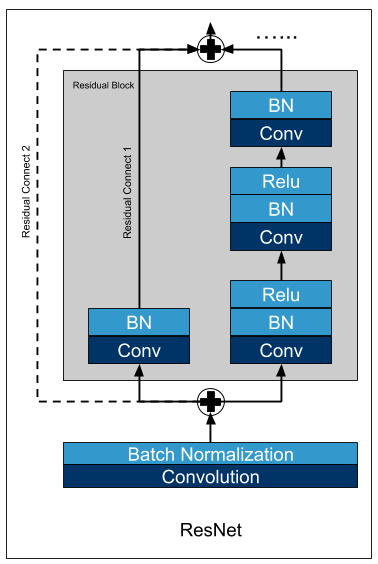

| { |

| "cell_type": "markdown", |

| "id": "c6d9b2bc", |

| "metadata": {}, |

| "source": [ |

| "First, we download resnet18-v1 model from gluon modelzoo and export it as a symbol. You can visualize float32 model. Below is a raw residual block.\n", |

| "\n", |

| "\n", |

| "\n", |

| "#### Model Fusion" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "9075309f", |

| "metadata": {}, |

| "source": [ |

| "```python\n", |

| "sym = sym.get_backend_symbol('MKLDNN_QUANTIZE')\n", |

| "# (optional) visualize fused float32 model\n", |

| "mx.viz.plot_network(sym)\n", |

| "```\n" |

| ] |

| }, |

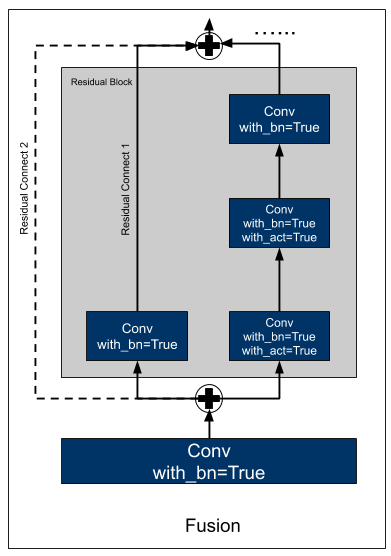

| { |

| "cell_type": "markdown", |

| "id": "7a0037e5", |

| "metadata": {}, |

| "source": [ |

| "It's important to add this line to enable graph fusion before quantization to get better performance. Below is a fused residual block. Batchnorm, Activation and elemwise_add are fused into Convolution.\n", |

| "\n", |

| "\n", |

| "\n", |

| "### Quantize Model\n", |

| "\n", |

| "A python interface `quantize_graph` is provided for the user. Thus, it is very flexible for the data scientist to construct the expected models based on different requirements in a real deployment." |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "887d967c", |

| "metadata": {}, |

| "source": [ |

| "```python\n", |

| "# quantize configs\n", |

| "# set exclude layers\n", |

| "excluded_names = []\n", |

| "# set calib mode.\n", |

| "calib_mode = 'none'\n", |

| "# set calib_layer\n", |

| "calib_layer = None\n", |

| "# set quantized_dtype\n", |

| "quantized_dtype = 'auto'\n", |

| "logger.info('Quantizing FP32 model Resnet18-V1')\n", |

| "qsym, qarg_params, aux_params, collector = quantize_graph(sym=sym, arg_params=arg_params, aux_params=aux_params,\n", |

| " excluded_sym_names=excluded_names,\n", |

| " calib_mode=calib_mode, calib_layer=calib_layer,\n", |

| " quantized_dtype=quantized_dtype, logger=logger)\n", |

| "# (optional) visualize quantized model\n", |

| "mx.viz.plot_network(qsym)\n", |

| "# save quantized model\n", |

| "mx.model.save_checkpoint('quantized-resnet18_v1', 0, qsym, qarg_params, aux_params)\n", |

| "```\n" |

| ] |

| }, |

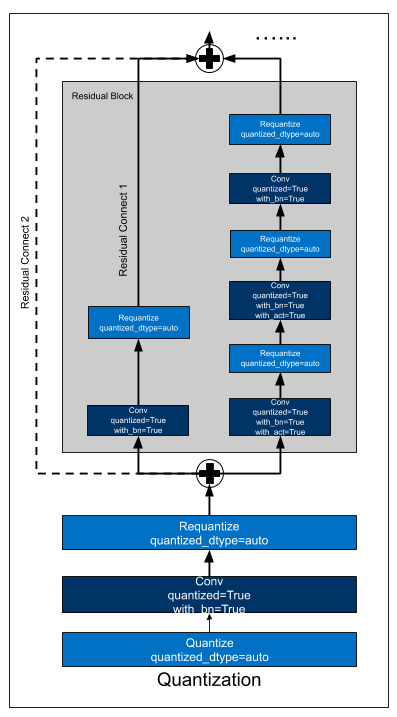

| { |

| "cell_type": "markdown", |

| "id": "23afc562", |

| "metadata": {}, |

| "source": [ |

| "By applying `quantize_graph` to the symbolic model, a new quantized model can be generated, named `qsym` along with its parameters. We can see `_contrib_requantize` operators are inserted after `Convolution` to convert the INT32 output to FP32. \n", |

| "\n", |

| "\n", |

| "\n", |

| "Below table gives some descriptions.\n", |

| "\n", |

| "| param | type | description|\n", |

| "|--------------------|-----------------|------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|\n", |

| "| excluded_sym_names | list of strings | A list of strings representing the names of the symbols that users want to excluding from being quantized.|\n", |

| "| calib_mode | str | If calib_mode='none', no calibration will be used and the thresholds for requantization after the corresponding layers will be calculated at runtime by calling min and max operators. The quantized models generated in this mode are normally 10-20% slower than those with calibrations during inference.<br>If calib_mode='naive', the min and max values of the layer outputs from a calibration dataset will be directly taken as the thresholds for quantization.<br>If calib_mode='entropy', the thresholds for quantization will be derived such that the KL divergence between the distributions of FP32 layer outputs and quantized layer outputs is minimized based upon the calibration dataset. |\n", |

| "| calib_layer | function | Given a layer's output name in string, return True or False for deciding whether to calibrate this layer.<br>If yes, the statistics of the layer's output will be collected; otherwise, no information of the layer's output will be collected.<br>If not provided, all the layers' outputs that need requantization will be collected.|\n", |

| "| quantized_dtype | str | The quantized destination type for input data. Currently support 'int8', 'uint8' and 'auto'.<br>'auto' means automatically select output type according to calibration result.|\n", |

| "\n", |

| "### Evaluate & Tune\n", |

| "\n", |

| "Now, you get a pair of quantized symbol and params file for inference. For Gluon inference, only difference is to load model and params by a SymbolBlock as below example:" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "a9328738", |

| "metadata": {}, |

| "source": [ |

| "```python\n", |

| "quantized_net = mx.gluon.SymbolBlock.imports('quantized-resnet18_v1-symbol.json', 'data', 'quantized-resnet18_v1-0000.params')\n", |

| "quantized_net.hybridize(static_shape=True, static_alloc=True)\n", |

| "batch_size = 1\n", |

| "data = mx.nd.ones((batch_size,3,224,224))\n", |

| "quantized_net(data)\n", |

| "```\n" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "21ac0632", |

| "metadata": {}, |

| "source": [ |

| "Now, you can get the accuracy from a quantized network. Furthermore, you can try to select different layers or OPs to be quantized by `excluded_sym_names` parameter and figure out an acceptable accuracy.\n", |

| "\n", |

| "### Calibrate Model (optional for performance)\n", |

| "\n", |

| "The quantized model generated in previous steps can be very slow during inference since it will calculate min and max at runtime. We recommend using offline calibration for better performance by setting `calib_mode` to `naive` or `entropy`. And then calling `set_monitor_callback` api to collect layer information with a subset of the validation datasets before int8 inference." |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "71257cb9", |

| "metadata": {}, |

| "source": [ |

| "```python\n", |

| "# quantization configs\n", |

| "# set exclude layers\n", |

| "excluded_names = []\n", |

| "# set calib mode.\n", |

| "calib_mode = 'naive'\n", |

| "# set calib_layer\n", |

| "calib_layer = None\n", |

| "# set quantized_dtype\n", |

| "quantized_dtype = 'auto'\n", |

| "logger.info('Quantizing FP32 model resnet18-V1')\n", |

| "cqsym, cqarg_params, aux_params, collector = quantize_graph(sym=sym, arg_params=arg_params, aux_params=aux_params,\n", |

| " excluded_sym_names=excluded_names,\n", |

| " calib_mode=calib_mode, calib_layer=calib_layer,\n", |

| " quantized_dtype=quantized_dtype, logger=logger)\n", |

| "\n", |

| "# download imagenet validation dataset\n", |

| "mx.test_utils.download('http://data.mxnet.io/data/val_256_q90.rec', 'dataset.rec')\n", |

| "# set rgb info for data\n", |

| "mean_std = {'mean_r': 123.68, 'mean_g': 116.779, 'mean_b': 103.939, 'std_r': 58.393, 'std_g': 57.12, 'std_b': 57.375}\n", |

| "# set batch size\n", |

| "batch_size = 16\n", |

| "# create DataIter\n", |

| "data = mx.io.ImageRecordIter(path_imgrec='dataset.rec', batch_size=batch_size, data_shape=batch_shape[1:], rand_crop=False, rand_mirror=False, **mean_std)\n", |

| "# create module\n", |

| "mod = mx.mod.Module(symbol=sym, label_names=None, context=mx.cpu())\n", |

| "mod.bind(for_training=False, data_shapes=data.provide_data, label_shapes=None)\n", |

| "mod.set_params(arg_params, aux_params)\n", |

| "\n", |

| "# calibration configs\n", |

| "# set num_calib_batches\n", |

| "num_calib_batches = 5\n", |

| "max_num_examples = num_calib_batches * batch_size\n", |

| "# monitor FP32 Inference\n", |

| "mod._exec_group.execs[0].set_monitor_callback(collector.collect, monitor_all=True)\n", |

| "num_batches = 0\n", |

| "num_examples = 0\n", |

| "for batch in data:\n", |

| " mod.forward(data_batch=batch, is_train=False)\n", |

| " num_batches += 1\n", |

| " num_examples += batch_size\n", |

| " if num_examples >= max_num_examples:\n", |

| " break\n", |

| "if logger is not None:\n", |

| " logger.info(\"Collected statistics from %d batches with batch_size=%d\"\n", |

| " % (num_batches, batch_size))\n", |

| "```\n" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "6d0f4f48", |

| "metadata": {}, |

| "source": [ |

| "After that, layer information will be filled into the `collector` returned by `quantize_graph` api. Then, you need to write the layer information into int8 model by calling `calib_graph` api." |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "671ecb50", |

| "metadata": {}, |

| "source": [ |

| "```python\n", |

| "# write scaling factor into quantized symbol\n", |

| "cqsym, cqarg_params, aux_params = calib_graph(qsym=cqsym, arg_params=arg_params, aux_params=aux_params,\n", |

| " collector=collector, calib_mode=calib_mode,\n", |

| " quantized_dtype=quantized_dtype, logger=logger)\n", |

| "# (optional) visualize quantized model\n", |

| "mx.viz.plot_network(cqsym)\n", |

| "```\n" |

| ] |

| }, |

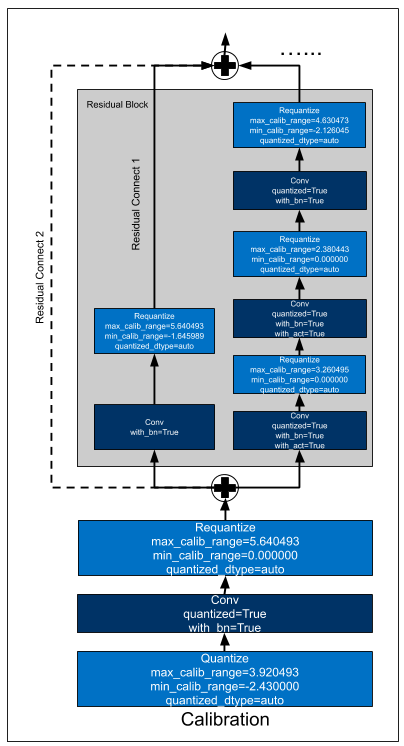

| { |

| "cell_type": "markdown", |

| "id": "d8949593", |

| "metadata": {}, |

| "source": [ |

| "Below is a quantized residual block with naive calibration. We can see `min_calib_range` and `max_calib_range` are written into `_contrib_requantize` operators.\n", |

| "\n", |

| "\n", |

| "\n", |

| "When you get a quantized model with calibration, keeping sure to call fusion api again since this can fuse some `requantize` or `dequantize` operators for further performance improvement." |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "a3e48cc2", |

| "metadata": {}, |

| "source": [ |

| "```python\n", |

| "# perform post-quantization fusion\n", |

| "cqsym = cqsym.get_backend_symbol('MKLDNN_QUANTIZE')\n", |

| "# (optional) visualize post-quantized model\n", |

| "mx.viz.plot_network(cqsym)\n", |

| "# save quantized model\n", |

| "mx.model.save_checkpoint('quantized-resnet18_v1', 0, cqsym, cqarg_params, aux_params)\n", |

| "```\n" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "944a0b52", |

| "metadata": {}, |

| "source": [ |

| "Below is a post-quantized residual block. We can see `_contrib_requantize` operators are fused into `Convolution` operators.\n", |

| "\n", |

| "\n", |

| "\n", |

| "BTW, You can also modify the `min_calib_range` and `max_calib_range` in the JSON file directly." |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "b5ce6d87", |

| "metadata": {}, |

| "source": [ |

| "```\n", |

| " {\n", |

| " \"op\": \"_sg_mkldnn_conv\", \n", |

| " \"name\": \"quantized_sg_mkldnn_conv_bn_act_6\", \n", |

| " \"attrs\": {\n", |

| " \"max_calib_range\": \"3.562147\", \n", |

| " \"min_calib_range\": \"0.000000\", \n", |

| " \"quantized\": \"true\", \n", |

| " \"with_act\": \"true\", \n", |

| " \"with_bn\": \"true\"\n", |

| " }, \n", |

| "......\n", |

| "```\n" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "af683e5d", |

| "metadata": {}, |

| "source": [ |

| "### Tips for Model Calibration\n", |

| "\n", |

| "#### Accuracy Tuning\n", |

| "\n", |

| "- Try to use `entropy` calib mode;\n", |

| "\n", |

| "- Try to exclude some layers which may cause obvious accuracy drop;\n", |

| "\n", |

| "- Change calibration dataset by setting different `num_calib_batches` or shuffle your validation dataset;\n", |

| "\n", |

| "- Use Intel® Neural Compressor ([see below](#Improving-accuracy-with-Intel-Neural-Compressor))\n", |

| "\n", |

| "#### Performance Tuning\n", |

| "\n", |

| "- Keep sure to perform graph fusion before quantization;\n", |

| "\n", |

| "- If lots of `requantize` layers exist, keep sure to perform post-quantization fusion after calibration;\n", |

| "\n", |

| "- Compare the MXNet profile or `MKLDNN_VERBOSE` of float32 and int8 inference;\n", |

| "\n", |

| "## Deploy with Python/C++\n", |

| "\n", |

| "MXNet also supports deploy quantized models with C++. Refer [MXNet C++ Package](https://github.com/apache/mxnet/blob/master/cpp-package/README.md) for more details.\n", |

| "\n", |

| "# Improving accuracy with Intel® Neural Compressor\n", |

| "\n", |

| "The accuracy of a model can decrease as a result of quantization. When the accuracy drop is significant, we can try to manually find a better quantization configuration (exclude some layers, try different calibration methods, etc.), but for bigger models this might prove to be a difficult and time consuming task. [Intel® Neural Compressor](https://github.com/intel/neural-compressor) (INC) tries to automate this process using several tuning heuristics, which aim to find the quantization configuration that satisfies the specified accuracy requirement. \n", |

| "\n", |

| "**NOTE:**\n", |

| "\n", |

| "Most tuning strategies will try different configurations on an evaluation dataset in order to find out how each layer affects the accuracy of the model. This means that for larger models, it may take a long time to find a solution (as the tuning space is usually larger and the evaluation itself takes longer).\n", |

| "\n", |

| "## Installation and Prerequisites\n", |

| "\n", |

| "- Install MXNet with MKLDNN enabled as described in the [previous section](#Installation-and-Prerequisites).\n", |

| "\n", |

| "- Install Intel® Neural Compressor:\n", |

| " \n", |

| " Use one of the commands below to install INC (supported python versions are: 3.6, 3.7, 3.8, 3.9):\n", |

| "\n", |

| " ```bash\n", |

| " # install stable version from pip\n", |

| " pip install neural-compressor\n", |

| "\n", |

| " # install nightly version from pip\n", |

| " pip install -i https://test.pypi.org/simple/ neural-compressor\n", |

| "\n", |

| " # install stable version from conda\n", |

| " conda install neural-compressor -c conda-forge -c intel\n", |

| " ```\n", |

| "\n", |

| "## Configuration file\n", |

| "\n", |

| "Quantization tuning process can be customized in the yaml configuration file. Below is a simple example:" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "ce0292a1", |

| "metadata": {}, |

| "source": [ |

| "```yaml\n", |

| "# cnn.yaml\n", |

| "\n", |

| "version: 1.0\n", |

| "\n", |

| "model:\n", |

| " name: cnn\n", |

| " framework: mxnet\n", |

| "\n", |

| "quantization:\n", |

| " calibration:\n", |

| " sampling_size: 160 # number of samples for calibration\n", |

| "\n", |

| "tuning:\n", |

| " strategy:\n", |

| " name: basic\n", |

| " accuracy_criterion:\n", |

| " relative: 0.01\n", |

| " exit_policy:\n", |

| " timeout: 0\n", |

| " random_seed: 9527\n", |

| "```\n" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "6cedf7fa", |

| "metadata": {}, |

| "source": [ |

| "We are using the `basic` strategy, but you could also try out different ones. [Here](https://github.com/intel/neural-compressor/blob/master/docs/tuning_strategies.md) you can find a list of strategies available in INC and details of how they work. You can also add your own strategy if the existing ones do not suit your needs.\n", |

| "\n", |

| "Since the value of `timeout` is 0, INC will run until it finds a configuration that satisfies the accuracy criterion and then exit. Depending on the strategy this may not be ideal, as sometimes it would be better to further explore the tuning space to find a superior configuration both in terms of accuracy and speed. To achieve this, we can set a specific `timeout` value, which will tell INC how long (in seconds) it should run.\n", |

| "\n", |

| "For more information about the configuration file, see the [template](https://github.com/intel/neural-compressor/blob/master/neural_compressor/template/ptq.yaml) from the official INC repo. Keep in mind that only the `post training quantization` is currently supported for MXNet.\n", |

| "\n", |

| "## Model quantization and tuning\n", |

| "\n", |

| "In general, Intel® Neural Compressor requires 4 elements in order to run: \n", |

| "1. Config file - like the example above\n", |

| "2. Model to be quantized\n", |

| "3. Calibration dataloader\n", |

| "4. Evaluation function - a function that takes a model as an argument and returns the accuracy it achieves on a certain evaluation dataset.\n", |

| "\n", |

| "### Quantizing ResNet\n", |

| "\n", |

| "The previous sections described how to quantize ResNet using the native MXNet quantization. This example shows how we can achieve the same (with the auto-tuning) using INC.\n", |

| "\n", |

| "1. Get the model" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "56385439", |

| "metadata": {}, |

| "source": [ |

| "```python\n", |

| "import logging\n", |

| "import mxnet as mx\n", |

| "from mxnet.gluon.model_zoo import vision\n", |

| "\n", |

| "logging.basicConfig()\n", |

| "logger = logging.getLogger('logger')\n", |

| "logger.setLevel(logging.INFO)\n", |

| "\n", |

| "batch_shape = (1, 3, 224, 224)\n", |

| "resnet18 = vision.resnet18_v1(pretrained=True)\n", |

| "```\n" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "3c2c69ad", |

| "metadata": {}, |

| "source": [ |

| "2. Prepare the dataset:" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "2f5cbfff", |

| "metadata": {}, |

| "source": [ |

| "```python\n", |

| "mx.test_utils.download('http://data.mxnet.io/data/val_256_q90.rec', 'data/val_256_q90.rec')\n", |

| "\n", |

| "batch_size = 16\n", |

| "mean_std = {'mean_r': 123.68, 'mean_g': 116.779, 'mean_b': 103.939,\n", |

| " 'std_r': 58.393, 'std_g': 57.12, 'std_b': 57.375}\n", |

| "\n", |

| "data = mx.io.ImageRecordIter(path_imgrec='data/val_256_q90.rec', \n", |

| " batch_size=batch_size,\n", |

| " data_shape=batch_shape[1:], \n", |

| " rand_crop=False, \n", |

| " rand_mirror=False,\n", |

| " shuffle=False, \n", |

| " **mean_std)\n", |

| "data.batch_size = batch_size\n", |

| "```\n" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "bbf77cce", |

| "metadata": {}, |

| "source": [ |

| "3. Prepare the evaluation function:" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "038c9ebd", |

| "metadata": {}, |

| "source": [ |

| "```python\n", |

| "eval_samples = batch_size*10\n", |

| "\n", |

| "def eval_func(model):\n", |

| " data.reset()\n", |

| " metric = mx.metric.Accuracy()\n", |

| " for i, batch in enumerate(data):\n", |

| " if i * batch_size >= eval_samples:\n", |

| " break\n", |

| " x = batch.data[0].as_in_context(mx.cpu())\n", |

| " label = batch.label[0].as_in_context(mx.cpu())\n", |

| " outputs = model.forward(x)\n", |

| " metric.update(label, outputs)\n", |

| " return metric.get()[1]\n", |

| "```\n" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "a4e8f737", |

| "metadata": {}, |

| "source": [ |

| "4. Run Intel® Neural Compressor:" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "8d0efa69", |

| "metadata": {}, |

| "source": [ |

| "```python\n", |

| "from neural_compressor.experimental import Quantization\n", |

| "quantizer = Quantization(\"./cnn.yaml\")\n", |

| "quantizer.model = resnet18\n", |

| "quantizer.calib_dataloader = data\n", |

| "quantizer.eval_func = eval_func\n", |

| "qnet = quantizer.fit().model\n", |

| "```\n" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "f78074c5", |

| "metadata": {}, |

| "source": [ |

| "Since this model already achieves good accuracy using native quantization (less than 1% accuracy drop), for the given configuration file, INC will end on the first configuration, quantizing all layers using `naive` calibration mode for each. To see the true potential of INC, we need a model which suffers from a larger accuracy drop after quantization.\n", |

| "\n", |

| "### Quantizing BERT\n", |

| "\n", |

| "This example shows how to use INC to quantize BERT-base for MRPC. In this case, the native MXNet quantization usually introduce a significant accuracy drop (2% - 5% using `naive` calibration mode). To simplify the code, model and task specific boilerplate has been moved to the `details.py` file.\n", |

| "\n", |

| "This is the configuration file for this example:" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "07325b6f", |

| "metadata": {}, |

| "source": [ |

| "```yaml\n", |

| "version: 1.0\n", |

| "\n", |

| "model:\n", |

| " name: bert\n", |

| " framework: mxnet\n", |

| "\n", |

| "quantization:\n", |

| " calibration:\n", |

| " sampling_size: 320 # number of samples for calibration\n", |

| "\n", |

| "tuning:\n", |

| " strategy:\n", |

| " name: basic\n", |

| " accuracy_criterion:\n", |

| " relative: 0.01\n", |

| " exit_policy:\n", |

| " timeout: 0\n", |

| " max_trials: 9999 # default is 100\n", |

| " random_seed: 9527\n", |

| "```\n" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "625ec1d1", |

| "metadata": {}, |

| "source": [ |

| "And here is the script:" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "3c41c739", |

| "metadata": {}, |

| "source": [ |

| "```python\n", |

| "from pathlib import Path\n", |

| "from functools import partial\n", |

| "\n", |

| "import details\n", |

| "from neural_compressor.experimental import Quantization, common\n", |

| "\n", |

| "# constants\n", |

| "INC_CONFIG_PATH = Path('./bert.yaml').resolve()\n", |

| "PARAMS_PATH = Path('./bert_mrpc.params').resolve()\n", |

| "OUTPUT_DIR_PATH = Path('./output/').resolve()\n", |

| "OUTPUT_MODEL_PATH = OUTPUT_DIR_PATH/'quantized_model'\n", |

| "OUTPUT_DIR_PATH.mkdir(parents=True, exist_ok=True)\n", |

| "\n", |

| "# Prepare the dataloaders (calib_dataloader is same as train_dataloader but without shuffling)\n", |

| "train_dataloader, dev_dataloader, calib_dataloader = details.preprocess_data()\n", |

| "\n", |

| "# Get the model\n", |

| "model = details.BERTModel(details.BACKBONE, dropout=0.1, num_classes=details.NUM_CLASSES)\n", |

| "model.hybridize(static_alloc=True)\n", |

| "\n", |

| "# finetune or load the parameters of already finetuned model\n", |

| "if not PARAMS_PATH.exists():\n", |

| " model = details.finetune(model, train_dataloader, dev_dataloader, OUTPUT_DIR_PATH)\n", |

| " model.save_parameters(str(PARAMS_PATH))\n", |

| "else:\n", |

| " model.load_parameters(str(PARAMS_PATH), ctx=details.CTX, cast_dtype=True)\n", |

| "\n", |

| "# run INC\n", |

| "calib_dataloader.batch_size = details.BATCH_SIZE\n", |

| "eval_func = partial(details.evaluate, dataloader=dev_dataloader)\n", |

| "\n", |

| "quantizer = Quantization(str(INC_CONFIG_PATH)) # 1. Config file\n", |

| "quantizer.model = common.Model(model) # 2. Model to be quantized\n", |

| "quantizer.calib_dataloader = calib_dataloader # 3. Calibration dataloader\n", |

| "quantizer.eval_func = eval_func # 4. Evaluation function\n", |

| "quantized_model = quantizer.fit().model\n", |

| "\n", |

| "# save the quantized model\n", |

| "quantized_model.export(str(OUTPUT_MODEL_PATH))\n", |

| "```\n" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "ddfa613a", |

| "metadata": {}, |

| "source": [ |

| "With the evaluation function hidden in the `details.py` file:" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "2f0c598f", |

| "metadata": {}, |

| "source": [ |

| "```python\n", |

| "def evaluate(model, dataloader):\n", |

| " metric = METRIC()\n", |

| " for batch in dataloader:\n", |

| " input_ids, segment_ids, valid_length, label = batch\n", |

| " input_ids = input_ids.as_in_context(CTX)\n", |

| " segment_ids = segment_ids.as_in_context(CTX)\n", |

| " valid_length = valid_length.as_in_context(CTX)\n", |

| " label = label.as_in_context(CTX).reshape((-1))\n", |

| "\n", |

| " out = model(input_ids, segment_ids, valid_length)\n", |

| " metric.update([label], [out])\n", |

| "\n", |

| " metric_name, metric_val = metric.get()\n", |

| " return metric_val\n", |

| "```\n" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "614b0651", |

| "metadata": {}, |

| "source": [ |

| "For comparision, this is how one could quantize this model using MXNet native quantization (this function is also located in the `details.py` file):" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "04f4839e", |

| "metadata": {}, |

| "source": [ |

| "```python\n", |

| "def native_quantization(model, calib_dataloader, dev_dataloader):\n", |

| " quantized_model = quantize_net_v2(model,\n", |

| " quantize_mode='smart',\n", |

| " calib_data=calib_dataloader,\n", |

| " calib_mode='naive',\n", |

| " num_calib_examples=BATCH_SIZE*10)\n", |

| " print('Native quantization results: {}'.format(evaluate(quantized_model, dev_dataloader)))\n", |

| " return quantized_model\n", |

| "```\n" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "96661093", |

| "metadata": {}, |

| "source": [ |

| "For complete code, see this example on the [official GitHub repository](https://github.com/apache/mxnet/tree/v1.x/example/quantization_inc/BERT_MRPC).\n", |

| "\n", |

| "#### Results:\n", |

| "\n", |

| "Environment:\n", |

| "- c6i.16xlarge Amazon EC2 instance (Intel(R) Xeon(R) Platinum 8375C CPU @ 2.90GHz)\n", |

| "- Ubuntu 20.04 LTS\n", |

| "- MXNet 1.9\n", |

| "- INC 1.9.1\n", |

| "\n", |

| "Results on the validation dataset:\n", |

| "\n", |

| "| Quantization method | Accuracy | F1 | Relative accuracy loss [%] | Calibration/tuning time [s] | Speedup |\n", |

| "|:----------------------------:|:--------:|:------:|:--------------------------:|:----------------------------:|:-------:|\n", |

| "| **No quantization (f32)** | **0.8529** | **0.8956** | **0** | **0** | **1.0** |\n", |

| "| Native 'naive', 10 batches | 0.8259 | 0.8775 | 3.1657 | 31 | 1.3811 |\n", |

| "| Native 'naive', 20 batches | 0.8210 | 0.8731 | 3.7402 | 58 | 1.3866 |\n", |

| "| Native 'entropy', 10 batches | 0.8064 | 0.8557 | 5.4520 | 37 | 1.3789 |\n", |

| "| Native 'entropy', 20 batches | 0.8137 | 0.8624 | 4.5961 | 67 | 1.3460 |\n", |

| "| INC, 'basic' | 0.8456 | 0.8889 | 0.8559 | 197 | 1.4418 |\n", |

| "| INC, 'bayesian' | 0.8529 | 0.8888 | 0 | 129 | 1.4275 | \n", |

| "| INC, 'mse' | 0.8480 | 0.8954 | 0.5745 | 974 | 0.9642 |\n", |

| "\n", |

| "All INC strategies found configurations meeting the 1% relative accuracy loss criterion. Only the `mse` strategy struggled, taking the longest time and generating configuration that is slower than the f32 model. Although these results may suggest that the `mse` strategy is the worst and the `bayesian` strategy is the best, different strategies may give better results for specific models and tasks. Usually the `basic` strategy is the most stable one.\n", |

| "\n", |

| "Here is an example of a configuration generated by INC with the `basic` strategy:\n", |

| "\n", |

| "- Layers quantized using min-max (`naive`) calibration algorithm: \n", |

| " ```\n", |

| " {'bertclassifier0_dropout0_fwd', 'bertencoder0_layernorm0_layernorm0', 'bertencoder0_transformer0_dotproductselfattentioncell0_dropout0_fwd', 'bertencoder0_transformer0_dotproductselfattentioncell0_reshape3', 'bertencoder0_transformer0_dotproductselfattentioncell0_reshape7', 'bertencoder0_transformer0_layernorm0_layernorm0', 'bertencoder0_transformer0_positionwiseffn0_layernorm0_layernorm0', 'bertencoder0_transformer10_dotproductselfattentioncell0_dropout0_fwd', 'bertencoder0_transformer10_dotproductselfattentioncell0_reshape3', 'bertencoder0_transformer10_dotproductselfattentioncell0_reshape7', 'bertencoder0_transformer10_layernorm0_layernorm0', 'bertencoder0_transformer10_positionwiseffn0_layernorm0_layernorm0', 'bertencoder0_transformer11_dotproductselfattentioncell0_dropout0_fwd', 'bertencoder0_transformer11_dotproductselfattentioncell0_reshape3', 'bertencoder0_transformer11_dotproductselfattentioncell0_reshape7', 'bertencoder0_transformer11_layernorm0_layernorm0', 'bertencoder0_transformer1_dotproductselfattentioncell0_dropout0_fwd', 'bertencoder0_transformer1_dotproductselfattentioncell0_reshape3', 'bertencoder0_transformer1_dotproductselfattentioncell0_reshape7', 'bertencoder0_transformer1_layernorm0_layernorm0', 'bertencoder0_transformer1_positionwiseffn0_layernorm0_layernorm0', 'bertencoder0_transformer2_dotproductselfattentioncell0_dropout0_fwd', 'bertencoder0_transformer2_dotproductselfattentioncell0_reshape3', 'bertencoder0_transformer2_dotproductselfattentioncell0_reshape7', 'bertencoder0_transformer2_layernorm0_layernorm0', 'bertencoder0_transformer2_positionwiseffn0_layernorm0_layernorm0', 'bertencoder0_transformer3_dotproductselfattentioncell0_dropout0_fwd', 'bertencoder0_transformer3_dotproductselfattentioncell0_reshape3', 'bertencoder0_transformer3_dotproductselfattentioncell0_reshape7', 'bertencoder0_transformer3_layernorm0_layernorm0', 'bertencoder0_transformer3_positionwiseffn0_layernorm0_layernorm0', 'bertencoder0_transformer4_dotproductselfattentioncell0_dropout0_fwd', 'bertencoder0_transformer4_dotproductselfattentioncell0_reshape3', 'bertencoder0_transformer4_dotproductselfattentioncell0_reshape7', 'bertencoder0_transformer4_layernorm0_layernorm0', 'bertencoder0_transformer4_positionwiseffn0_layernorm0_layernorm0', 'bertencoder0_transformer5_dotproductselfattentioncell0_dropout0_fwd', 'bertencoder0_transformer5_dotproductselfattentioncell0_reshape3', 'bertencoder0_transformer5_dotproductselfattentioncell0_reshape7', 'bertencoder0_transformer5_layernorm0_layernorm0', 'bertencoder0_transformer5_positionwiseffn0_layernorm0_layernorm0', 'bertencoder0_transformer6_dotproductselfattentioncell0_dropout0_fwd', 'bertencoder0_transformer6_dotproductselfattentioncell0_reshape3', 'bertencoder0_transformer6_dotproductselfattentioncell0_reshape7', 'bertencoder0_transformer6_layernorm0_layernorm0', 'bertencoder0_transformer6_positionwiseffn0_layernorm0_layernorm0', 'bertencoder0_transformer7_dotproductselfattentioncell0_dropout0_fwd', 'bertencoder0_transformer7_dotproductselfattentioncell0_reshape3', 'bertencoder0_transformer7_dotproductselfattentioncell0_reshape7', 'bertencoder0_transformer7_layernorm0_layernorm0', 'bertencoder0_transformer7_positionwiseffn0_layernorm0_layernorm0', 'bertencoder0_transformer8_dotproductselfattentioncell0_dropout0_fwd', 'bertencoder0_transformer8_dotproductselfattentioncell0_reshape3', 'bertencoder0_transformer8_dotproductselfattentioncell0_reshape7', 'bertencoder0_transformer8_layernorm0_layernorm0', 'bertencoder0_transformer8_positionwiseffn0_layernorm0_layernorm0', 'bertencoder0_transformer9_dotproductselfattentioncell0_dropout0_fwd', 'bertencoder0_transformer9_dotproductselfattentioncell0_reshape3', 'bertencoder0_transformer9_dotproductselfattentioncell0_reshape7', 'bertencoder0_transformer9_layernorm0_layernorm0', 'bertencoder0_transformer9_positionwiseffn0_layernorm0_layernorm0', 'bertmodel0_reshape0', 'sg_mkldnn_fully_connected_0', 'sg_mkldnn_fully_connected_1', 'sg_mkldnn_fully_connected_11', 'sg_mkldnn_fully_connected_12', 'sg_mkldnn_fully_connected_13', 'sg_mkldnn_fully_connected_15', 'sg_mkldnn_fully_connected_16', 'sg_mkldnn_fully_connected_17', 'sg_mkldnn_fully_connected_19', 'sg_mkldnn_fully_connected_20', 'sg_mkldnn_fully_connected_21', 'sg_mkldnn_fully_connected_23', 'sg_mkldnn_fully_connected_24', 'sg_mkldnn_fully_connected_25', 'sg_mkldnn_fully_connected_27', 'sg_mkldnn_fully_connected_28', 'sg_mkldnn_fully_connected_29', 'sg_mkldnn_fully_connected_3', 'sg_mkldnn_fully_connected_31', 'sg_mkldnn_fully_connected_32', 'sg_mkldnn_fully_connected_33', 'sg_mkldnn_fully_connected_35', 'sg_mkldnn_fully_connected_36', 'sg_mkldnn_fully_connected_37', 'sg_mkldnn_fully_connected_39', 'sg_mkldnn_fully_connected_4', 'sg_mkldnn_fully_connected_40', 'sg_mkldnn_fully_connected_41', 'sg_mkldnn_fully_connected_43', 'sg_mkldnn_fully_connected_44', 'sg_mkldnn_fully_connected_45', 'sg_mkldnn_fully_connected_47', 'sg_mkldnn_fully_connected_48', 'sg_mkldnn_fully_connected_49', 'sg_mkldnn_fully_connected_5', 'sg_mkldnn_fully_connected_7', 'sg_mkldnn_fully_connected_8', 'sg_mkldnn_fully_connected_9', 'sg_mkldnn_fully_connected_eltwise_10', 'sg_mkldnn_fully_connected_eltwise_14', 'sg_mkldnn_fully_connected_eltwise_18', 'sg_mkldnn_fully_connected_eltwise_2', 'sg_mkldnn_fully_connected_eltwise_22', 'sg_mkldnn_fully_connected_eltwise_26', 'sg_mkldnn_fully_connected_eltwise_30', 'sg_mkldnn_fully_connected_eltwise_34', 'sg_mkldnn_fully_connected_eltwise_38', 'sg_mkldnn_fully_connected_eltwise_42', 'sg_mkldnn_fully_connected_eltwise_46', 'sg_mkldnn_fully_connected_eltwise_6'}\n", |

| " ```\n", |

| "\n", |

| "- Layers quantized using KL (`entropy`) calibration algorithm: \n", |

| " ```\n", |

| " {'sg_mkldnn_selfatt_qk_0', 'sg_mkldnn_selfatt_qk_10', 'sg_mkldnn_selfatt_qk_12', 'sg_mkldnn_selfatt_qk_14', 'sg_mkldnn_selfatt_qk_16', 'sg_mkldnn_selfatt_qk_18', 'sg_mkldnn_selfatt_qk_2', 'sg_mkldnn_selfatt_qk_20', 'sg_mkldnn_selfatt_qk_22', 'sg_mkldnn_selfatt_qk_4', 'sg_mkldnn_selfatt_qk_6', 'sg_mkldnn_selfatt_qk_8', 'sg_mkldnn_selfatt_valatt_1', 'sg_mkldnn_selfatt_valatt_11', 'sg_mkldnn_selfatt_valatt_13', 'sg_mkldnn_selfatt_valatt_15', 'sg_mkldnn_selfatt_valatt_17', 'sg_mkldnn_selfatt_valatt_19', 'sg_mkldnn_selfatt_valatt_21', 'sg_mkldnn_selfatt_valatt_23', 'sg_mkldnn_selfatt_valatt_3', 'sg_mkldnn_selfatt_valatt_5', 'sg_mkldnn_selfatt_valatt_7', 'sg_mkldnn_selfatt_valatt_9'}\n", |

| " ```\n", |

| "\n", |

| "- Layers excluded from quantization: \n", |

| " ```\n", |

| " {'sg_mkldnn_fully_connected_43'}\n", |

| " ```\n", |

| "\n", |

| "## Tips\n", |

| "- In order to get a solution that generalizes well, evaluate the model (in eval_func) on a representative dataset.\n", |

| "- With `history.snapshot` file (generated by INC) you can recover any model that was generated during the tuning process:\n", |

| " ```python\n", |

| " from neural_compressor.utils.utility import recover\n", |

| "\n", |

| " quantized_model = recover(f32_model, 'nc_workspace/<tuning date>/history.snapshot', configuration_idx).model\n", |

| " ```\n", |

| "\n", |

| "<!-- INSERT SOURCE DOWNLOAD BUTTONS -->" |

| ] |

| } |

| ], |

| "metadata": { |

| "language_info": { |

| "name": "python" |

| } |

| }, |

| "nbformat": 4, |

| "nbformat_minor": 5 |

| } |