| { |

| "cells": [ |

| { |

| "cell_type": "markdown", |

| "id": "cc18ba7d", |

| "metadata": {}, |

| "source": [ |

| "<!--- Licensed to the Apache Software Foundation (ASF) under one -->\n", |

| "<!--- or more contributor license agreements. See the NOTICE file -->\n", |

| "<!--- distributed with this work for additional information -->\n", |

| "<!--- regarding copyright ownership. The ASF licenses this file -->\n", |

| "<!--- to you under the Apache License, Version 2.0 (the -->\n", |

| "<!--- \"License\"); you may not use this file except in compliance -->\n", |

| "<!--- with the License. You may obtain a copy of the License at -->\n", |

| "\n", |

| "<!--- http://www.apache.org/licenses/LICENSE-2.0 -->\n", |

| "\n", |

| "<!--- Unless required by applicable law or agreed to in writing, -->\n", |

| "<!--- software distributed under the License is distributed on an -->\n", |

| "<!--- \"AS IS\" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY -->\n", |

| "<!--- KIND, either express or implied. See the License for the -->\n", |

| "<!--- specific language governing permissions and limitations -->\n", |

| "<!--- under the License. -->\n", |

| "\n", |

| "# Image Augmentation\n", |

| "\n", |

| "Augmentation is the process of randomly adjusting the dataset samples used for training. As a result, the network sees a greater diversity of samples and is therefore less likely to overfit the training dataset. The technique can also reduce the influence of spurious characteristics of the dataset. For example, if all the images in a dataset come from the same camera, they may share the same color tint, which is unhelpful when you want to apply the model to images from other cameras. You can avoid this by randomly shifting the colors of each image slightly and training your network on these augmented images.\n", |

| "\n", |

| "Although this technique can be applied in a variety of domains, it's very common in Computer Vision, and we will focus on image augmentations in this tutorial. Some example image augmentations include random crops and flips, and adjustments to the brightness and contrast.\n", |

| "\n", |

| "#### What are the prerequisites?\n", |

| "\n", |

| "You should be familiar with the concept of a transform and how to apply it to a dataset before reading this tutorial. Check out the [Data Transforms tutorial]() if this is new to you or you need a quick refresher.\n", |

| "\n", |

| "#### Where can I find the augmentation transforms?\n", |

| "\n", |

| "You can find them in the `mxnet.gluon.data.vision.transforms` module, alongside the deterministic transforms we've seen previously, such as `ToTensor`, `Normalize`, `CenterCrop` and `Resize`. Augmentations involve an element of randomness and all the augmentation transforms are prefixed with `Random`, such as `RandomResizedCrop` and `RandomBrightness`. We'll start by importing MXNet and the `transforms` module, along with Matplotlib for plotting." |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "a3737cc6", |

| "metadata": {}, |

| "source": [ |

| "```python\n", |

| "import matplotlib.pyplot as plt\n", |

| "import mxnet as mx\n", |

| "from mxnet.gluon.data.vision import transforms\n", |

| "```\n" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "ba930f9c", |

| "metadata": {}, |

| "source": [ |

| "#### Sample Image\n", |

| "\n", |

| "So that we can see the effects of all the vision augmentations, we'll take a sample image of a giraffe and apply various augmentations to it. Let's first see what the original image looks like." |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "669fcb7f", |

| "metadata": {}, |

| "source": [ |

| "```python\n", |

| "image_url = 'https://raw.githubusercontent.com/dmlc/web-data/master/mxnet/doc/tutorials/data_aug/inputs/0.jpg'\n", |

| "mx.test_utils.download(image_url, \"giraffe.jpg\")\n", |

| "example_image = mx.image.imread(\"giraffe.jpg\")\n", |

| "plt.imshow(example_image.asnumpy())\n", |

| "```\n" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "1d05841b", |

| "metadata": {}, |

| "source": [ |

| "\n", |

| "\n", |

| "\n", |

| "Since these augmentations are random, we'll apply the same augmentation a few times and plot all of the outputs. We define a few utility functions to help with this." |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "f6212ee5", |

| "metadata": {}, |

| "source": [ |

| "```python\n", |

| "def show_images(imgs, num_rows, num_cols, scale=2):\n", |

| "    # show augmented images in a grid layout\n", |

| "    aspect_ratio = imgs[0].shape[0]/imgs[0].shape[1]\n", |

| "    figsize = (num_cols * scale, num_rows * scale * aspect_ratio)\n", |

| "    _, axes = plt.subplots(num_rows, num_cols, figsize=figsize)\n", |

| "    for i in range(num_rows):\n", |

| "        for j in range(num_cols):\n", |

| "            axes[i][j].imshow(imgs[i * num_cols + j].asnumpy())\n", |

| "            axes[i][j].axes.get_xaxis().set_visible(False)\n", |

| "            axes[i][j].axes.get_yaxis().set_visible(False)\n", |

| "    plt.subplots_adjust(hspace=0.1, wspace=0)\n", |

| "    return axes\n", |

| "\n", |

| "def apply(img, aug, num_rows=2, num_cols=4, scale=3):\n", |

| "    # apply augmentation multiple times to obtain different samples\n", |

| "    Y = [aug(img) for _ in range(num_rows * num_cols)]\n", |

| "    show_images(Y, num_rows, num_cols, scale)\n", |

| "```\n" |

| ] |

| }, |

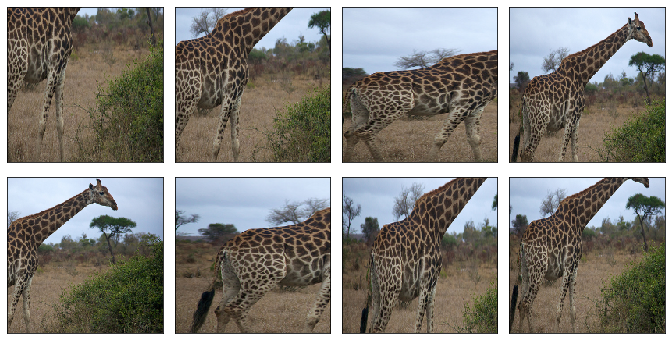

| { |

| "cell_type": "markdown", |

| "id": "f1056210", |

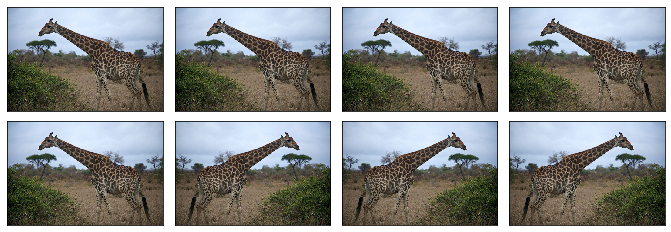

| "metadata": {}, |

| "source": [ |

| "# Spatial Augmentation\n", |

| "\n", |

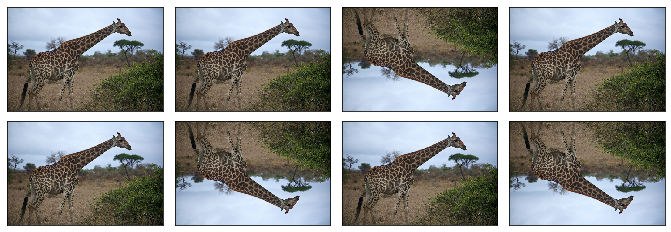

| "One form of augmentation affects the spatial position of pixel values. Using combinations of slicing, scaling, translating, rotating and flipping, the values of the original image can be shifted to create new images. Some operations (like scaling and rotation) require interpolation, because pixels in the new image are combinations of pixels in the original image.\n", |

| "\n", |

| "### `RandomResizedCrop`\n", |

| "\n", |

| "Many Computer Vision tasks, such as image classification and object detection, should be robust to changes in the scale and position of objects in the image. You can incorporate this into the network using pooling layers, but an alternative method is to crop random regions of the original image.\n", |

| "\n", |

| "As an example, we randomly (using a uniform distribution) crop a region of the image with:\n", |

| "\n", |

| "* an area of 10% to 100% of the original area\n", |

| "* a ratio of width to height between 0.5 and 2\n", |

| "\n", |

| "And then we resize this cropped region to 200 by 200 pixels." |

| ] |

| }, |
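| { |

| "cell_type": "markdown", |

| "id": "e5b8c2a4", |

| "metadata": {}, |

| "source": [ |

| "As a worked illustration of how the two ranges above pin down a crop, assume an area fraction $s$ and a width-to-height ratio $r$ have been drawn (these symbols are introduced here for illustration and are not part of the API). For an original image of width $W$ and height $H$, the crop width $w$ and height $h$ must satisfy $w h = s W H$ and $w / h = r$, so:\n", |

| "\n", |

| "$$w = \\sqrt{s \\, W H \\, r}, \\qquad h = \\sqrt{\\frac{s \\, W H}{r}}$$\n", |

| "\n", |

| "For instance, with $s = 0.25$ and $r = 1$ on a $400 \\times 400$ image, the crop is $200 \\times 200$ pixels; with $r = 2$ and the same area it is roughly $283 \\times 141$. A sampled shape that does not fit inside the image has to be rejected or adjusted, and how that is handled depends on the implementation." |

| ] |

| }, |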

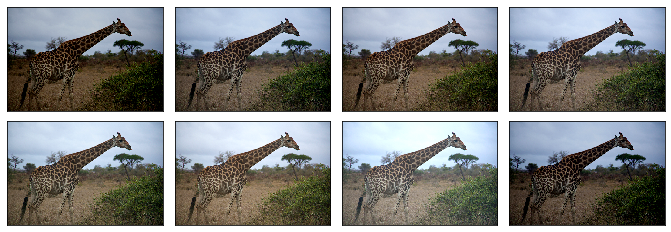

| { |

| "cell_type": "markdown", |

| "id": "469718de", |

| "metadata": {}, |

| "source": [ |

| "```python\n", |

| "shape_aug = transforms.RandomResizedCrop(size=(200, 200),\n", |

| "                                         scale=(0.1, 1),\n", |

| "                                         ratio=(0.5, 2))\n", |

| "apply(example_image, shape_aug)\n", |

| "```\n" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "1846ced7", |

| "metadata": {}, |

| "source": [ |

| "\n", |

| "\n", |

| "\n", |

| "### `RandomFlipLeftRight`\n", |

| "\n", |

| "A simple augmentation technique is flipping. Usually, flipping horizontally doesn't change the category of the object and results in an image that's still plausible in the real world. Using `RandomFlipLeftRight`, we randomly flip the image horizontally 50% of the time." |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "eba97cb3", |

| "metadata": {}, |

| "source": [ |

| "```python\n", |

| "apply(example_image, transforms.RandomFlipLeftRight())\n", |

| "```\n" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "716ddb04", |

| "metadata": {}, |

| "source": [ |

| "\n", |

| "\n", |

| "\n", |

| "### `RandomFlipTopBottom`\n", |

| "\n", |

| "Although it's not as common as flipping left and right, you can flip the image vertically 50% of the time with `RandomFlipTopBottom`. With our giraffe example, we end up with less plausible samples than with horizontal flipping, with the ground above the sky in some cases." |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "326f8e6e", |

| "metadata": {}, |

| "source": [ |

| "```python\n", |

| "apply(example_image, transforms.RandomFlipTopBottom())\n", |

| "```\n" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "2fbca9cc", |

| "metadata": {}, |

| "source": [ |

| "\n", |

| "\n", |

| "\n", |

| "# Color Augmentation\n", |

| "\n", |

| "Usually, exact coloring doesn't play a significant role in the classification or detection of objects, so augmenting the colors of images is a good technique to make the network invariant to color shifts. Color properties that can be changed include brightness, contrast, saturation and hue.\n", |

| "\n", |

| "### `RandomBrightness`\n", |

| "\n", |

| "Use `RandomBrightness` to add a random brightness jitter to images. Use the `brightness` parameter to control the amount of jitter in brightness, with values from 0 (no change) to 1 (potentially large change). `brightness` doesn't specify whether the augmented image will be lighter or darker, just the potential strength of the effect. Specifically, the augmentation is given by:" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "769e557c", |

| "metadata": {}, |

| "source": [ |

| "```\n", |

| "alpha = 1.0 + random.uniform(-brightness, brightness)\n", |

| "image *= alpha\n", |

| "```\n" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "32ce0b36", |

| "metadata": {}, |

| "source": [ |

| "So by setting `brightness` to 0.5, we randomly scale the brightness of the image to a value between 50% ($1-0.5$) and 150% ($1+0.5$) of the original." |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "a3e38928", |

| "metadata": {}, |

| "source": [ |

| "```python\n", |

| "apply(example_image, transforms.RandomBrightness(0.5))\n", |

| "```\n" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "151b6c14", |

| "metadata": {}, |

| "source": [ |

| "\n", |

| "\n", |

| "\n", |

| "### `RandomContrast`\n", |

| "\n", |

| "Use `RandomContrast` to add a random contrast jitter to an image. Contrast can be thought of as the degree to which light and dark colors in the image differ. Use the `contrast` parameter to control the amount of jitter in contrast, with values from 0 (no change) to 1 (potentially large change). `contrast` doesn't specify whether the contrast of the augmented image will be higher or lower, just the potential strength of the effect. Specifically, the augmentation is given by:" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "b1400cab", |

| "metadata": {}, |

| "source": [ |

| "```\n", |

| "coef = nd.array([[[0.299, 0.587, 0.114]]])\n", |

| "alpha = 1.0 + random.uniform(-contrast, contrast)\n", |

| "gray = image * coef\n", |

| "gray = (3.0 * (1.0 - alpha) / gray.size) * nd.sum(gray)\n", |

| "image *= alpha\n", |

| "image += gray\n", |

| "```\n" |

| ] |

| }, |
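| { |

| "cell_type": "markdown", |

| "id": "d7f3a6c5", |

| "metadata": {}, |

| "source": [ |

| "Reading the snippet above for an image with $N$ pixels and three color channels (so that `gray.size` is $3N$), the update can be condensed into a single expression. The symbols $x_{p,c}$ (value of channel $c$ at pixel $p$), $Y_p$ and $\\bar{Y}$ are notation introduced here for illustration, and the derivation assumes a height by width by channel layout with RGB channel order:\n", |

| "\n", |

| "$$Y_p = 0.299\\, x_{p,R} + 0.587\\, x_{p,G} + 0.114\\, x_{p,B}, \\qquad \\bar{Y} = \\frac{1}{N} \\sum_{p} Y_p$$\n", |

| "\n", |

| "$$\\frac{3\\,(1 - \\alpha)}{3N} \\sum_{p} Y_p = (1 - \\alpha)\\, \\bar{Y} \\quad \\Longrightarrow \\quad x'_{p,c} = \\alpha\\, x_{p,c} + (1 - \\alpha)\\, \\bar{Y}$$\n", |

| "\n", |

| "In other words, each value is blended with the mean luminance of the image: $\\alpha = 1$ leaves the image unchanged, $\\alpha < 1$ pulls every value towards $\\bar{Y}$ (lower contrast), and $\\alpha > 1$ pushes values away from it (higher contrast)." |

| ] |

| }, |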

| { |

| "cell_type": "markdown", |

| "id": "6abc27d8", |

| "metadata": {}, |

| "source": [ |

| "```python\n", |

| "apply(example_image, transforms.RandomContrast(0.5))\n", |

| "```\n" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "ae9092ef", |

| "metadata": {}, |

| "source": [ |

| "\n", |

| "\n", |

| "\n", |

| "### `RandomSaturation`\n", |

| "\n", |

| "Use `RandomSaturation` to add a random saturation jitter to an image. Saturation can be thought of as the 'amount' of color in an image. Use the `saturation` parameter to control the amount of jitter in saturation, with values from 0 (no change) to 1 (potentially large change). `saturation` doesn't specify whether the saturation of the augmented image will be higher or lower, just the potential strength of the effect. Specifically, the augmentation uses the method detailed [here](https://beesbuzz.biz/code/16-hsv-color-transforms)." |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "13eba3f1", |

| "metadata": {}, |

| "source": [ |

| "```python\n", |

| "apply(example_image, transforms.RandomSaturation(0.5))\n", |

| "```\n" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "b0d18946", |

| "metadata": {}, |

| "source": [ |

| "\n", |

| "\n", |

| "\n", |

| "### `RandomHue`\n", |

| "\n", |

| "Use `RandomHue` to add a random hue jitter to images. Hue can be thought of as the 'shade' of the colors in an image. Use the `hue` parameter to control the amount of jitter in hue, with values from 0 (no change) to 1 (potentially large change). `hue` doesn't specify whether the hue of the augmented image will be shifted one way or the other, just the potential strength of the effect. Specifically, the augmentation uses the method detailed [here](https://beesbuzz.biz/code/16-hsv-color-transforms)." |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "ff990fcc", |

| "metadata": {}, |

| "source": [ |

| "```python\n", |

| "apply(example_image, transforms.RandomHue(0.5))\n", |

| "```\n" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "faef63cf", |

| "metadata": {}, |

| "source": [ |

| "\n", |

| "\n", |

| "\n", |

| "### `RandomColorJitter`\n", |

| "\n", |

| "`RandomColorJitter` is a convenience transform that can be used to perform multiple color augmentations at once. You can set the `brightness`, `contrast`, `saturation` and `hue` jitters, which work the same way as in the individual transforms above." |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "8ccd8649", |

| "metadata": {}, |

| "source": [ |

| "```python\n", |

| "color_aug = transforms.RandomColorJitter(brightness=0.5,\n", |

| "                                         contrast=0.5,\n", |

| "                                         saturation=0.5,\n", |

| "                                         hue=0.5)\n", |

| "apply(example_image, color_aug)\n", |

| "```\n" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "6f253420", |

| "metadata": {}, |

| "source": [ |

| "\n", |

| "\n", |

| "\n", |

| "### `RandomLighting`\n", |

| "\n", |

| "Use `RandomLighting` for an AlexNet-style PCA-based noise augmentation." |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "3a7c6b15", |

| "metadata": {}, |

| "source": [ |

| "```python\n", |

| "apply(example_image, transforms.RandomLighting(alpha=1))\n", |

| "```\n" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "255db115", |

| "metadata": {}, |

| "source": [ |

| "\n", |

| "\n", |

| "# Composed Augmentations\n", |

| "\n", |

| "In practice, we apply multiple augmentation techniques to an image to increase the variety of images in the dataset. Using the `Compose` transform that was introduced in the [Data Transforms tutorial](), we can chain three of the transforms used above." |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "0b2940c2", |

| "metadata": {}, |

| "source": [ |

| "```python\n", |

| "augs = transforms.Compose([\n", |

| "    transforms.RandomFlipLeftRight(), color_aug, shape_aug])\n", |

| "apply(example_image, augs)\n", |

| "```\n" |

| ] |

| }, |

| { |

| "cell_type": "markdown", |

| "id": "8fd5344b", |

| "metadata": {}, |

| "source": [ |

| "\n", |

| "\n", |

| "<!-- INSERT SOURCE DOWNLOAD BUTTONS -->" |

| ] |

| } |

| ], |

| "metadata": { |

| "language_info": { |

| "name": "python" |

| } |

| }, |

| "nbformat": 4, |

| "nbformat_minor": 5 |

| } |