Add 'webindex/' from commit '91dc7cb6fc72c79a53c6b7d0a6c0599cd8eacb9b'

git-subtree-dir: webindex

git-subtree-mainline: f762da6d8f93dec655741632dd534d1287d1a6ec

git-subtree-split: 91dc7cb6fc72c79a53c6b7d0a6c0599cd8eacb9b

diff --git a/LICENSE b/LICENSE

index 8f71f43..d645695 100644

--- a/LICENSE

+++ b/LICENSE

@@ -1,3 +1,4 @@

+

Apache License

Version 2.0, January 2004

http://www.apache.org/licenses/

@@ -178,7 +179,7 @@

APPENDIX: How to apply the Apache License to your work.

To apply the Apache License to your work, attach the following

- boilerplate notice, with the fields enclosed by brackets "{}"

+ boilerplate notice, with the fields enclosed by brackets "[]"

replaced with your own identifying information. (Don't include

the brackets!) The text should be enclosed in the appropriate

comment syntax for the file format. We also recommend that a

@@ -186,7 +187,7 @@

same "printed page" as the copyright notice for easier

identification within third-party archives.

- Copyright {yyyy} {name of copyright owner}

+ Copyright [yyyy] [name of copyright owner]

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

@@ -199,4 +200,3 @@

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

-

diff --git a/README.md b/README.md

index f869d20..6a9cfba 100644

--- a/README.md

+++ b/README.md

@@ -1,76 +1 @@

-![Webindex][logo]

----

-[![Build Status][ti]][tl] [![Apache License][li]][ll]

-

-Webindex is an example [Apache Fluo][fluo] application that incrementally indexes links to web pages

-in multiple ways. If you are new to Fluo, you may want start with the [Fluo tour][tour] as the

-WebIndex application is more complicated. For more information on how the WebIndex application

-works, view the [tables](docs/tables.md) and [code](docs/code-guide.md) documentation.

-

-Webindex utilizes multiple projects. [Common Crawl][cc] web crawl data is used as the input.

-[Apache Spark][spark] is used to initialize Fluo and incrementally load data into Fluo. [Apache

-Accumulo][accumulo] is used to hold the indexes and Fluo's data. Fluo is used to continuously

-combine new and historical information about web pages and update an external index when changes

-occur. Webindex has simple UI built using [Spark Java][sparkjava] that allows querying the indexes.

-

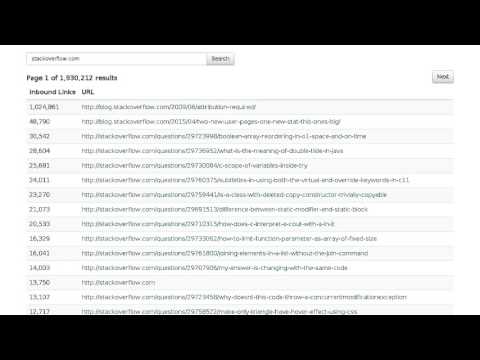

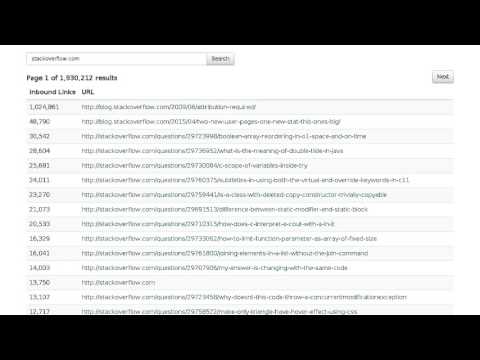

-Below is a video showing repeatedly querying stackoverflow.com while Webindex was running for three

-days on EC2. The video was made by querying the Webindex instance periodically and taking a

-screenshot. More details about this video are available in this [blog post][bp].

-

-[](http://www.youtube.com/watch?v=mJJNJbPN2EI)

-

-## Running WebIndex

-

-If you are new to WebIndex, the simplest way to run the application is to run the development

-server. First, clone the WebIndex repo:

-

- git clone https://github.com/astralway/webindex.git

-

-Next, on a machine where Java and Maven are installed, run the development server using the

-`webindex` command:

-

- cd webindex/

- ./bin/webindex dev

-

-This will build and start the development server which will log to the console. This 'dev' command

-has several command line options which can be viewed by running with `-h`. When you want to

-terminate the server, press `CTRL-c`.

-

-The development server starts a MiniAccumuloCluster and runs MiniFluo on top of it. It parses a

-CommonCrawl data file and creates a file at `data/1000-pages.txt` with 1000 pages that are loaded

-into MiniFluo. The number of pages loaded can be changed to 5000 by using the command below:

-

- ./bin/webindex dev --pages 5000

-

-The pages are processed by Fluo which exports indexes to Accumulo. The development server also

-starts a web application at [http://localhost:4567](http://localhost:4567) that queries indexes in

-Accumulo.

-

-If you would like to run WebIndex on a cluster, follow the [install] instructions.

-

-### Viewing metrics

-

-Metrics can be sent from the development server to InfluxDB and viewed in Grafana. You can either

-setup InfluxDB+Grafana on you own or use [Uno] command `uno setup metrics`. After a metrics server

-is started, start the development server the option `--metrics` to start sending metrics:

-

- ./bin/webindex dev --metrics

-

-Fluo metrics can be viewed in Grafana. To view application-specific metrics for Webindex, import

-the WebIndex Grafana dashboard located at `contrib/webindex-dashboard.json`.

-

-[tour]: https://fluo.apache.org/tour/

-[sparkjava]: http://sparkjava.com/

-[spark]: https://spark.apache.org/

-[accumulo]: https://accumulo.apache.org/

-[fluo]: https://fluo.apache.org/

-[pc]: https://github.com/astralway/phrasecount

-[Uno]: https://github.com/astralway/uno

-[cc]: https://commoncrawl.org/

-[install]: docs/install.md

-[ti]: https://travis-ci.org/astralway/webindex.svg?branch=master

-[tl]: https://travis-ci.org/astralway/webindex

-[li]: http://img.shields.io/badge/license-ASL-blue.svg

-[ll]: https://github.com/astralway/webindex/blob/master/LICENSE

-[logo]: contrib/webindex.png

-[bp]: https://fluo.apache.org/blog/2016/01/11/webindex-long-run/#videos-from-run

+Examples for Apache Fluo

diff --git a/phrasecount/.gitignore b/phrasecount/.gitignore

new file mode 100644

index 0000000..93eea5d

--- /dev/null

+++ b/phrasecount/.gitignore

@@ -0,0 +1,6 @@

+.classpath

+.project

+.settings

+target

+.idea

+*.iml

diff --git a/phrasecount/.travis.yml b/phrasecount/.travis.yml

new file mode 100644

index 0000000..e36964e

--- /dev/null

+++ b/phrasecount/.travis.yml

@@ -0,0 +1,12 @@

+language: java

+jdk:

+ - oraclejdk8

+script: mvn verify

+notifications:

+ irc:

+ channels:

+ - "chat.freenode.net#fluo"

+ on_success: always

+ on_failure: always

+ use_notice: true

+ skip_join: true

diff --git a/phrasecount/LICENSE b/phrasecount/LICENSE

new file mode 100644

index 0000000..e06d208

--- /dev/null

+++ b/phrasecount/LICENSE

@@ -0,0 +1,202 @@

+Apache License

+ Version 2.0, January 2004

+ http://www.apache.org/licenses/

+

+ TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

+

+ 1. Definitions.

+

+ "License" shall mean the terms and conditions for use, reproduction,

+ and distribution as defined by Sections 1 through 9 of this document.

+

+ "Licensor" shall mean the copyright owner or entity authorized by

+ the copyright owner that is granting the License.

+

+ "Legal Entity" shall mean the union of the acting entity and all

+ other entities that control, are controlled by, or are under common

+ control with that entity. For the purposes of this definition,

+ "control" means (i) the power, direct or indirect, to cause the

+ direction or management of such entity, whether by contract or

+ otherwise, or (ii) ownership of fifty percent (50%) or more of the

+ outstanding shares, or (iii) beneficial ownership of such entity.

+

+ "You" (or "Your") shall mean an individual or Legal Entity

+ exercising permissions granted by this License.

+

+ "Source" form shall mean the preferred form for making modifications,

+ including but not limited to software source code, documentation

+ source, and configuration files.

+

+ "Object" form shall mean any form resulting from mechanical

+ transformation or translation of a Source form, including but

+ not limited to compiled object code, generated documentation,

+ and conversions to other media types.

+

+ "Work" shall mean the work of authorship, whether in Source or

+ Object form, made available under the License, as indicated by a

+ copyright notice that is included in or attached to the work

+ (an example is provided in the Appendix below).

+

+ "Derivative Works" shall mean any work, whether in Source or Object

+ form, that is based on (or derived from) the Work and for which the

+ editorial revisions, annotations, elaborations, or other modifications

+ represent, as a whole, an original work of authorship. For the purposes

+ of this License, Derivative Works shall not include works that remain

+ separable from, or merely link (or bind by name) to the interfaces of,

+ the Work and Derivative Works thereof.

+

+ "Contribution" shall mean any work of authorship, including

+ the original version of the Work and any modifications or additions

+ to that Work or Derivative Works thereof, that is intentionally

+ submitted to Licensor for inclusion in the Work by the copyright owner

+ or by an individual or Legal Entity authorized to submit on behalf of

+ the copyright owner. For the purposes of this definition, "submitted"

+ means any form of electronic, verbal, or written communication sent

+ to the Licensor or its representatives, including but not limited to

+ communication on electronic mailing lists, source code control systems,

+ and issue tracking systems that are managed by, or on behalf of, the

+ Licensor for the purpose of discussing and improving the Work, but

+ excluding communication that is conspicuously marked or otherwise

+ designated in writing by the copyright owner as "Not a Contribution."

+

+ "Contributor" shall mean Licensor and any individual or Legal Entity

+ on behalf of whom a Contribution has been received by Licensor and

+ subsequently incorporated within the Work.

+

+ 2. Grant of Copyright License. Subject to the terms and conditions of

+ this License, each Contributor hereby grants to You a perpetual,

+ worldwide, non-exclusive, no-charge, royalty-free, irrevocable

+ copyright license to reproduce, prepare Derivative Works of,

+ publicly display, publicly perform, sublicense, and distribute the

+ Work and such Derivative Works in Source or Object form.

+

+ 3. Grant of Patent License. Subject to the terms and conditions of

+ this License, each Contributor hereby grants to You a perpetual,

+ worldwide, non-exclusive, no-charge, royalty-free, irrevocable

+ (except as stated in this section) patent license to make, have made,

+ use, offer to sell, sell, import, and otherwise transfer the Work,

+ where such license applies only to those patent claims licensable

+ by such Contributor that are necessarily infringed by their

+ Contribution(s) alone or by combination of their Contribution(s)

+ with the Work to which such Contribution(s) was submitted. If You

+ institute patent litigation against any entity (including a

+ cross-claim or counterclaim in a lawsuit) alleging that the Work

+ or a Contribution incorporated within the Work constitutes direct

+ or contributory patent infringement, then any patent licenses

+ granted to You under this License for that Work shall terminate

+ as of the date such litigation is filed.

+

+ 4. Redistribution. You may reproduce and distribute copies of the

+ Work or Derivative Works thereof in any medium, with or without

+ modifications, and in Source or Object form, provided that You

+ meet the following conditions:

+

+ (a) You must give any other recipients of the Work or

+ Derivative Works a copy of this License; and

+

+ (b) You must cause any modified files to carry prominent notices

+ stating that You changed the files; and

+

+ (c) You must retain, in the Source form of any Derivative Works

+ that You distribute, all copyright, patent, trademark, and

+ attribution notices from the Source form of the Work,

+ excluding those notices that do not pertain to any part of

+ the Derivative Works; and

+

+ (d) If the Work includes a "NOTICE" text file as part of its

+ distribution, then any Derivative Works that You distribute must

+ include a readable copy of the attribution notices contained

+ within such NOTICE file, excluding those notices that do not

+ pertain to any part of the Derivative Works, in at least one

+ of the following places: within a NOTICE text file distributed

+ as part of the Derivative Works; within the Source form or

+ documentation, if provided along with the Derivative Works; or,

+ within a display generated by the Derivative Works, if and

+ wherever such third-party notices normally appear. The contents

+ of the NOTICE file are for informational purposes only and

+ do not modify the License. You may add Your own attribution

+ notices within Derivative Works that You distribute, alongside

+ or as an addendum to the NOTICE text from the Work, provided

+ that such additional attribution notices cannot be construed

+ as modifying the License.

+

+ You may add Your own copyright statement to Your modifications and

+ may provide additional or different license terms and conditions

+ for use, reproduction, or distribution of Your modifications, or

+ for any such Derivative Works as a whole, provided Your use,

+ reproduction, and distribution of the Work otherwise complies with

+ the conditions stated in this License.

+

+ 5. Submission of Contributions. Unless You explicitly state otherwise,

+ any Contribution intentionally submitted for inclusion in the Work

+ by You to the Licensor shall be under the terms and conditions of

+ this License, without any additional terms or conditions.

+ Notwithstanding the above, nothing herein shall supersede or modify

+ the terms of any separate license agreement you may have executed

+ with Licensor regarding such Contributions.

+

+ 6. Trademarks. This License does not grant permission to use the trade

+ names, trademarks, service marks, or product names of the Licensor,

+ except as required for reasonable and customary use in describing the

+ origin of the Work and reproducing the content of the NOTICE file.

+

+ 7. Disclaimer of Warranty. Unless required by applicable law or

+ agreed to in writing, Licensor provides the Work (and each

+ Contributor provides its Contributions) on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

+ implied, including, without limitation, any warranties or conditions

+ of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

+ PARTICULAR PURPOSE. You are solely responsible for determining the

+ appropriateness of using or redistributing the Work and assume any

+ risks associated with Your exercise of permissions under this License.

+

+ 8. Limitation of Liability. In no event and under no legal theory,

+ whether in tort (including negligence), contract, or otherwise,

+ unless required by applicable law (such as deliberate and grossly

+ negligent acts) or agreed to in writing, shall any Contributor be

+ liable to You for damages, including any direct, indirect, special,

+ incidental, or consequential damages of any character arising as a

+ result of this License or out of the use or inability to use the

+ Work (including but not limited to damages for loss of goodwill,

+ work stoppage, computer failure or malfunction, or any and all

+ other commercial damages or losses), even if such Contributor

+ has been advised of the possibility of such damages.

+

+ 9. Accepting Warranty or Additional Liability. While redistributing

+ the Work or Derivative Works thereof, You may choose to offer,

+ and charge a fee for, acceptance of support, warranty, indemnity,

+ or other liability obligations and/or rights consistent with this

+ License. However, in accepting such obligations, You may act only

+ on Your own behalf and on Your sole responsibility, not on behalf

+ of any other Contributor, and only if You agree to indemnify,

+ defend, and hold each Contributor harmless for any liability

+ incurred by, or claims asserted against, such Contributor by reason

+ of your accepting any such warranty or additional liability.

+

+ END OF TERMS AND CONDITIONS

+

+ APPENDIX: How to apply the Apache License to your work.

+

+ To apply the Apache License to your work, attach the following

+ boilerplate notice, with the fields enclosed by brackets "{}"

+ replaced with your own identifying information. (Don't include

+ the brackets!) The text should be enclosed in the appropriate

+ comment syntax for the file format. We also recommend that a

+ file or class name and description of purpose be included on the

+ same "printed page" as the copyright notice for easier

+ identification within third-party archives.

+

+ Copyright {yyyy} {name of copyright owner}

+

+ Licensed under the Apache License, Version 2.0 (the "License");

+ you may not use this file except in compliance with the License.

+ You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+ Unless required by applicable law or agreed to in writing, software

+ distributed under the License is distributed on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ See the License for the specific language governing permissions and

+ limitations under the License.

+

diff --git a/phrasecount/README.md b/phrasecount/README.md

new file mode 100644

index 0000000..74a6509

--- /dev/null

+++ b/phrasecount/README.md

@@ -0,0 +1,164 @@

+# Phrase Count

+

+[Build Status](https://travis-ci.org/astralway/phrasecount)

+

+An example application that computes phrase counts for unique documents using Apache Fluo. Each

+unique document that is added causes phrase counts to be incremented. Unique documents have

+reference counts based on the number of locations that point to them. When a unique document is no

+longer referenced by any location, then the phrase counts will be decremented appropriately.

+

+After phrase counts are incremented, export transactions send phrase counts to an Accumulo table.

+The purpose of exporting data is to make it available for query. Percolator is not designed to

+support queries, because its transactions are designed for throughput and not responsiveness.

+

+This example uses the Collision Free Map and Export Queue from [Apache Fluo Recipes][11]. A

+Collision Free Map is used to calculate phrase counts. An Export Queue is used to update the

+external Accumulo table in a fault-tolerant manner. Before using Fluo Recipes, this example was

+quite complex. Switching to Fluo Recipes dramatically simplified this example.

+

+## Schema

+

+### Fluo Table Schema

+

+This example uses the following schema for the table used by Apache Fluo.

+

+Row | Column | Value | Purpose

+-------------|---------------|-------------------|---------------------------------------------------------------------

+uri:\<uri\> | doc:hash | \<hash\> | Contains the hash of the document found at the URI

+doc:\<hash\> | doc:content | \<document\> | The contents of the document

+doc:\<hash\> | doc:refCount | \<int\> | The number of URIs that reference this document

+doc:\<hash\> | index:check | empty | Setting this column triggers the observer that indexes the document

+doc:\<hash\> | index:status | INDEXED or empty | Tracks whether this document has been indexed

+

+Additionally, the two recipes used by the example store their data in the table

+under two row prefixes. Nothing else should be stored within these prefixes.

+The collision free map used to compute phrase counts stores data within the row

+prefix `pcm:`. The export queue stores data within the row prefix `aeq:`.

+

+### External Table Schema

+

+This example uses the following schema for the external Accumulo table.

+

+Row | Column | Value | Purpose

+-----------|-----------------|------------|---------------------------------------------------------------------

+\<phrase\> | stat:totalCount | \<count\> | For a given phrase, the value is the total number of times that phrase occurred in all documents.

+\<phrase\> | stat:docCount | \<count\> | For a given phrase, the value is the number of documents in which that phrase occurred.

+

+[PhraseCountTable][14] encapsulates all of the code for interacting with this

+external table.

+

+## Code Overview

+

+Documents are loaded into the Fluo table by [DocumentLoader][1] which is

+executed by [Load][2]. [DocumentLoader][1] handles reference counting of

+unique documents and may set a notification for [DocumentObserver][3].

+[DocumentObserver][3] increments or decrements global phrase counts by

+inserting `+1` or `-1` into a collision free map for each phrase in a document.

+[PhraseMap][4] contains the code called by the collision free map recipe. The

+code in [PhraseMap][4] does two things. First, it computes the phrase counts by

+summing the updates. Second, it places the newly computed phrase count on an

+export queue. [PhraseExporter][5] is called by the export queue recipe to

+generate mutations to update the external Accumulo table.

+

+All observers and recipes are configured by code in [Application][10]. All

+observers are run by the Fluo worker processes when notifications trigger them.

+

+## Building

+

+After cloning this repository, build with the following command:

+

+```

+mvn package

+```

+

+## Running via Maven

+

+If you do not have Accumulo, Hadoop, Zookeeper, and Fluo set up, then you can

+start a MiniFluo instance with the [mini.sh](bin/mini.sh) script. This script

+will run [Mini.java][12] using Maven. The command will create a

+`fluo.properties` file that can be used by the other commands in this section.

+

+```bash

+./bin/mini.sh /tmp/mac fluo.properties

+```

+

+The mini command runs in the background and writes its output to `mini.log`.

+Run `tail -f mini.log` and wait for the message `Wrote : fluo.properties`;

+MiniFluo is then ready to use.

+

+This command will automatically configure [PhraseExporter][5] to export phrases

+to an Accumulo table named `pcExport`.

+

+The scripts set `-Dexec.classpathScope=test` because it adds the test

+[log4j.properties][7] file to the classpath.

+

+### Adding documents

+

+The [load.sh](bin/load.sh) script runs [Load.java][2], which recursively scans

+the directory `$TXT_DIR` for .txt files to add.

+

+```bash

+./bin/load.sh fluo.properties $TXT_DIR

+```

+

+### Printing phrases

+

+After documents are added, [print.sh](bin/print.sh) runs [Print.java][13],

+which prints out phrase counts. Try modifying a document you added and running

+the load command again; you should eventually see the phrase counts change.

+

+```bash

+./bin/print.sh fluo.properties pcExport

+```

+

+The command will print out the number of unique documents and the number

+of processed documents. If the number of processed documents is less than the

+number of unique documents, then there is still work to do. After the load

+command runs, the documents will have been added or updated. However, the

+phrase counts will not update until the observers run in the background.

+

+### Killing mini

+

+Make sure to kill mini when finished testing. The following command will kill it.

+

+```bash

+pkill -f phrasecount.cmd.Mini

+```

+

+## Deploying example

+

+The following script can run this example on a cluster using the Fluo

+distribution and serves as executable documentation for deployment. The

+previous Maven commands, which use the exec plugin, are convenient in a development

+environment; the following script shows how things would work in a

+production environment.

+

+ * [run.sh](bin/run.sh) : Runs this example with YARN using the Fluo tar

+ distribution. Running in this way requires setting up Hadoop, Zookeeper,

+ and Accumulo instances separately. The [Uno][8] and [Muchos][9]

+ projects were created to ease setting up these external dependencies.

+

+## Generating data

+

+Need some data? Use `elinks` to generate text files from web pages.

+

+```

+mkdir data

+elinks -dump 1 -no-numbering -no-references http://accumulo.apache.org > data/accumulo.txt

+elinks -dump 1 -no-numbering -no-references http://hadoop.apache.org > data/hadoop.txt

+elinks -dump 1 -no-numbering -no-references http://zookeeper.apache.org > data/zookeeper.txt

+```

+

+[1]: src/main/java/phrasecount/DocumentLoader.java

+[2]: src/main/java/phrasecount/cmd/Load.java

+[3]: src/main/java/phrasecount/DocumentObserver.java

+[4]: src/main/java/phrasecount/PhraseMap.java

+[5]: src/main/java/phrasecount/PhraseExporter.java

+[7]: src/test/resources/log4j.properties

+[8]: https://github.com/astralway/uno

+[9]: https://github.com/astralway/muchos

+[10]: src/main/java/phrasecount/Application.java

+[11]: https://github.com/apache/fluo-recipes

+[12]: src/main/java/phrasecount/cmd/Mini.java

+[13]: src/main/java/phrasecount/cmd/Print.java

+[14]: src/main/java/phrasecount/query/PhraseCountTable.java
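The Code Overview in the phrasecount README says DocumentObserver adds `+1` or `-1` updates for each phrase and PhraseMap sums those updates before placing the result on the export queue. Below is a minimal sketch of that summing step in plain Java. It assumes that each update carries both a document count and an occurrence count, which would match the `stat:docCount` and `stat:totalCount` columns of the external table. `PhraseCountSketch`, `updatesFor`, `combine`, and the nested `Counts` class are hypothetical names. This code does not implement the Fluo Recipes combiner interface, and the real `phrasecount.pojos.Counts` may differ.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;

/**
 * Hypothetical sketch of combining per-phrase updates. Plain Java only; this is not
 * the Fluo Recipes combiner API and not the phrasecount PhraseMap code.
 */
public class PhraseCountSketch {

  /** Assumed shape: documents containing a phrase, and total occurrences of it. */
  static final class Counts {
    final long docCount;
    final long totalCount;

    Counts(long docCount, long totalCount) {
      this.docCount = docCount;
      this.totalCount = totalCount;
    }
  }

  /** One update per phrase: sign is +1 when a document is added, -1 when it is removed. */
  static Map<String, Counts> updatesFor(Map<String, Integer> phraseOccurrences, int sign) {
    Map<String, Counts> updates = new HashMap<>();
    phraseOccurrences.forEach(
        (phrase, n) -> updates.put(phrase, new Counts(sign, (long) sign * n)));
    return updates;
  }

  /** Sums queued updates into the current value; empty means the phrase no longer occurs. */
  static Optional<Counts> combine(Optional<Counts> current, List<Counts> updates) {
    long docs = current.map(c -> c.docCount).orElse(0L);
    long total = current.map(c -> c.totalCount).orElse(0L);
    for (Counts u : updates) {
      docs += u.docCount;
      total += u.totalCount;
    }
    return docs == 0 && total == 0 ? Optional.empty() : Optional.of(new Counts(docs, total));
  }

  public static void main(String[] args) {
    Map<String, Integer> doc = new HashMap<>();
    doc.put("apache fluo", 3); // one document containing the phrase three times
    Counts add = updatesFor(doc, +1).get("apache fluo");
    Counts remove = updatesFor(doc, -1).get("apache fluo");
    // Two unique documents with identical content are added.
    Optional<Counts> afterAdd = combine(Optional.empty(), Arrays.asList(add, add));
    System.out.println(afterAdd.get().docCount + " " + afterAdd.get().totalCount); // 2 6
    // Both documents stop being referenced, so the phrase disappears.
    System.out.println(combine(afterAdd, Arrays.asList(remove, remove)).isPresent()); // false
  }
}
```

Returning an empty result when both sums reach zero is one way to drop phrases that no longer occur in any document. This is an assumption of the sketch and not documented behavior of PhraseMap.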

diff --git a/phrasecount/bin/copy-jars.sh b/phrasecount/bin/copy-jars.sh

new file mode 100755

index 0000000..a92ac5f

--- /dev/null

+++ b/phrasecount/bin/copy-jars.sh

@@ -0,0 +1,24 @@

+#!/bin/bash

+

+#This script will copy the phrase count jar and its dependencies to the Fluo

+#application lib dir

+

+

+if [ "$#" -ne 2 ]; then

+ echo "Usage : $0 <FLUO_HOME> <PHRASECOUNT_HOME>"

+ exit 1

+fi

+

+FLUO_HOME=$1

+PC_HOME=$2

+

+PC_JAR=$PC_HOME/target/phrasecount-0.0.1-SNAPSHOT.jar

+

+#build and copy phrasecount jar

+(cd $PC_HOME; mvn package -DskipTests)

+

+FLUO_APP_LIB=$FLUO_HOME/apps/phrasecount/lib/

+

+cp $PC_JAR $FLUO_APP_LIB

+(cd $PC_HOME; mvn dependency:copy-dependencies -DoutputDirectory=$FLUO_APP_LIB)

+

diff --git a/phrasecount/bin/load.sh b/phrasecount/bin/load.sh

new file mode 100755

index 0000000..4c9a904

--- /dev/null

+++ b/phrasecount/bin/load.sh

@@ -0,0 +1,3 @@

+#!/bin/bash

+

+mvn exec:java -Dexec.mainClass=phrasecount.cmd.Load -Dexec.args="${*:1}" -Dexec.classpathScope=test
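`load.sh` runs Load, which uses DocumentLoader to maintain the reference counts described in the phrasecount README. Below is a minimal in-memory sketch of that bookkeeping. It uses `HashMap`s in place of the `uri:<uri> doc:hash` and `doc:<hash> doc:refCount` cells and does not run inside a Fluo transaction. `RefCountSketch`, `load`, and `refCount` are hypothetical names. The sketch reports a document hash when its reference count rises from 0 to 1 or falls to 0. According to the README, these are the two points at which phrase counts are incremented or decremented.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/**
 * Hypothetical in-memory sketch of URI-to-document reference counting. It does not use
 * the Fluo API; the real DocumentLoader does the equivalent work in a Fluo transaction.
 */
public class RefCountSketch {
  private final Map<String, String> uriToHash = new HashMap<>(); // stands in for uri:<uri> doc:hash
  private final Map<String, Integer> refCounts = new HashMap<>(); // stands in for doc:<hash> doc:refCount

  /** Points uri at the document with the given hash; returns hashes whose count rose to 1 or fell to 0. */
  public List<String> load(String uri, String hash) {
    List<String> changed = new ArrayList<>();
    String oldHash = uriToHash.put(uri, hash);
    if (hash.equals(oldHash)) {
      return changed; // same content as before, nothing to do
    }
    if (oldHash != null && adjust(oldHash, -1) == 0) {
      changed.add(oldHash); // no longer referenced: its phrase counts should be decremented
    }
    if (adjust(hash, +1) == 1) {
      changed.add(hash); // newly referenced: its phrase counts should be incremented
    }
    return changed;
  }

  /** Applies delta to a document's reference count, removing the entry when it reaches 0. */
  private int adjust(String hash, int delta) {
    int count = refCounts.getOrDefault(hash, 0) + delta;
    if (count == 0) {
      refCounts.remove(hash);
    } else {
      refCounts.put(hash, count);
    }
    return count;
  }

  public int refCount(String hash) {
    return refCounts.getOrDefault(hash, 0);
  }

  public static void main(String[] args) {
    RefCountSketch s = new RefCountSketch();
    System.out.println(s.load("http://a", "h1")); // [h1]
    System.out.println(s.load("http://b", "h1")); // []
    System.out.println(s.load("http://a", "h2")); // [h2]
    System.out.println(s.load("http://b", "h2")); // [h1]
  }
}
```

Loading the same URI with unchanged content is a no-op. This lets Load be rerun over a directory without disturbing the counts.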

diff --git a/phrasecount/bin/mini.sh b/phrasecount/bin/mini.sh

new file mode 100755

index 0000000..b8b60a4

--- /dev/null

+++ b/phrasecount/bin/mini.sh

@@ -0,0 +1,4 @@

+#!/bin/bash

+

+mvn exec:java -Dexec.mainClass=phrasecount.cmd.Mini -Dexec.args="${*:1}" -Dexec.classpathScope=test &>mini.log &

+echo "Started Mini in background. Writing output to mini.log."

diff --git a/phrasecount/bin/print.sh b/phrasecount/bin/print.sh

new file mode 100755

index 0000000..198fad9

--- /dev/null

+++ b/phrasecount/bin/print.sh

@@ -0,0 +1,4 @@

+#!/bin/bash

+

+mvn exec:java -Dexec.mainClass=phrasecount.cmd.Print -Dexec.args="${*:1}" -Dexec.classpathScope=test

+

diff --git a/phrasecount/bin/run.sh b/phrasecount/bin/run.sh

new file mode 100755

index 0000000..8f6e46a

--- /dev/null

+++ b/phrasecount/bin/run.sh

@@ -0,0 +1,69 @@

+#!/bin/bash

+

+BIN_DIR=$( cd "$( dirname "${BASH_SOURCE[0]}" )" && pwd )

+PC_HOME=$( cd "$( dirname "$BIN_DIR" )" && pwd )

+

+# stop if any command fails

+set -e

+

+if [ "$#" -ne 1 ]; then

+ echo "Usage : $0 <TXT FILES DIR>"

+ exit 1

+fi

+

+#set the following to a directory containing text files

+TXT_DIR=$1

+if [ ! -d "$TXT_DIR" ]; then

+ echo "Document directory $TXT_DIR does not exist"

+ exit 1

+fi

+

+#ensure $FLUO_HOME is set

+if [ -z "$FLUO_HOME" ]; then

+ echo '$FLUO_HOME must be set!'

+ exit 1

+fi

+

+#Set application name. $FLUO_APP_NAME is set by fluo-dev and zetten

+APP=${FLUO_APP_NAME:-phrasecount}

+

+#derived variables

+APP_PROPS=$FLUO_HOME/apps/$APP/conf/fluo.properties

+

+if [ ! -f $FLUO_HOME/conf/fluo.properties ]; then

+ echo "Fluo is not configured, exiting."

+ exit 1

+fi

+

+#remove application if it exists

+if [ -d $FLUO_HOME/apps/$APP ]; then

+ echo "Restarting '$APP' application. Errors may be printed if it's not running..."

+ $FLUO_HOME/bin/fluo kill $APP || true

+ rm -rf $FLUO_HOME/apps/$APP

+fi

+

+#create new application dir

+$FLUO_HOME/bin/fluo new $APP

+

+#copy phrasecount jars to Fluo application lib dir

+$PC_HOME/bin/copy-jars.sh $FLUO_HOME $PC_HOME

+

+#Create export table and output Fluo configuration

+$FLUO_HOME/bin/fluo exec $APP phrasecount.cmd.Setup $APP_PROPS pcExport >> $APP_PROPS

+

+$FLUO_HOME/bin/fluo init $APP -f

+$FLUO_HOME/bin/fluo exec $APP org.apache.fluo.recipes.accumulo.cmds.OptimizeTable

+$FLUO_HOME/bin/fluo start $APP

+$FLUO_HOME/bin/fluo info $APP

+

+#Load data

+$FLUO_HOME/bin/fluo exec $APP phrasecount.cmd.Load $APP_PROPS $TXT_DIR

+

+#wait for all notifications to be processed.

+$FLUO_HOME/bin/fluo wait $APP

+

+#print phrase counts

+$FLUO_HOME/bin/fluo exec $APP phrasecount.cmd.Print $APP_PROPS pcExport

+

+$FLUO_HOME/bin/fluo stop $APP

+

diff --git a/phrasecount/pom.xml b/phrasecount/pom.xml

new file mode 100644

index 0000000..bb9afde

--- /dev/null

+++ b/phrasecount/pom.xml

@@ -0,0 +1,98 @@

+<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

+ xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

+ <modelVersion>4.0.0</modelVersion>

+

+ <groupId>io.github.astralway</groupId>

+ <artifactId>phrasecount</artifactId>

+ <version>0.0.1-SNAPSHOT</version>

+ <packaging>jar</packaging>

+

+ <name>phrasecount</name>

+ <url>https://github.com/astralway/phrasecount</url>

+

+ <properties>

+ <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

+ <accumulo.version>1.7.2</accumulo.version>

+ <fluo.version>1.0.0-incubating</fluo.version>

+ <fluo-recipes.version>1.0.0-incubating</fluo-recipes.version>

+ </properties>

+

+ <build>

+ <plugins>

+ <plugin>

+ <artifactId>maven-compiler-plugin</artifactId>

+ <version>3.1</version>

+ <configuration>

+ <source>1.8</source>

+ <target>1.8</target>

+ <optimize>true</optimize>

+ <encoding>UTF-8</encoding>

+ </configuration>

+ </plugin>

+ <plugin>

+ <artifactId>maven-dependency-plugin</artifactId>

+ <version>2.10</version>

+ <configuration>

+ <!--define the specific dependencies to copy into the Fluo application dir-->

+ <includeArtifactIds>fluo-recipes-core,fluo-recipes-accumulo,fluo-recipes-kryo,kryo,minlog,reflectasm,objenesis</includeArtifactIds>

+ </configuration>

+ </plugin>

+ </plugins>

+ </build>

+

+ <dependencies>

+ <dependency>

+ <groupId>junit</groupId>

+ <artifactId>junit</artifactId>

+ <version>4.11</version>

+ <scope>test</scope>

+ </dependency>

+ <dependency>

+ <groupId>com.beust</groupId>

+ <artifactId>jcommander</artifactId>

+ <version>1.32</version>

+ </dependency>

+ <dependency>

+ <groupId>org.apache.fluo</groupId>

+ <artifactId>fluo-api</artifactId>

+ <version>${fluo.version}</version>

+ </dependency>

+ <dependency>

+ <groupId>org.apache.fluo</groupId>

+ <artifactId>fluo-core</artifactId>

+ <version>${fluo.version}</version>

+ <scope>runtime</scope>

+ </dependency>

+ <dependency>

+ <groupId>org.apache.fluo</groupId>

+ <artifactId>fluo-recipes-core</artifactId>

+ <version>${fluo-recipes.version}</version>

+ </dependency>

+ <dependency>

+ <groupId>org.apache.fluo</groupId>

+ <artifactId>fluo-recipes-accumulo</artifactId>

+ <version>${fluo-recipes.version}</version>

+ </dependency>

+ <dependency>

+ <groupId>org.apache.fluo</groupId>

+ <artifactId>fluo-recipes-kryo</artifactId>

+ <version>${fluo-recipes.version}</version>

+ </dependency>

+ <dependency>

+ <groupId>org.apache.accumulo</groupId>

+ <artifactId>accumulo-core</artifactId>

+ <version>${accumulo.version}</version>

+ </dependency>

+ <dependency>

+ <groupId>org.apache.fluo</groupId>

+ <artifactId>fluo-mini</artifactId>

+ <version>${fluo.version}</version>

+ <scope>test</scope>

+ </dependency>

+ <dependency>

+ <groupId>org.apache.accumulo</groupId>

+ <artifactId>accumulo-minicluster</artifactId>

+ <version>${accumulo.version}</version>

+ </dependency>

+ </dependencies>

+</project>

diff --git a/phrasecount/src/main/java/phrasecount/Application.java b/phrasecount/src/main/java/phrasecount/Application.java

new file mode 100644

index 0000000..30d7c3a

--- /dev/null

+++ b/phrasecount/src/main/java/phrasecount/Application.java

@@ -0,0 +1,71 @@

+package phrasecount;

+

+import org.apache.fluo.api.config.FluoConfiguration;

+import org.apache.fluo.api.config.ObserverSpecification;

+import org.apache.fluo.recipes.accumulo.export.AccumuloExporter;

+import org.apache.fluo.recipes.core.export.ExportQueue;

+import org.apache.fluo.recipes.core.map.CollisionFreeMap;

+import org.apache.fluo.recipes.kryo.KryoSimplerSerializer;

+import phrasecount.pojos.Counts;

+import phrasecount.pojos.PcKryoFactory;

+

+import static phrasecount.Constants.EXPORT_QUEUE_ID;

+import static phrasecount.Constants.PCM_ID;

+

+public class Application {

+

+ public static class Options {

+ public Options(int pcmBuckets, int eqBuckets, String instance, String zooKeepers, String user,

+ String password, String eTable) {

+ this.phraseCountMapBuckets = pcmBuckets;

+ this.exportQueueBuckets = eqBuckets;

+ this.instance = instance;

+ this.zookeepers = zooKeepers;

+ this.user = user;

+ this.password = password;

+ this.exportTable = eTable;

+

+ }

+

+ public int phraseCountMapBuckets;

+ public int exportQueueBuckets;

+

+ public String instance;

+ public String zookeepers;

+ public String user;

+ public String password;

+ public String exportTable;

+ }

+

+ /**

+ * Sets Fluo configuration needed to run the phrase count application

+ *

+ * @param fluoConfig FluoConfiguration

+ * @param opts Options

+ */

+ public static void configure(FluoConfiguration fluoConfig, Options opts) {

+ // set up an observer that watches the reference counts of documents. When a document is

+ // referenced or dereferenced, it will add or subtract phrase counts from a collision free map.

+ fluoConfig.addObserver(new ObserverSpecification(DocumentObserver.class.getName()));

+

+ // configure which KryoFactory recipes should use

+ KryoSimplerSerializer.setKryoFactory(fluoConfig, PcKryoFactory.class);

+

+ // set up a collision free map to combine phrase counts

+ CollisionFreeMap.configure(fluoConfig,

+ new CollisionFreeMap.Options(PCM_ID, PhraseMap.PcmCombiner.class,

+ PhraseMap.PcmUpdateObserver.class, String.class, Counts.class,

+ opts.phraseCountMapBuckets));

+

+ AccumuloExporter.Configuration accumuloConfig =

+ new AccumuloExporter.Configuration(opts.instance, opts.zookeepers, opts.user, opts.password,

+ opts.exportTable);

+

+ // set up an Accumulo export queue to send phrase count updates to an Accumulo table

+ ExportQueue.Options exportQueueOpts =

+ new ExportQueue.Options(EXPORT_QUEUE_ID, PhraseExporter.class.getName(),

+ String.class.getName(), Counts.class.getName(),

+ opts.exportQueueBuckets).setExporterConfiguration(accumuloConfig);

+ ExportQueue.configure(fluoConfig, exportQueueOpts);

+ }

+}

diff --git a/phrasecount/src/main/java/phrasecount/Constants.java b/phrasecount/src/main/java/phrasecount/Constants.java

new file mode 100644

index 0000000..1f73bee

--- /dev/null

+++ b/phrasecount/src/main/java/phrasecount/Constants.java

@@ -0,0 +1,21 @@

+package phrasecount;

+

+import org.apache.fluo.api.data.Column;

+import org.apache.fluo.recipes.core.types.StringEncoder;

+import org.apache.fluo.recipes.core.types.TypeLayer;

+

+public class Constants {

+

+ // set the encoder to use in one place

+ public static final TypeLayer TYPEL = new TypeLayer(new StringEncoder());

+

+ public static final Column INDEX_CHECK_COL = TYPEL.bc().fam("index").qual("check").vis();

+ public static final Column INDEX_STATUS_COL = TYPEL.bc().fam("index").qual("status").vis();

+ public static final Column DOC_CONTENT_COL = TYPEL.bc().fam("doc").qual("content").vis();

+ public static final Column DOC_HASH_COL = TYPEL.bc().fam("doc").qual("hash").vis();

+ public static final Column DOC_REF_COUNT_COL = TYPEL.bc().fam("doc").qual("refCount").vis();

+

+ public static final String EXPORT_QUEUE_ID = "aeq";

+ //phrase count map id

+ public static final String PCM_ID = "pcm";

+}

diff --git a/phrasecount/src/main/java/phrasecount/DocumentLoader.java b/phrasecount/src/main/java/phrasecount/DocumentLoader.java

new file mode 100644

index 0000000..8384b35

--- /dev/null

+++ b/phrasecount/src/main/java/phrasecount/DocumentLoader.java

@@ -0,0 +1,73 @@

+package phrasecount;

+

+import org.apache.fluo.api.client.Loader;

+import org.apache.fluo.api.client.TransactionBase;

+import org.apache.fluo.recipes.core.types.TypedTransactionBase;

+import phrasecount.pojos.Document;

+

+import static phrasecount.Constants.DOC_CONTENT_COL;

+import static phrasecount.Constants.DOC_HASH_COL;

+import static phrasecount.Constants.DOC_REF_COUNT_COL;

+import static phrasecount.Constants.INDEX_CHECK_COL;

+import static phrasecount.Constants.TYPEL;

+

+/**

+ * Executes document load transactions which dedupe and reference count documents. If needed, the

+ * observer that updates phrase counts is triggered.

+ */

+public class DocumentLoader implements Loader {

+

+ private Document document;

+

+ public DocumentLoader(Document doc) {

+ this.document = doc;

+ }

+

+ @Override

+ public void load(TransactionBase tx, Context context) throws Exception {

+

+ // TODO Need a strategy for dealing w/ large documents. If a worker processes many large

+ // documents concurrently, it could cause memory exhaustion. Could break up large documents

+ // into pieces. However, it is not clear the example should be complicated with this.

+

+ TypedTransactionBase ttx = TYPEL.wrap(tx);

+ String storedHash = ttx.get().row("uri:" + document.getURI()).col(DOC_HASH_COL).toString();

+

+ if (storedHash == null || !storedHash.equals(document.getHash())) {

+

+ ttx.mutate().row("uri:" + document.getURI()).col(DOC_HASH_COL).set(document.getHash());

+

+ Integer refCount =

+ ttx.get().row("doc:" + document.getHash()).col(DOC_REF_COUNT_COL).toInteger();

+ if (refCount == null) {

+ // this document was never seen before

+ addNewDocument(ttx, document);

+ } else {

+ setRefCount(ttx, document.getHash(), refCount, refCount + 1);

+ }

+

+ if (storedHash != null) {

+ decrementRefCount(ttx, storedHash);

+ }

+ }

+ }

+

+ private void setRefCount(TypedTransactionBase tx, String hash, Integer prevRc, int rc) {

+ tx.mutate().row("doc:" + hash).col(DOC_REF_COUNT_COL).set(rc);

+

+ if (rc == 0 || (rc == 1 && (prevRc == null || prevRc == 0))) {

+ // setting this triggers DocumentObserver

+ tx.mutate().row("doc:" + hash).col(INDEX_CHECK_COL).set();

+ }

+ }

+

+ private void decrementRefCount(TypedTransactionBase tx, String hash) {

+ int rc = tx.get().row("doc:" + hash).col(DOC_REF_COUNT_COL).toInteger();

+ setRefCount(tx, hash, rc, rc - 1); // prevRc must be this document's own previous count

+ }

+

+ private void addNewDocument(TypedTransactionBase tx, Document doc) {

+ setRefCount(tx, doc.getHash(), null, 1);

+ tx.mutate().row("doc:" + doc.getHash()).col(DOC_CONTENT_COL).set(doc.getContent());

+ }

+}
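
The dedupe and reference-count rules in DocumentLoader can be checked without a Fluo cluster. The sketch below is a hypothetical in-memory model, not code from this application: `RefCountModel`, `uriToHash`, `refCounts`, and `triggered` are illustrative stand-ins for the `uri:` rows, the `doc:` rows, and the INDEX_CHECK_COL notifications. It assumes the old document is decremented using its own previous count, so only the 0 and null/0 -> 1 transitions notify the observer.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Hypothetical, in-memory model of the DocumentLoader rules. It is NOT Fluo code:
// uriToHash stands in for the "uri:<uri>" rows (DOC_HASH_COL), refCounts for the
// "doc:<hash>" rows (DOC_REF_COUNT_COL), and `triggered` records every hash whose
// INDEX_CHECK_COL would be set, i.e. where DocumentObserver would be notified.
class RefCountModel {
  final Map<String, String> uriToHash = new HashMap<>();
  final Map<String, Integer> refCounts = new HashMap<>();
  final Set<String> triggered = new HashSet<>();

  void load(String uri, String hash) {
    String storedHash = uriToHash.get(uri);
    if (hash.equals(storedHash)) {
      return; // this URI already points at identical content
    }
    uriToHash.put(uri, hash);

    // reference the new content (a null previous count means "never seen before")
    Integer prevRc = refCounts.get(hash);
    setRefCount(hash, prevRc, prevRc == null ? 1 : prevRc + 1);

    if (storedHash != null) {
      // dereference the old content, passing that document's own previous count
      int oldRc = refCounts.get(storedHash);
      setRefCount(storedHash, oldRc, oldRc - 1);
    }
  }

  private void setRefCount(String hash, Integer prevRc, int rc) {
    refCounts.put(hash, rc);
    // only "drops to 0" (unindex) and "null/0 -> 1" (index) change what the observer must do
    if (rc == 0 || (rc == 1 && (prevRc == null || prevRc == 0))) {
      triggered.add(hash);
    }
  }
}
```

For example, if two URIs share content `h1` and one of them changes to new content `h2`, the model notifies for `h2` only; the 2 -> 1 drop on `h1` is silent, and `h1` is notified once its count reaches 0.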

diff --git a/phrasecount/src/main/java/phrasecount/DocumentObserver.java b/phrasecount/src/main/java/phrasecount/DocumentObserver.java

new file mode 100644

index 0000000..1c50bfc

--- /dev/null

+++ b/phrasecount/src/main/java/phrasecount/DocumentObserver.java

@@ -0,0 +1,102 @@

+package phrasecount;

+

+import java.util.HashMap;

+import java.util.Map;

+import java.util.Map.Entry;

+

+import org.apache.fluo.api.client.TransactionBase;

+import org.apache.fluo.api.data.Bytes;

+import org.apache.fluo.api.data.Column;

+import org.apache.fluo.api.observer.AbstractObserver;

+import org.apache.fluo.recipes.core.map.CollisionFreeMap;

+import org.apache.fluo.recipes.core.types.TypedTransactionBase;

+import phrasecount.pojos.Counts;

+import phrasecount.pojos.Document;

+

+import static phrasecount.Constants.DOC_CONTENT_COL;

+import static phrasecount.Constants.DOC_REF_COUNT_COL;

+import static phrasecount.Constants.INDEX_CHECK_COL;

+import static phrasecount.Constants.INDEX_STATUS_COL;

+import static phrasecount.Constants.PCM_ID;

+import static phrasecount.Constants.TYPEL;

+

+/**

+ * An Observer that updates phrase counts when a document is added or removed.

+ */

+public class DocumentObserver extends AbstractObserver {

+

+ private CollisionFreeMap<String, Counts> pcMap;

+

+ private enum IndexStatus {

+ INDEXED, UNINDEXED

+ }

+

+ @Override

+ public void init(Context context) throws Exception {

+ pcMap = CollisionFreeMap.getInstance(PCM_ID, context.getAppConfiguration());

+ }

+

+ @Override

+ public void process(TransactionBase tx, Bytes row, Column col) throws Exception {

+

+ TypedTransactionBase ttx = TYPEL.wrap(tx);

+

+ IndexStatus status = getStatus(ttx, row);

+ int refCount = ttx.get().row(row).col(DOC_REF_COUNT_COL).toInteger(0);

+

+ if (status == IndexStatus.UNINDEXED && refCount > 0) {

+ updatePhraseCounts(ttx, row, 1);

+ ttx.mutate().row(row).col(INDEX_STATUS_COL).set(IndexStatus.INDEXED.name());

+ } else if (status == IndexStatus.INDEXED && refCount == 0) {

+ updatePhraseCounts(ttx, row, -1);

+ deleteDocument(ttx, row);

+ }

+

+ // TODO modifying the trigger is currently broken, enable more than one observer to commit for a

+ // notification

+ // tx.delete(row, col);

+

+ }

+

+ @Override

+ public ObservedColumn getObservedColumn() {

+ return new ObservedColumn(INDEX_CHECK_COL, NotificationType.STRONG);

+ }

+

+ private void deleteDocument(TypedTransactionBase tx, Bytes row) {

+ // TODO it would probably be useful to have a deleteRow method on Transaction... this method

+ // could start off w/ a simple implementation and later be

+ // optimized... or could have a delete range option

+

+ // TODO this is brittle, this code assumes it knows all possible columns

+ tx.delete(row, DOC_CONTENT_COL);

+ tx.delete(row, DOC_REF_COUNT_COL);

+ tx.delete(row, INDEX_STATUS_COL);

+ }

+

+ private void updatePhraseCounts(TypedTransactionBase ttx, Bytes row, int multiplier) {

+ String content = ttx.get().row(row).col(Constants.DOC_CONTENT_COL).toString();

+

+ // this makes the assumption that the implementation of getPhrases is invariant. This is

+ // probably a bad assumption. A possible way to make this more robust

+ // is to store the output of getPhrases when indexing and use the stored output when unindexing.

+ // Alternatively, could store the version of Document used for

+ // indexing.

+ Map<String, Integer> phrases = new Document(null, content).getPhrases();

+ Map<String, Counts> updates = new HashMap<>(phrases.size());

+ for (Entry<String, Integer> entry : phrases.entrySet()) {

+ updates.put(entry.getKey(), new Counts(multiplier, entry.getValue() * multiplier));

+ }

+

+ pcMap.update(ttx, updates);

+ }

+

+ private IndexStatus getStatus(TypedTransactionBase tx, Bytes row) {

+ String status = tx.get().row(row).col(INDEX_STATUS_COL).toString();

+

+ if (status == null)

+ return IndexStatus.UNINDEXED;

+

+ return IndexStatus.valueOf(status);

+ }

+}
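
DocumentObserver only touches the phrase counts on two transitions: UNINDEXED with a positive reference count (add the document's phrases once) and INDEXED with a zero reference count (subtract them once). Every other combination is a no-op, which is what makes extra notifications harmless. The following is a hypothetical in-memory sketch of that state machine; `IndexStatusModel`, its `indexed` flag (standing in for INDEX_STATUS_COL), and the `long[]` pair of {docPhraseCount, totalPhraseCount} are illustrative assumptions, not Fluo API.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical model of DocumentObserver.process(). `indexed` stands in for
// INDEX_STATUS_COL and `counts` for the phrase count map; each value is
// {docPhraseCount, totalPhraseCount}. Nothing here is Fluo API.
class IndexStatusModel {
  final Map<String, long[]> counts = new HashMap<>();
  boolean indexed = false;

  void process(Map<String, Integer> phrases, int refCount) {
    if (!indexed && refCount > 0) {
      update(phrases, 1); // first reference: add this document's phrases
      indexed = true;
    } else if (indexed && refCount == 0) {
      update(phrases, -1); // last reference gone: subtract them again
      indexed = false; // the real observer deletes the document's columns instead
    }
    // every other combination is a no-op, so extra notifications cannot double count
  }

  private void update(Map<String, Integer> phrases, int multiplier) {
    for (Map.Entry<String, Integer> e : phrases.entrySet()) {
      long[] c = counts.computeIfAbsent(e.getKey(), k -> new long[2]);
      c[0] += multiplier; // number of documents containing the phrase
      c[1] += (long) e.getValue() * multiplier; // occurrences across all documents
    }
  }
}
```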

diff --git a/phrasecount/src/main/java/phrasecount/PhraseExporter.java b/phrasecount/src/main/java/phrasecount/PhraseExporter.java

new file mode 100644

index 0000000..5aec44a

--- /dev/null

+++ b/phrasecount/src/main/java/phrasecount/PhraseExporter.java

@@ -0,0 +1,24 @@

+package phrasecount;

+

+import java.util.function.Consumer;

+

+import org.apache.accumulo.core.data.Mutation;

+import org.apache.fluo.recipes.accumulo.export.AccumuloExporter;

+import org.apache.fluo.recipes.core.export.SequencedExport;

+import phrasecount.pojos.Counts;

+import phrasecount.query.PhraseCountTable;

+

+/**

+ * Export code that converts {@link Counts} objects from the export queue to Mutations that are

+ * written to Accumulo.

+ */

+public class PhraseExporter extends AccumuloExporter<String, Counts> {

+

+ @Override

+ protected void translate(SequencedExport<String, Counts> export, Consumer<Mutation> consumer) {

+ String phrase = export.getKey();

+ long seq = export.getSequence();

+ Counts counts = export.getValue();

+ consumer.accept(PhraseCountTable.createMutation(phrase, seq, counts));

+ }

+}

diff --git a/phrasecount/src/main/java/phrasecount/PhraseMap.java b/phrasecount/src/main/java/phrasecount/PhraseMap.java

new file mode 100644

index 0000000..01c3bfb

--- /dev/null

+++ b/phrasecount/src/main/java/phrasecount/PhraseMap.java

@@ -0,0 +1,63 @@

+package phrasecount;

+

+import java.util.Iterator;

+import java.util.Optional;

+

+import com.google.common.collect.Iterators;

+import org.apache.fluo.api.client.TransactionBase;

+import org.apache.fluo.api.observer.Observer.Context;

+import org.apache.fluo.recipes.core.export.Export;

+import org.apache.fluo.recipes.core.export.ExportQueue;

+import org.apache.fluo.recipes.core.map.CollisionFreeMap;

+import org.apache.fluo.recipes.core.map.Combiner;

+import org.apache.fluo.recipes.core.map.Update;

+import org.apache.fluo.recipes.core.map.UpdateObserver;

+import phrasecount.pojos.Counts;

+

+import static phrasecount.Constants.EXPORT_QUEUE_ID;

+

+/**

+ * This class contains all of the code related to the {@link CollisionFreeMap} that keeps track of

+ * phrase counts.

+ */

+public class PhraseMap {

+

+ /**

+ * A combiner for the {@link CollisionFreeMap} that stores phrase counts. The

+ * {@link CollisionFreeMap} calls this combiner when it lazily updates the counts for a phrase.

+ */

+ public static class PcmCombiner implements Combiner<String, Counts> {

+

+ @Override

+ public Optional<Counts> combine(String key, Iterator<Counts> updates) {

+ Counts sum = new Counts(0, 0);

+ while (updates.hasNext()) {

+ sum = sum.add(updates.next());

+ }

+ return Optional.of(sum);

+ }

+ }

+

+ /**

+ * This class is notified when the {@link CollisionFreeMap} used to store phrase counts updates a

+ * phrase count. Updates are placed on an Accumulo export queue to be exported to the table storing

+ * phrase counts for query.

+ */

+ public static class PcmUpdateObserver extends UpdateObserver<String, Counts> {

+

+ private ExportQueue<String, Counts> pcEq;

+

+ @Override

+ public void init(String mapId, Context observerContext) throws Exception {

+ pcEq = ExportQueue.getInstance(EXPORT_QUEUE_ID, observerContext.getAppConfiguration());

+ }

+

+ @Override

+ public void updatingValues(TransactionBase tx, Iterator<Update<String, Counts>> updates) {

+ Iterator<Export<String, Counts>> exports =

+ Iterators.transform(updates, u -> new Export<>(u.getKey(), u.getNewValue().get()));

+ pcEq.addAll(tx, exports);

+ }

+ }

+

+}
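
PcmCombiner relies only on addition being associative and commutative: the +1 and -1 deltas that DocumentObserver sends for a phrase can be folded in any order and in any batch size. Because the combiner starts from zero and returns a running total, the values it receives presumably include the currently stored count along with the queued updates. The sketch below reproduces the fold with hypothetical stand-ins (`CountsSketch` mirrors `Counts`, `CombinerSketch` mirrors `PcmCombiner`) so it runs without Fluo Recipes.

```java
import java.util.Iterator;
import java.util.Optional;

// Hypothetical stand-in for phrasecount.pojos.Counts (same two fields and add()).
final class CountsSketch {
  final long docPhraseCount;
  final long totalPhraseCount;

  CountsSketch(long docPhraseCount, long totalPhraseCount) {
    this.docPhraseCount = docPhraseCount;
    this.totalPhraseCount = totalPhraseCount;
  }

  CountsSketch add(CountsSketch other) {
    return new CountsSketch(docPhraseCount + other.docPhraseCount,
        totalPhraseCount + other.totalPhraseCount);
  }
}

// Mirrors the body of PcmCombiner.combine(): fold every value for a key into one sum.
class CombinerSketch {
  static Optional<CountsSketch> combine(Iterator<CountsSketch> values) {
    CountsSketch sum = new CountsSketch(0, 0);
    while (values.hasNext()) {
      sum = sum.add(values.next());
    }
    // never Optional.empty(), so a phrase whose counts reach zero keeps a zero entry
    return Optional.of(sum);
  }
}
```

Since the combiner never returns an empty Optional, a phrase that drops to zero is still exported; PhraseCountTable.createMutation() then turns the zero counts into deletes.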

diff --git a/phrasecount/src/main/java/phrasecount/cmd/Load.java b/phrasecount/src/main/java/phrasecount/cmd/Load.java

new file mode 100644

index 0000000..82e4e75

--- /dev/null

+++ b/phrasecount/src/main/java/phrasecount/cmd/Load.java

@@ -0,0 +1,51 @@

+package phrasecount.cmd;

+

+import java.io.File;

+

+import com.google.common.base.Charsets;

+import com.google.common.io.Files;

+import org.apache.fluo.api.client.FluoClient;

+import org.apache.fluo.api.client.FluoFactory;

+import org.apache.fluo.api.client.LoaderExecutor;

+import org.apache.fluo.api.config.FluoConfiguration;

+import phrasecount.DocumentLoader;

+import phrasecount.pojos.Document;

+

+public class Load {

+

+ public static void main(String[] args) throws Exception {

+

+ if (args.length != 2) {

+ System.err.println("Usage : " + Load.class.getName() + " <fluo props file> <txt file dir>");

+ System.exit(-1);

+ }

+

+ FluoConfiguration config = new FluoConfiguration(new File(args[0]));

+ config.setLoaderThreads(20);

+ config.setLoaderQueueSize(40);

+

+ try (FluoClient fluoClient = FluoFactory.newClient(config);

+ LoaderExecutor le = fluoClient.newLoaderExecutor()) {

+ File[] files = new File(args[1]).listFiles();

+

+ if (files == null) {

+ System.out.println("Text file dir does not exist: " + args[1]);

+ } else {

+ for (File txtFile : files) {

+ if (txtFile.getName().endsWith(".txt")) {

+ String uri = txtFile.toURI().toString();

+ String content = Files.toString(txtFile, Charsets.UTF_8);

+

+ System.out.println("Processing : " + txtFile.toURI());

+ le.execute(new DocumentLoader(new Document(uri, content)));

+ } else {

+ System.out.println("Ignoring : " + txtFile.toURI());

+ }

+ }

+ }

+ }

+

+ // TODO figure out which threads are hanging around

+ System.exit(0);

+ }

+}

diff --git a/phrasecount/src/main/java/phrasecount/cmd/Mini.java b/phrasecount/src/main/java/phrasecount/cmd/Mini.java

new file mode 100644

index 0000000..e43c1f5

--- /dev/null

+++ b/phrasecount/src/main/java/phrasecount/cmd/Mini.java

@@ -0,0 +1,97 @@

+package phrasecount.cmd;

+

+import java.io.File;

+import java.util.HashMap;

+import java.util.List;

+import java.util.Map;

+

+import com.beust.jcommander.JCommander;

+import com.beust.jcommander.Parameter;

+import com.beust.jcommander.ParameterException;

+import org.apache.accumulo.core.conf.Property;

+import org.apache.accumulo.minicluster.MemoryUnit;

+import org.apache.accumulo.minicluster.MiniAccumuloCluster;

+import org.apache.accumulo.minicluster.MiniAccumuloConfig;

+import org.apache.accumulo.minicluster.ServerType;

+import org.apache.fluo.api.client.FluoAdmin.InitializationOptions;

+import org.apache.fluo.api.client.FluoFactory;

+import org.apache.fluo.api.config.FluoConfiguration;

+import org.apache.fluo.api.mini.MiniFluo;

+import phrasecount.Application;

+

+public class Mini {

+

+ static class Parameters {

+ @Parameter(names = {"-m", "--moreMemory"}, description = "Use more memory")

+ boolean moreMemory = false;

+

+ @Parameter(names = {"-w", "--workerThreads"}, description = "Number of worker threads")

+ int workerThreads = 5;

+

+ @Parameter(names = {"-t", "--tabletServers"}, description = "Number of tablet servers")

+ int tabletServers = 2;

+

+ @Parameter(names = {"-z", "--zookeeperPort"}, description = "Port to use for zookeeper")

+ int zookeeperPort = 0;

+

+ @Parameter(description = "<MAC dir> <output props file>")

+ List<String> args;

+ }

+

+ public static void main(String[] args) throws Exception {

+

+ Parameters params = new Parameters();

+ JCommander jc = new JCommander(params);

+

+ try {

+ jc.parse(args);

+ if (params.args == null || params.args.size() != 2)

+ throw new ParameterException("Expected two arguments");

+ } catch (ParameterException pe) {

+ System.out.println(pe.getMessage());

+ jc.setProgramName(Mini.class.getSimpleName());

+ jc.usage();

+ System.exit(-1);

+ }

+

+ MiniAccumuloConfig cfg = new MiniAccumuloConfig(new File(params.args.get(0)), "secret");

+ cfg.setZooKeeperPort(params.zookeeperPort);

+ cfg.setNumTservers(params.tabletServers);

+ if (params.moreMemory) {

+ cfg.setMemory(ServerType.TABLET_SERVER, 2, MemoryUnit.GIGABYTE);

+ Map<String, String> site = new HashMap<>();

+ site.put(Property.TSERV_DATACACHE_SIZE.getKey(), "768M");

+ site.put(Property.TSERV_INDEXCACHE_SIZE.getKey(), "256M");

+ cfg.setSiteConfig(site);

+ }

+

+ MiniAccumuloCluster cluster = new MiniAccumuloCluster(cfg);

+ cluster.start();

+

+ FluoConfiguration fluoConfig = new FluoConfiguration();

+

+ fluoConfig.setMiniStartAccumulo(false);

+ fluoConfig.setAccumuloInstance(cluster.getInstanceName());

+ fluoConfig.setAccumuloUser("root");

+ fluoConfig.setAccumuloPassword("secret");

+ fluoConfig.setAccumuloZookeepers(cluster.getZooKeepers());

+ fluoConfig.setInstanceZookeepers(cluster.getZooKeepers() + "/fluo");

+

+ fluoConfig.setAccumuloTable("data");

+ fluoConfig.setWorkerThreads(params.workerThreads);

+

+ fluoConfig.setApplicationName("phrasecount");

+

+ Application.configure(fluoConfig, new Application.Options(17, 17, cluster.getInstanceName(),

+ cluster.getZooKeepers(), "root", "secret", "pcExport"));

+

+ FluoFactory.newAdmin(fluoConfig).initialize(new InitializationOptions());

+

+ MiniFluo miniFluo = FluoFactory.newMiniFluo(fluoConfig);

+

+ miniFluo.getClientConfiguration().save(new File(params.args.get(1)));

+

+ System.out.println();

+ System.out.println("Wrote : " + params.args.get(1));

+ }

+}

diff --git a/phrasecount/src/main/java/phrasecount/cmd/Print.java b/phrasecount/src/main/java/phrasecount/cmd/Print.java

new file mode 100644

index 0000000..79819b2

--- /dev/null

+++ b/phrasecount/src/main/java/phrasecount/cmd/Print.java

@@ -0,0 +1,55 @@

+package phrasecount.cmd;

+

+import java.io.File;

+

+import com.google.common.collect.Iterables;

+import org.apache.fluo.api.client.FluoClient;

+import org.apache.fluo.api.client.FluoFactory;

+import org.apache.fluo.api.client.Snapshot;

+import org.apache.fluo.api.config.FluoConfiguration;

+import org.apache.fluo.api.data.Column;

+import org.apache.fluo.api.data.Span;

+import phrasecount.Constants;

+import phrasecount.pojos.PhraseAndCounts;

+import phrasecount.query.PhraseCountTable;

+

+public class Print {

+

+ public static void main(String[] args) throws Exception {

+ if (args.length != 2) {

+ System.err

+ .println("Usage : " + Print.class.getName() + " <fluo props file> <export table name>");

+ System.exit(-1);

+ }

+

+ FluoConfiguration fluoConfig = new FluoConfiguration(new File(args[0]));

+

+ PhraseCountTable pcTable = new PhraseCountTable(fluoConfig, args[1]);

+ for (PhraseAndCounts phraseCount : pcTable) {

+ System.out.printf("%7d %7d '%s'\n", phraseCount.docPhraseCount, phraseCount.totalPhraseCount,

+ phraseCount.phrase);

+ }

+

+ try (FluoClient fluoClient = FluoFactory.newClient(fluoConfig);

+ Snapshot snap = fluoClient.newSnapshot()) {

+

+ // TODO could precompute this using observers

+ int uriCount = count(snap, "uri:", Constants.DOC_HASH_COL);

+ int documentCount = count(snap, "doc:", Constants.DOC_REF_COUNT_COL);

+ int numIndexedDocs = count(snap, "doc:", Constants.INDEX_STATUS_COL);

+

+ System.out.println();

+ System.out.printf("# uris : %,d\n", uriCount);

+ System.out.printf("# unique documents : %,d\n", documentCount);

+ System.out.printf("# processed documents : %,d\n", numIndexedDocs);

+ System.out.println();

+ }

+

+ // TODO figure out which threads are hanging around

+ System.exit(0);

+ }

+

+ private static int count(Snapshot snap, String prefix, Column col) {

+ return Iterables.size(snap.scanner().over(Span.prefix(prefix)).fetch(col).byRow().build());

+ }

+}

diff --git a/phrasecount/src/main/java/phrasecount/cmd/Setup.java b/phrasecount/src/main/java/phrasecount/cmd/Setup.java

new file mode 100644

index 0000000..9d27917

--- /dev/null

+++ b/phrasecount/src/main/java/phrasecount/cmd/Setup.java

@@ -0,0 +1,38 @@

+package phrasecount.cmd;

+

+import java.io.File;

+

+import org.apache.accumulo.core.client.Connector;

+import org.apache.accumulo.core.client.TableNotFoundException;

+import org.apache.accumulo.core.client.ZooKeeperInstance;

+import org.apache.accumulo.core.client.security.tokens.PasswordToken;

+import org.apache.fluo.api.config.FluoConfiguration;

+import phrasecount.Application;

+import phrasecount.Application.Options;

+

+public class Setup {

+

+ public static void main(String[] args) throws Exception {

+ FluoConfiguration config = new FluoConfiguration(new File(args[0]));

+

+ String exportTable = args[1];

+

+ Connector conn =

+ new ZooKeeperInstance(config.getAccumuloInstance(), config.getAccumuloZookeepers())

+ .getConnector(config.getAccumuloUser(), new PasswordToken(config.getAccumuloPassword()));

+ try {

+ conn.tableOperations().delete(exportTable);

+ } catch (TableNotFoundException e) {

+ // ignore if table not found

+ }

+

+ conn.tableOperations().create(exportTable);

+

+ Options opts = new Options(103, 103, config.getAccumuloInstance(), config.getAccumuloZookeepers(),

+ config.getAccumuloUser(), config.getAccumuloPassword(), exportTable);

+

+ FluoConfiguration observerConfig = new FluoConfiguration();

+ Application.configure(observerConfig, opts);

+ observerConfig.save(System.out);

+ }

+}

diff --git a/phrasecount/src/main/java/phrasecount/cmd/Split.java b/phrasecount/src/main/java/phrasecount/cmd/Split.java

new file mode 100644

index 0000000..cc9d145

--- /dev/null

+++ b/phrasecount/src/main/java/phrasecount/cmd/Split.java

@@ -0,0 +1,40 @@

+package phrasecount.cmd;

+

+import java.io.File;

+import java.util.SortedSet;

+import java.util.TreeSet;

+

+import org.apache.accumulo.core.client.Connector;

+import org.apache.accumulo.core.client.ZooKeeperInstance;

+import org.apache.accumulo.core.client.security.tokens.PasswordToken;

+import org.apache.fluo.api.config.FluoConfiguration;

+import org.apache.hadoop.io.Text;

+

+/**

+ * Utility to add splits to the Accumulo table used by Fluo.

+ */

+public class Split {

+ public static void main(String[] args) throws Exception {

+ if (args.length != 2) {

+ System.err.println("Usage : " + Split.class.getName() + " <fluo props file> <table name>");

+ System.exit(-1);

+ }

+

+ FluoConfiguration fluoConfig = new FluoConfiguration(new File(args[0]));

+ ZooKeeperInstance zki =

+ new ZooKeeperInstance(fluoConfig.getAccumuloInstance(), fluoConfig.getAccumuloZookeepers());

+ Connector conn = zki.getConnector(fluoConfig.getAccumuloUser(),

+ new PasswordToken(fluoConfig.getAccumuloPassword()));

+

+ SortedSet<Text> splits = new TreeSet<>();

+

+ for (char c = 'b'; c < 'z'; c++) {

+ splits.add(new Text("phrase:" + c));

+ }

+

+ conn.tableOperations().addSplits(args[1], splits);

+

+ // TODO figure out which threads are hanging around

+ System.exit(0);

+ }

+}

diff --git a/phrasecount/src/main/java/phrasecount/pojos/Counts.java b/phrasecount/src/main/java/phrasecount/pojos/Counts.java

new file mode 100644

index 0000000..d8e0829

--- /dev/null

+++ b/phrasecount/src/main/java/phrasecount/pojos/Counts.java

@@ -0,0 +1,44 @@

+package phrasecount.pojos;

+

+import com.google.common.base.Objects;

+

+public class Counts {

+ // number of documents a phrase was seen in

+ public final long docPhraseCount;

+ // total times a phrase was seen in all documents

+ public final long totalPhraseCount;

+

+ public Counts() {

+ docPhraseCount = 0;

+ totalPhraseCount = 0;

+ }

+

+ public Counts(long docPhraseCount, long totalPhraseCount) {

+ this.docPhraseCount = docPhraseCount;

+ this.totalPhraseCount = totalPhraseCount;

+ }

+

+ public Counts add(Counts other) {

+ return new Counts(this.docPhraseCount + other.docPhraseCount, this.totalPhraseCount + other.totalPhraseCount);

+ }

+

+ @Override

+ public boolean equals(Object o) {

+ if (o instanceof Counts) {

+ Counts opc = (Counts) o;

+ return opc.docPhraseCount == docPhraseCount && opc.totalPhraseCount == totalPhraseCount;

+ }

+

+ return false;

+ }

+

+ @Override

+ public int hashCode() {

+ return (int) (993 * totalPhraseCount + 17 * docPhraseCount);

+ }

+

+ @Override

+ public String toString() {

+ return Objects.toStringHelper(this).add("documents", docPhraseCount).add("total", totalPhraseCount).toString();

+ }

+}

diff --git a/phrasecount/src/main/java/phrasecount/pojos/Document.java b/phrasecount/src/main/java/phrasecount/pojos/Document.java

new file mode 100644

index 0000000..5fc0e70

--- /dev/null

+++ b/phrasecount/src/main/java/phrasecount/pojos/Document.java

@@ -0,0 +1,59 @@

+package phrasecount.pojos;

+

+import java.util.HashMap;

+import java.util.Map;

+

+import com.google.common.hash.Hasher;

+import com.google.common.hash.Hashing;

+

+public class Document {

+ // the location where the document came from. This is needed in order to detect when a document

+ // changes.

+ private String uri;

+

+ // the text of a document.

+ private String content;

+

+ private String hash = null;

+

+ public Document(String uri, String content) {

+ this.content = content;

+ this.uri = uri;

+ }

+

+ public String getURI() {

+ return uri;

+ }

+

+ public String getHash() {

+ if (hash != null)

+ return hash;

+

+ Hasher hasher = Hashing.sha1().newHasher();

+ String[] tokens = content.toLowerCase().split("[^\\p{Alnum}]+");

+

+ for (String token : tokens) {

+ hasher.putString(token);

+ }

+

+ return hash = hasher.hash().toString();

+ }

+

+ public Map<String, Integer> getPhrases() {

+ String[] tokens = content.toLowerCase().split("[^\\p{Alnum}]+");

+

+ Map<String, Integer> phrases = new HashMap<>();

+ for (int i = 3; i < tokens.length; i++) {

+ String phrase = tokens[i - 3] + " " + tokens[i - 2] + " " + tokens[i - 1] + " " + tokens[i];

+ Integer old = phrases.put(phrase, 1);

+ if (old != null)

+ phrases.put(phrase, 1 + old);

+ }

+

+ return phrases;

+ }

+

+ public String getContent() {

+ return content;

+ }

+}
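
getPhrases() lower-cases the text, splits it on runs of non-alphanumeric characters, and counts every window of four consecutive tokens. The standalone sketch below (hypothetical class `PhraseSketch`) reproduces that logic, using `Map.merge` in place of the put-and-re-put sequence; the counting behavior is the same.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical standalone version of Document.getPhrases(): lower-case, tokenize on
// runs of non-alphanumeric characters, and count each window of four tokens.
class PhraseSketch {
  static Map<String, Integer> phrases(String content) {
    String[] tokens = content.toLowerCase().split("[^\\p{Alnum}]+");
    Map<String, Integer> phrases = new HashMap<>();
    for (int i = 3; i < tokens.length; i++) {
      String phrase = String.join(" ", tokens[i - 3], tokens[i - 2], tokens[i - 1], tokens[i]);
      phrases.merge(phrase, 1, Integer::sum); // increment, starting at 1 for a new phrase
    }
    return phrases;
  }
}
```

For instance, "The cat sat on the cat sat on" yields four distinct phrases, with "the cat sat on" counted twice; text with fewer than four tokens yields none. One quirk the original shares: when the text starts with a non-alphanumeric character, `String.split` produces an empty first token, so the first phrase begins with a space.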

diff --git a/phrasecount/src/main/java/phrasecount/pojos/PcKryoFactory.java b/phrasecount/src/main/java/phrasecount/pojos/PcKryoFactory.java

new file mode 100644

index 0000000..3158f00

--- /dev/null

+++ b/phrasecount/src/main/java/phrasecount/pojos/PcKryoFactory.java

@@ -0,0 +1,13 @@

+package phrasecount.pojos;

+

+import com.esotericsoftware.kryo.Kryo;

+import com.esotericsoftware.kryo.pool.KryoFactory;

+

+public class PcKryoFactory implements KryoFactory {

+ @Override

+ public Kryo create() {

+ Kryo kryo = new Kryo();

+ kryo.register(Counts.class, 9);

+ return kryo;

+ }

+}

diff --git a/phrasecount/src/main/java/phrasecount/pojos/PhraseAndCounts.java b/phrasecount/src/main/java/phrasecount/pojos/PhraseAndCounts.java

new file mode 100644

index 0000000..d6ddc33

--- /dev/null

+++ b/phrasecount/src/main/java/phrasecount/pojos/PhraseAndCounts.java

@@ -0,0 +1,24 @@

+package phrasecount.pojos;

+

+public class PhraseAndCounts extends Counts {

+ public String phrase;

+

+ public PhraseAndCounts(String phrase, int docPhraseCount, int totalPhraseCount) {

+ super(docPhraseCount, totalPhraseCount);

+ this.phrase = phrase;

+ }

+

+ @Override

+ public boolean equals(Object o) {

+ if (o instanceof PhraseAndCounts) {

+ PhraseAndCounts op = (PhraseAndCounts) o;

+ return phrase.equals(op.phrase) && super.equals(op);

+ }

+ return false;

+ }

+

+ @Override

+ public int hashCode() {

+ return super.hashCode() + 31 * phrase.hashCode();

+ }

+}

diff --git a/phrasecount/src/main/java/phrasecount/query/PhraseCountTable.java b/phrasecount/src/main/java/phrasecount/query/PhraseCountTable.java

new file mode 100644

index 0000000..f5f670a

--- /dev/null

+++ b/phrasecount/src/main/java/phrasecount/query/PhraseCountTable.java

@@ -0,0 +1,107 @@

+package phrasecount.query;

+

+import java.util.Iterator;

+import java.util.Map.Entry;

+

+import com.google.common.collect.Iterators;

+import org.apache.accumulo.core.client.ClientConfiguration;

+import org.apache.accumulo.core.client.Connector;

+import org.apache.accumulo.core.client.RowIterator;

+import org.apache.accumulo.core.client.Scanner;

+import org.apache.accumulo.core.client.ZooKeeperInstance;

+import org.apache.accumulo.core.client.security.tokens.PasswordToken;

+import org.apache.accumulo.core.data.Key;

+import org.apache.accumulo.core.data.Mutation;

+import org.apache.accumulo.core.data.Range;

+import org.apache.accumulo.core.data.Value;

+import org.apache.accumulo.core.security.Authorizations;

+import org.apache.fluo.api.config.FluoConfiguration;

+import org.apache.hadoop.io.Text;

+import phrasecount.pojos.Counts;

+import phrasecount.pojos.PhraseAndCounts;

+

+/**

+ * All of the code for dealing with the Accumulo table that Fluo is exporting to

+ */

+public class PhraseCountTable implements Iterable<PhraseAndCounts> {

+

+ static final String STAT_CF = "stat";

+

+ //name of column qualifier used to store phrase count across all documents

+ static final String TOTAL_PC_CQ = "totalCount";

+

+ //name of column qualifier used to store number of documents containing a phrase

+ static final String DOC_PC_CQ = "docCount";

+

+ public static Mutation createMutation(String phrase, long seq, Counts pc) {

+ Mutation mutation = new Mutation(phrase);

+

+ // use the sequence number as the Accumulo timestamp so that older updates are hidden behind

+ // newer ones, even when they are written out of order

+ if (pc.totalPhraseCount == 0)

+ mutation.putDelete(STAT_CF, TOTAL_PC_CQ, seq);

+ else

+ mutation.put(STAT_CF, TOTAL_PC_CQ, seq, pc.totalPhraseCount + "");

+

+ if (pc.docPhraseCount == 0)

+ mutation.putDelete(STAT_CF, DOC_PC_CQ, seq);

+ else

+ mutation.put(STAT_CF, DOC_PC_CQ, seq, pc.docPhraseCount + "");

+

+ return mutation;

+ }

+

+ private Connector conn;

+ private String table;

+

+ public PhraseCountTable(FluoConfiguration fluoConfig, String table) throws Exception {

+ ZooKeeperInstance zki = new ZooKeeperInstance(

+ new ClientConfiguration().withZkHosts(fluoConfig.getAccumuloZookeepers())

+ .withInstance(fluoConfig.getAccumuloInstance()));

+ this.conn = zki.getConnector(fluoConfig.getAccumuloUser(),

+ new PasswordToken(fluoConfig.getAccumuloPassword()));

+ this.table = table;

+ }

+

+ public PhraseCountTable(Connector conn, String table) {

+ this.conn = conn;

+ this.table = table;

+ }

+

+

+ public Counts getPhraseCounts(String phrase) throws Exception {

+ Scanner scanner = conn.createScanner(table, Authorizations.EMPTY);

+ scanner.setRange(new Range(phrase));

+

+ int sum = 0;

+ int docCount = 0;

+

+ for (Entry<Key, Value> entry : scanner) {

+ String cq = entry.getKey().getColumnQualifierData().toString();

+ if (cq.equals(TOTAL_PC_CQ)) {

+ sum = Integer.parseInt(entry.getValue().toString());

+ }

+

+ if (cq.equals(DOC_PC_CQ)) {

+ docCount = Integer.parseInt(entry.getValue().toString());

+ }

+ }

+

+ return new Counts(docCount, sum);

+ }

+

+ @Override

+ public Iterator<PhraseAndCounts> iterator() {

+ try {

+ Scanner scanner = conn.createScanner(table, Authorizations.EMPTY);

+ scanner.fetchColumn(new Text(STAT_CF), new Text(TOTAL_PC_CQ));

+ scanner.fetchColumn(new Text(STAT_CF), new Text(DOC_PC_CQ));

+

+ return Iterators.transform(new RowIterator(scanner), new RowTransform());

+ } catch (RuntimeException e) {

+ throw e;

+ } catch (Exception e) {

+ throw new RuntimeException(e);

+ }

+ }

+}
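The comment in `createMutation` relies on how Accumulo resolves versions of a cell by timestamp: with a table's default versioning behavior the newest timestamp wins, and a delete marker hides every entry at or below its own timestamp. The following is a minimal in-memory sketch of that rule for a single cell, assuming a table that keeps one version; `TimestampOrderingSketch` is a hypothetical class for illustration and is not part of the Accumulo API.

```java
import java.util.Map;
import java.util.TreeMap;

/**
 * Hypothetical in-memory model of one Accumulo cell whose updates carry explicit
 * timestamps (here, Fluo export sequence numbers). Not the Accumulo API.
 */
public class TimestampOrderingSketch {
  // Puts keyed by timestamp; TreeMap keeps them ordered so the newest is last.
  private final TreeMap<Long, String> puts = new TreeMap<>();
  // Newest delete seen so far; a delete hides every put at or below its timestamp.
  private long newestDelete = Long.MIN_VALUE;

  public void put(long seq, String value) {
    puts.put(seq, value);
  }

  public void delete(long seq) {
    newestDelete = Math.max(newestDelete, seq);
  }

  /** Returns the visible value, or null if there is none or it has been deleted. */
  public String read() {
    Map.Entry<Long, String> newest = puts.lastEntry();
    if (newest == null || newest.getKey() <= newestDelete) {
      return null;
    }
    return newest.getValue();
  }

  public static void main(String[] args) {
    TimestampOrderingSketch cell = new TimestampOrderingSketch();
    cell.put(7, "12"); // newer export arrives first
    cell.put(5, "10"); // stale export arrives late and stays hidden
    System.out.println(cell.read()); // prints 12
    cell.delete(9);    // count dropped to zero at seq 9
    cell.put(8, "11"); // stale put below the delete stays hidden
    System.out.println(cell.read()); // prints null
  }
}
```

Because the newest timestamp wins regardless of arrival order, a late, stale export cannot overwrite a newer count, which is the property the comment in `createMutation` describes.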

diff --git a/phrasecount/src/main/java/phrasecount/query/RowTransform.java b/phrasecount/src/main/java/phrasecount/query/RowTransform.java

new file mode 100644

index 0000000..e86439c

--- /dev/null

+++ b/phrasecount/src/main/java/phrasecount/query/RowTransform.java

@@ -0,0 +1,34 @@

+package phrasecount.query;

+

+import java.util.Iterator;

+import java.util.Map.Entry;

+

+import com.google.common.base.Function;

+import org.apache.accumulo.core.data.Key;

+import org.apache.accumulo.core.data.Value;

+import phrasecount.pojos.PhraseAndCounts;

+

+public class RowTransform implements Function<Iterator<Entry<Key, Value>>, PhraseAndCounts> {

+ @Override

+ public PhraseAndCounts apply(Iterator<Entry<Key, Value>> input) {

+ String phrase = null;

+

+ int totalPhraseCount = 0;

+ int docPhraseCount = 0;

+

+ while (input.hasNext()) {

+ Entry<Key, Value> colEntry = input.next();

+ String cq = colEntry.getKey().getColumnQualifierData().toString();

+

+ if (cq.equals(PhraseCountTable.TOTAL_PC_CQ))

+ totalPhraseCount = Integer.parseInt(colEntry.getValue().toString());

+ else if (cq.equals(PhraseCountTable.DOC_PC_CQ))

+ docPhraseCount = Integer.parseInt(colEntry.getValue().toString());

+

+ if (phrase == null)

+ phrase = colEntry.getKey().getRowData().toString();

+ }

+

+ return new PhraseAndCounts(phrase, docPhraseCount, totalPhraseCount);

+ }

+}
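`PhraseCountTable.iterator()` wraps the scanner in a `RowIterator`, which groups consecutive entries of the sorted scan by row, and `RowTransform` then folds each row's entries into one `PhraseAndCounts`. A minimal sketch of that grouping using plain Java types, assuming input already sorted by row as scanner output is; `RowGroupingSketch`, `Cell`, and `PhraseCounts` are hypothetical stand-ins, not the Accumulo or Guava API.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical sketch of the RowIterator + RowTransform pipeline using plain Java types. */
public class RowGroupingSketch {

  /** One (row, column qualifier, value) cell, as a scanner would return it. */
  static final class Cell {
    final String row;
    final String cq;
    final String value;

    Cell(String row, String cq, String value) {
      this.row = row;
      this.cq = cq;
      this.value = value;
    }
  }

  /** Per-phrase result, mirroring the fields PhraseAndCounts is built from. */
  static final class PhraseCounts {
    final String phrase;
    final int docCount;
    final int totalCount;

    PhraseCounts(String phrase, int docCount, int totalCount) {
      this.phrase = phrase;
      this.docCount = docCount;
      this.totalCount = totalCount;
    }

    @Override
    public String toString() {
      return phrase + " doc=" + docCount + " total=" + totalCount;
    }
  }

  /** Groups consecutive cells that share a row and folds each group into one PhraseCounts. */
  static List<PhraseCounts> groupRows(List<Cell> sortedCells) {
    List<PhraseCounts> result = new ArrayList<>();
    int i = 0;
    while (i < sortedCells.size()) {
      String row = sortedCells.get(i).row;
      // A column deleted because its count reached zero is simply absent, so default to 0.
      int docCount = 0;
      int totalCount = 0;
      // Consume every cell in this row; relies on the input being sorted by row.
      while (i < sortedCells.size() && sortedCells.get(i).row.equals(row)) {
        Cell cell = sortedCells.get(i++);
        if (cell.cq.equals("totalCount")) {
          totalCount = Integer.parseInt(cell.value);
        } else if (cell.cq.equals("docCount")) {
          docCount = Integer.parseInt(cell.value);
        }
      }
      result.add(new PhraseCounts(row, docCount, totalCount));
    }
    return result;
  }

  public static void main(String[] args) {
    List<Cell> cells = Arrays.asList(
        new Cell("a b", "docCount", "2"),
        new Cell("a b", "totalCount", "5"),
        new Cell("b c", "totalCount", "1"));
    for (PhraseCounts pc : groupRows(cells)) {
      System.out.println(pc);
    }
  }
}
```

Defaulting a missing column to zero matches `createMutation`, which writes a delete rather than a zero value when a count drops to zero.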

diff --git a/phrasecount/src/test/java/phrasecount/PhraseCounterTest.java b/phrasecount/src/test/java/phrasecount/PhraseCounterTest.java

new file mode 100644

index 0000000..5815883

--- /dev/null

+++ b/phrasecount/src/test/java/phrasecount/PhraseCounterTest.java

@@ -0,0 +1,215 @@

+package phrasecount;

+

+import java.util.Random;

+import java.util.concurrent.atomic.AtomicInteger;

+

+import org.apache.accumulo.core.client.Connector;

+import org.apache.accumulo.core.client.security.tokens.PasswordToken;

+import org.apache.accumulo.minicluster.MiniAccumuloCluster;

+import org.apache.accumulo.minicluster.MiniAccumuloConfig;

+import org.apache.fluo.api.client.FluoAdmin.InitializationOptions;

+import org.apache.fluo.api.client.FluoClient;

+import org.apache.fluo.api.client.FluoFactory;

+import org.apache.fluo.api.client.LoaderExecutor;

+import org.apache.fluo.api.config.FluoConfiguration;

+import org.apache.fluo.api.mini.MiniFluo;

+import org.apache.fluo.recipes.core.types.TypedSnapshot;

+import org.junit.After;

+import org.junit.AfterClass;

+import org.junit.Assert;

+import org.junit.Before;

+import org.junit.BeforeClass;

+import org.junit.Test;

+import org.junit.rules.TemporaryFolder;

+import phrasecount.pojos.Counts;

+import phrasecount.pojos.Document;

+import phrasecount.query.PhraseCountTable;

+

+import static phrasecount.Constants.DOC_CONTENT_COL;

+import static phrasecount.Constants.DOC_REF_COUNT_COL;

+import static phrasecount.Constants.TYPEL;

+

+// TODO make this an integration test

+

+public class PhraseCounterTest {

+ public static TemporaryFolder folder = new TemporaryFolder();

+ public static MiniAccumuloCluster cluster;

+ private static FluoConfiguration props;

+ private static MiniFluo miniFluo;

+ private static final PasswordToken password = new PasswordToken("secret");

+ private static AtomicInteger tableCounter = new AtomicInteger(1);

+ private PhraseCountTable pcTable;

+

+ @BeforeClass

+ public static void setUpBeforeClass() throws Exception {

+ folder.create();

+ MiniAccumuloConfig cfg = new MiniAccumuloConfig(folder.newFolder("miniAccumulo"),

+ new String(password.getPassword()));

+ cluster = new MiniAccumuloCluster(cfg);

+ cluster.start();

+ }

+

+ @AfterClass

+ public static void tearDownAfterClass() throws Exception {

+ cluster.stop();

+ folder.delete();

+ }

+

+ @Before

+ public void setUpFluo() throws Exception {

+

+ // Configure Fluo to use the existing MiniAccumulo instance. MiniFluo could create its own

+ // MiniAccumulo instance instead, but we need access to that instance in order to create

+ // the export/query table.

+ props = new FluoConfiguration();

+ props.setMiniStartAccumulo(false);

+ props.setApplicationName("phrasecount");

+ props.setAccumuloInstance(cluster.getInstanceName());

+ props.setAccumuloUser("root");

+ props.setAccumuloPassword("secret");