[docs](images) Update Partial Optimized Images (#683)

diff --git a/docs/admin-manual/cluster-management/load-balancing.md b/docs/admin-manual/cluster-management/load-balancing.md

index a904c1d..9e1553d 100644

--- a/docs/admin-manual/cluster-management/load-balancing.md

+++ b/docs/admin-manual/cluster-management/load-balancing.md

@@ -514,22 +514,22 @@

Then add the following in it

```bash

-events {

-worker_connections 1024;

-}

-stream {

- upstream mysqld {

- hash $remote_addr consistent;

- server 172.31.7.119:9030 weight=1 max_fails=2 fail_timeout=60s;

- ##注意这里如果是多个FE,加载这里就行了

- }

- ###这里是配置代理的端口,超时时间等

- server {

- listen 6030;

- proxy_connect_timeout 300s;

- proxy_timeout 300s;

- proxy_pass mysqld;

- }

+events {

+worker_connections 1024;

+}

+stream {

+ upstream mysqld {

+ hash $remote_addr consistent;

+ server 172.31.7.119:9030 weight=1 max_fails=2 fail_timeout=60s;

+ ## Note: If there are multiple FEs, just load them here.

+ }

+ ### Configuration for proxy port, timeout, etc.

+ server {

+ listen 6030;

+ proxy_connect_timeout 300s;

+ proxy_timeout 300s;

+ proxy_pass mysqld;

+ }

}

```

diff --git a/docs/admin-manual/fe/node-action.md b/docs/admin-manual/fe/node-action.md

index 842d58c..70dd50f 100644

--- a/docs/admin-manual/fe/node-action.md

+++ b/docs/admin-manual/fe/node-action.md

@@ -24,7 +24,7 @@

under the License.

-->

-# Node Action

+

## Request

@@ -220,7 +220,8 @@

### Response

`GET /rest/v2/manager/node/configuration_name`

-```

+

+```json

{

"msg": "success",

"code": 0,

@@ -237,7 +238,8 @@

```

`GET /rest/v2/manager/node/node_list`

-```

+

+```json

{

"msg": "success",

"code": 0,

@@ -254,27 +256,28 @@

```

`POST /rest/v2/manager/node/configuration_info?type=fe`

-```

-{

- "msg": "success",

- "code": 0,

- "data": {

- "column_names": [

- "配置项",

- "节点",

- "节点类型",

- "配置值类型",

- "MasterOnly",

- "配置值",

- "可修改"

- ],

- "rows": [

- [

- ""

- ]

- ]

- },

- "count": 0

+

+```json

+{

+ "msg": "success",

+ "code": 0,

+ "data": {

+ "column_names": [

+ "Configuration Item",

+ "Node",

+ "Node Type",

+ "Configuration Value Type",

+ "MasterOnly",

+ "Configuration Value",

+ "Modifiable"

+ ],

+ "rows": [

+ [

+ ""

+ ]

+ ]

+ },

+ "count": 0

}

```

@@ -285,12 +288,12 @@

"code": 0,

"data": {

"column_names": [

- "配置项",

- "节点",

- "节点类型",

- "配置值类型",

- "配置值",

- "可修改"

+ "Configuration Item",

+ "Node",

+ "Node Type",

+ "Configuration Value Type",

+ "Configuration Value",

+ "Modifiable"

],

"rows": [

[

@@ -308,7 +311,8 @@

POST /rest/v2/manager/node/configuration_info?type=fe

body:

- ```

+

+ ```json

{

"conf_name":[

"agent_task_resend_wait_time_ms"

@@ -317,33 +321,34 @@

```

Response:

- ```

- {

- "msg": "success",

- "code": 0,

- "data": {

- "column_names": [

- "配置项",

- "节点",

- "节点类型",

- "配置值类型",

- "MasterOnly",

- "配置值",

- "可修改"

- ],

- "rows": [

- [

- "agent_task_resend_wait_time_ms",

- "127.0.0.1:8030",

- "FE",

- "long",

- "true",

- "50000",

- "true"

- ]

- ]

- },

- "count": 0

+

+ ```json

+ {

+ "msg": "success",

+ "code": 0,

+ "data": {

+ "column_names": [

+ "Configuration Item",

+ "Node",

+ "Node Type",

+ "Configuration Value Type",

+ "MasterOnly",

+ "Configuration Value",

+ "Modifiable"

+ ],

+ "rows": [

+ [

+ "agent_task_resend_wait_time_ms",

+ "127.0.0.1:8030",

+ "FE",

+ "long",

+ "true",

+ "50000",

+ "true"

+ ]

+ ]

+ },

+ "count": 0

}

```

@@ -358,7 +363,8 @@

Used to modify fe or be node configuration values

### Request body

-```

+

+```json

{

"config_name":{

"node":[

@@ -377,7 +383,8 @@

### Response

`GET /rest/v2/manager/node/configuration_name`

-```

+

+``` json

{

"msg": "",

"code": 0,

@@ -403,7 +410,8 @@

POST /rest/v2/manager/node/set_config/fe

body:

- ```

+

+ ```json

{

"agent_task_resend_wait_time_ms":{

"node":[

@@ -454,7 +462,8 @@

action:ADD/DROP/DECOMMISSION

### Request body

-```

+

+```json

{

"hostPorts": ["127.0.0.1:9050"],

"properties": {

@@ -467,7 +476,8 @@

```

### Response

-```

+

+```json

{

"msg": "Error",

"code": 1,

@@ -486,14 +496,16 @@

post /rest/v2/manager/node/ADD/be

Request body

- ```

+

+ ```json

{

"hostPorts": ["127.0.0.1:9050"]

}

```

Response

- ```

+

+ ```json

{

"msg": "success",

"code": 0,

@@ -506,14 +518,16 @@

post /rest/v2/manager/node/DROP/be

Request body

- ```

+

+ ```json

{

"hostPorts": ["127.0.0.1:9050"]

}

```

Response

- ```

+

+ ```json

{

"msg": "success",

"code": 0,

@@ -526,14 +540,14 @@

post /rest/v2/manager/node/DECOMMISSION/be

Request body

- ```

+ ```json

{

"hostPorts": ["127.0.0.1:9050"]

}

```

Response

- ```

+ ```json

{

"msg": "success",

"code": 0,

@@ -553,7 +567,7 @@

action:ADD/DROP

### Request body

-```

+```json

{

"role": "FOLLOWER",

"hostPort": "127.0.0.1:9030"

@@ -564,7 +578,7 @@

```

### Response

-```

+```json

{

"msg": "Error",

"code": 1,

@@ -583,7 +597,7 @@

post /rest/v2/manager/node/ADD/fe

Request body

- ```

+ ```json

{

"role": "FOLLOWER",

"hostPort": "127.0.0.1:9030"

@@ -591,7 +605,7 @@

```

Response

- ```

+ ```json

{

"msg": "success",

"code": 0,

@@ -604,7 +618,7 @@

post /rest/v2/manager/node/DROP/fe

Request body

- ```

+ ```json

{

"role": "FOLLOWER",

"hostPort": "127.0.0.1:9030"

@@ -612,7 +626,7 @@

```

Response

- ```

+ ```json

{

"msg": "success",

"code": 0,

diff --git a/docs/admin-manual/fe/query-profile-action.md b/docs/admin-manual/fe/query-profile-action.md

index bd0effc..5d3904a 100644

--- a/docs/admin-manual/fe/query-profile-action.md

+++ b/docs/admin-manual/fe/query-profile-action.md

@@ -24,7 +24,7 @@

under the License.

-->

-# Query Profile Action

+

## Request

@@ -71,75 +71,78 @@

### Response

-```

+```json

{

- "msg": "success",

- "code": 0,

- "data": {

- "column_names": [

- "Query ID",

- "FE节点",

- "查询用户",

- "执行数据库",

- "Sql",

- "查询类型",

- "开始时间",

- "结束时间",

- "执行时长",

- "状态"

- ],

- "rows": [

- [

- ...

- ]

- ]

- },

- "count": 0

+ "msg": "success",

+ "code": 0,

+ "data": {

+ "column_names": [

+ "Query ID",

+ "FE Node",

+ "Query User",

+ "Execution Database",

+ "Sql",

+ "Query Type",

+ "Start Time",

+ "End Time",

+ "Execution Duration",

+ "Status"

+ ],

+ "rows": [

+ [

+ ...

+ ]

+ ]

+ },

+ "count": 0

}

```

-<version since="1.2">

+:::info Note

-Admin 和 Root 用户可以查看所有 Query。普通用户仅能查看自己发送的 Query。

+Since Doris Version 1.2, Admin and Root users can view all queries. Regular users can only view their own submitted queries.

-</version>

+:::

+

+

### Examples

-```

+

+```json

GET /rest/v2/manager/query/query_info

{

- "msg": "success",

- "code": 0,

- "data": {

- "column_names": [

- "Query ID",

- "FE节点",

- "查询用户",

- "执行数据库",

- "Sql",

- "查询类型",

- "开始时间",

- "结束时间",

- "执行时长",

- "状态"

- ],

- "rows": [

- [

- "d7c93d9275334c35-9e6ac5f295a7134b",

- "127.0.0.1:8030",

- "root",

- "default_cluster:testdb",

- "select c.id, c.name, p.age, p.phone, c.date, c.cost from cost c join people p on c.id = p.id where p.age > 20 order by c.id",

- "Query",

- "2021-07-29 16:59:12",

- "2021-07-29 16:59:12",

- "109ms",

- "EOF"

- ]

- ]

- },

- "count": 0

+ "msg": "success",

+ "code": 0,

+ "data": {

+ "column_names": [

+ "Query ID",

+ "FE Node",

+ "Query User",

+ "Execution Database",

+ "Sql",

+ "Query Type",

+ "Start Time",

+ "End Time",

+ "Execution Duration",

+ "Status"

+ ],

+ "rows": [

+ [

+ "d7c93d9275334c35-9e6ac5f295a7134b",

+ "127.0.0.1:8030",

+ "root",

+ "default_cluster:testdb",

+ "select c.id, c.name, p.age, p.phone, c.date, c.cost from cost c join people p on c.id = p.id where p.age > 20 order by c.id",

+ "Query",

+ "2021-07-29 16:59:12",

+ "2021-07-29 16:59:12",

+ "109ms",

+ "EOF"

+ ]

+ ]

+ },

+ "count": 0

}

```

@@ -167,7 +170,7 @@

### Response

-```

+```json

{

"msg": "success",

"code": 0,

@@ -176,11 +179,11 @@

}

```

-<version since="1.2">

+:::note Info

-Admin and Root user can view all queries. Ordinary users can only view the Query sent by themselves. If the specified trace id does not exist or has no permission, it will return Bad Request:

+Since Doris version 1.2, admin and root user can view all queries. Ordinary users can only view the Query sent by themselves. If the specified trace id does not exist or has no permission, it will return Bad Request:

-```

+```json

{

"msg": "Bad Request",

"code": 403,

@@ -188,8 +191,8 @@

"count": 0

}

```

+:::

-</version>

## Get the sql and text profile for the specified query

@@ -215,7 +218,7 @@

### Response

-```

+```json

{

"msg": "success",

"code": 0,

@@ -226,7 +229,7 @@

}

```

-```

+```json

{

"msg": "success",

"code": 0,

@@ -237,11 +240,11 @@

}

```

-<version since="1.2">

+:::note Info

-Admin and Root user can view all queries. Ordinary users can only view the Query sent by themselves. If the specified trace id does not exist or has no permission, it will return Bad Request:

+Since Doris version 1.2, admin and root user can view all queries. Ordinary users can only view the Query sent by themselves. If the specified trace id does not exist or has no permission, it will return Bad Request:

-```

+```json

{

"msg": "Bad Request",

"code": 403,

@@ -250,13 +253,13 @@

}

```

-</version>

+:::

### Examples

1. get sql.

- ```

+ ```json

GET /rest/v2/manager/query/sql/d7c93d9275334c35-9e6ac5f295a7134b

Response:

@@ -275,7 +278,9 @@

`GET /rest/v2/manager/query/profile/fragments/{query_id}`

:::caution

+

Since 2.1.1, this API is deprecated. You can still download profile from http://<fe_ip>:<fe_http_port>/QueryProfile

+

:::

### Description

@@ -296,7 +301,7 @@

### Response

-```

+```json

{

"msg": "success",

"code": 0,

@@ -316,11 +321,11 @@

}

```

-<version since="1.2">

+:::note Info

-Admin and Root user can view all queries. Ordinary users can only view the Query sent by themselves. If the specified trace id does not exist or has no permission, it will return Bad Request:

+Since Doris version 1.2, admin and root user can view all queries. Ordinary users can only view the Query sent by themselves. If the specified trace id does not exist or has no permission, it will return Bad Request:

-```

+```json

{

"msg": "Bad Request",

"code": 403,

@@ -328,12 +333,12 @@

"count": 0

}

```

+:::

-</version>

### Examples

-```

+```json

GET /rest/v2/manager/query/profile/fragments/d7c93d9275334c35-9e6ac5f295a7134b

Response:

@@ -408,7 +413,7 @@

### Response

-```

+```json

{

"msg": "success",

"code": 0,

@@ -419,11 +424,11 @@

}

```

-<version since="1.2">

+:::note Info

-Admin and Root user can view all queries. Ordinary users can only view the Query sent by themselves. If the specified trace id does not exist or has no permission, it will return Bad Request:

+Since Doris version 1.2, admin and root user can view all queries. Ordinary users can only view the Query sent by themselves. If the specified trace id does not exist or has no permission, it will return Bad Request:

-```

+```json

{

"msg": "Bad Request",

"code": 403,

@@ -431,8 +436,9 @@

"count": 0

}

```

+:::

-</version>

+

## Current running queries

@@ -452,7 +458,7 @@

### Response

-```

+```json

{

"msg": "success",

"code": 0,

@@ -485,7 +491,7 @@

### Response

-```

+```json

{

"msg": "success",

"code": 0,

diff --git a/docs/admin-manual/fe/query-stats-action.md b/docs/admin-manual/fe/query-stats-action.md

index 9ef5035..d06b10c 100644

--- a/docs/admin-manual/fe/query-stats-action.md

+++ b/docs/admin-manual/fe/query-stats-action.md

@@ -24,20 +24,17 @@

under the License.

-->

-# Query Stats Action

-

-<version since="dev"></version>

## Request

```

-查看

-get api/query_stats/<catalog_name>

-get api/query_stats/<catalog_name>/<db_name>

-get api/query_stats/<catalog_name>/<db_name>/<tbl_name>

-

-清空

-delete api/query_stats/<catalog_name>/<db_name>

+View

+get api/query_stats/<catalog_name>

+get api/query_stats/<catalog_name>/<db_name>

+get api/query_stats/<catalog_name>/<db_name>/<tbl_name>

+

+Clear

+delete api/query_stats/<catalog_name>/<db_name>

delete api/query_stats/<catalog_name>/<db_name>/<tbl_name>

```

diff --git a/docs/admin-manual/maint-monitor/tablet-repair-and-balance.md b/docs/admin-manual/maint-monitor/tablet-repair-and-balance.md

index cdcb838..96ed613 100644

--- a/docs/admin-manual/maint-monitor/tablet-repair-and-balance.md

+++ b/docs/admin-manual/maint-monitor/tablet-repair-and-balance.md

@@ -571,31 +571,57 @@

The meanings of each line are as follows:

-* num of tablet check round: Tablet Checker 检查次数

-* cost of tablet check(ms): Tablet Checker 检查总耗时

-* num of tablet checked in tablet checker: Tablet Checker 检查过的 tablet 数量

-* num of unhealthy tablet checked in tablet checker: Tablet Checker 检查过的不健康的 tablet 数量

-* num of tablet being added to tablet scheduler: 被提交到 Tablet Scheduler 中的 tablet 数量

-* num of tablet schedule round: Tablet Scheduler 运行次数

-* cost of tablet schedule(ms): Tablet Scheduler 运行总耗时

-* num of tablet being scheduled: 被调度的 Tablet 总数量

-* num of tablet being scheduled succeeded: 被成功调度的 Tablet 总数量

-* num of tablet being scheduled failed: 调度失败的 Tablet 总数量

-* num of tablet being scheduled discard: 调度失败且被抛弃的 Tablet 总数量

-* num of tablet priority upgraded: 优先级上调次数

-* num of tablet priority downgraded: 优先级下调次数

-* num of clone task: number of clone tasks generated

-* num of clone task succeeded: clone 任务成功的数量

-* num of clone task failed: clone 任务失败的数量

-* num of clone task timeout: clone 任务超时的数量

-* num of replica missing error: the number of tablets whose status is checked is the missing copy

-* num of replica version missing error: 检查的状态为版本缺失的 tablet 的数量(该统计值包括了 num of replica relocating 和 num of replica missing in cluster error)

-*num of replica relocation *29366;* 24577;*replica relocation tablet *

-* num of replica redundant error: Number of tablets whose checked status is replica redundant

-* num of replica missing in cluster error: 检查的状态为不在对应 cluster 的 tablet 的数量

-* num of balance scheduled: 均衡调度的次数

+- num of tablet check round: Number of Tablet Checker inspections

-> Note: The above states are only historical accumulative values. We also print these statistics regularly in the FE logs, where the values in parentheses represent the number of changes in each statistical value since the last printing dependence of the statistical information.

+- cost of tablet check(ms): Total time consumed by Tablet Checker inspections (milliseconds)

+

+- num of tablet checked in tablet checker: Number of tablets checked by the Tablet Checker

+

+- num of unhealthy tablet checked in tablet checker: Number of unhealthy tablets checked by the Tablet Checker

+

+- num of tablet being added to tablet scheduler: Number of tablets submitted to the Tablet Scheduler

+

+- num of tablet schedule round: Number of Tablet Scheduler runs

+

+- cost of tablet schedule(ms): Total time consumed by Tablet Scheduler runs (milliseconds)

+

+- num of tablet being scheduled: Total number of tablets scheduled

+

+- num of tablet being scheduled succeeded: Total number of tablets successfully scheduled

+

+- num of tablet being scheduled failed: Total number of tablets that failed scheduling

+

+- num of tablet being scheduled discard: Total number of tablets discarded due to scheduling failures

+

+- num of tablet priority upgraded: Number of tablet priority upgrades

+

+- num of tablet priority downgraded: Number of tablet priority downgrades

+

+- num of clone task: Number of clone tasks generated

+

+- num of clone task succeeded: Number of successful clone tasks

+

+- num of clone task failed: Number of failed clone tasks

+

+- num of clone task timeout: Number of clone tasks that timed out

+

+- num of replica missing error: Number of tablets whose status is checked as missing replicas

+

+- num of replica version missing error: Number of tablets checked with missing version status (this statistic includes num of replica relocating and num of replica missing in cluster error)

+

+- num of replica relocation: Number of replica relocations

+

+- num of replica redundant error: Number of tablets whose checked status is replica redundant

+

+- num of replica missing in cluster error: Number of tablets checked with a status indicating they are missing from the corresponding cluster

+

+- num of balance scheduled: Number of balanced scheduling attempts

+

+:::info Note

+

+The above states are only historical accumulative values. We also print these statistics regularly in the FE logs, where the values in parentheses represent the number of changes in each statistical value since the last printing dependence of the statistical information.

+

+:::

## Relevant configuration instructions

diff --git a/docs/data-operate/import/routine-load-manual.md b/docs/data-operate/import/routine-load-manual.md

index 285e887..db5d561 100644

--- a/docs/data-operate/import/routine-load-manual.md

+++ b/docs/data-operate/import/routine-load-manual.md

@@ -62,7 +62,7 @@

The specific process of Routine Load is illustrated in the following diagram:

-

+

1. The Client submits a Routine Load job to the FE to establish a persistent Routine Load Job.

diff --git a/docs/data-operate/import/stream-load-manual.md b/docs/data-operate/import/stream-load-manual.md

index 7dff5c4..1dc57f9 100644

--- a/docs/data-operate/import/stream-load-manual.md

+++ b/docs/data-operate/import/stream-load-manual.md

@@ -60,7 +60,7 @@

The following figure shows the main flow of Stream load, omitting some import details.

-

+

1. The client submits a Stream Load import job request to the FE (Frontend).

2. The FE randomly selects a BE (Backend) as the Coordinator node, which is responsible for scheduling the import job, and then returns an HTTP redirect to the client.

diff --git a/docs/ecosystem/datax.md b/docs/ecosystem/datax.md

index aaa5ad8..047081a 100644

--- a/docs/ecosystem/datax.md

+++ b/docs/ecosystem/datax.md

@@ -381,13 +381,13 @@

2022-11-16 14:29:04.205 [job-0] INFO JobContainer - PerfTrace not enable!

2022-11-16 14:29:04.206 [job-0] INFO StandAloneJobContainerCommunicator - Total 2 records, 214 bytes | Speed 21B/s, 0 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.000s | Percentage 100.00%

2022-11-16 14:29:04.206 [job-0] INFO JobContainer -

-任务启动时刻 : 2022-11-16 14:28:53

-任务结束时刻 : 2022-11-16 14:29:04

-任务总计耗时 : 10s

-任务平均流量 : 21B/s

-记录写入速度 : 0rec/s

-读出记录总数 : 2

-读写失败总数 : 0

+Task Start Time : 2022-11-16 14:28:53

+Task End Time : 2022-11-16 14:29:04

+Total Task Duration : 10s

+Average Task Throughput : 21B/s

+Record Write Speed : 0rec/s

+Total Records Read : 2

+Total Read/Write Failures : 0

```

diff --git a/docs/ecosystem/kyuubi.md b/docs/ecosystem/kyuubi.md

index 10a2e10..d2c0afa 100644

--- a/docs/ecosystem/kyuubi.md

+++ b/docs/ecosystem/kyuubi.md

@@ -44,7 +44,7 @@

Download Apache Kyuubi from <https://kyuubi.apache.org/zh/releases.html>

-Get Apache Kyuubi 1.6.0 or above and extract it to folder。

+Get Apache Kyuubi 1.6.0 or above and extract it to folder.

### Config Doris as Kyuubi data source

@@ -95,21 +95,22 @@

Execute query statement `select * from demo.expamle_tbl;` with query results returned.

```shell

-0: jdbc:hive2://xxxx:10009/> select * from demo.example_tbl;

-

-2023-03-07 09:29:14.771 INFO org.apache.kyuubi.operation.ExecuteStatement: Processing anonymous's query[bdc59dd0-ceea-4c02-8c3a-23424323f5db]: PENDING_STATE -> RUNNING_STATE, statement:

-select * from demo.example_tbl

-2023-03-07 09:29:14.786 INFO org.apache.kyuubi.operation.ExecuteStatement: Query[bdc59dd0-ceea-4c02-8c3a-23424323f5db] in FINISHED_STATE

-2023-03-07 09:29:14.787 INFO org.apache.kyuubi.operation.ExecuteStatement: Processing anonymous's query[bdc59dd0-ceea-4c02-8c3a-23424323f5db]: RUNNING_STATE -> FINISHED_STATE, time taken: 0.015 seconds

-+----------+-------------+-------+------+------+------------------------+-------+-----------------+-----------------+

-| user_id | date | city | age | sex | last_visit_date | cost | max_dwell_time | min_dwell_time |

-+----------+-------------+-------+------+------+------------------------+-------+-----------------+-----------------+

-| 10000 | 2017-10-01 | 北京 | 20 | 0 | 2017-10-01 07:00:00.0 | 70 | 10 | 2 |

-| 10001 | 2017-10-01 | 北京 | 30 | 1 | 2017-10-01 17:05:45.0 | 4 | 22 | 22 |

-| 10002 | 2017-10-02 | 上海 | 20 | 1 | 2017-10-02 12:59:12.0 | 400 | 5 | 5 |

-| 10003 | 2017-10-02 | 广州 | 32 | 0 | 2017-10-02 11:20:00.0 | 60 | 11 | 11 |

-| 10004 | 2017-10-01 | 深圳 | 35 | 0 | 2017-10-01 10:00:15.0 | 200 | 3 | 3 |

-| 10004 | 2017-10-03 | 深圳 | 35 | 0 | 2017-10-03 10:20:22.0 | 22 | 6 | 6 |

-+----------+-------------+-------+------+------+------------------------+-------+-----------------+-----------------+

+0: jdbc:hive2://xxxx:10009/> select * from demo.example_tbl;

+

+2023-03-07 09:29:14.771 INFO org.apache.kyuubi.operation.ExecuteStatement: Processing anonymous's query[bdc59dd0-ceea-4c02-8c3a-23424323f5db]: PENDING_STATE -> RUNNING_STATE, statement:

+select * from demo.example_tbl

+2023-03-07 09:29:14.786 INFO org.apache.kyuubi.operation.ExecuteStatement: Query[bdc59dd0-ceea-4c02-8c3a-23424323f5db] in FINISHED_STATE

+2023-03-07 09:29:14.787 INFO org.apache.kyuubi.operation.ExecuteStatement: Processing anonymous's query[bdc59dd0-ceea-4c02-8c3a-23424323f5db]: RUNNING_STATE -> FINISHED_STATE, time taken: 0.015 seconds

++----------+-------------+-------+------+------+------------------------+-------+-----------------+-----------------+

+| user_id | date | city | age | sex | last_visit_date | cost | max_dwell_time | min_dwell_time |

++----------+-------------+-------+------+------+------------------------+-------+-----------------+-----------------+

+| 10000 | 2017-10-01 | Beijing | 20 | 0 | 2017-10-01 07:00:00.0 | 70 | 10 | 2 |

+| 10001 | 2017-10-01 | Beijing | 30 | 1 | 2017-10-01 17:05:45.0 | 4 | 22 | 22 |

+| 10002 | 2017-10-02 | Shanghai| 20 | 1 | 2017-10-02 12:59:12.0 | 400 | 5 | 5 |

+| 10003 | 2017-10-02 | Guangzhou| 32 | 0 | 2017-10-02 11:20:00.0 | 60 | 11 | 11 |

+| 10004 | 2017-10-01 | Shenzhen| 35 | 0 | 2017-10-01 10:00:15.0 | 200 | 3 | 3 |

+| 10004 | 2017-10-03 | Shenzhen| 35 | 0 | 2017-10-03 10:20:22.0 | 22 | 6 | 6 |

++----------+-------------+-------+------+------+------------------------+-------+-----------------+-----------------+

6 rows selected (0.068 seconds)

+

```

diff --git a/docs/faq/lakehouse-faq.md b/docs/faq/lakehouse-faq.md

index 088f900..38d253e 100644

--- a/docs/faq/lakehouse-faq.md

+++ b/docs/faq/lakehouse-faq.md

@@ -194,7 +194,7 @@

Doris and hive currently query hudi differently. Doris needs to add partition fields to the avsc file of the hudi table structure. If not added, it will cause Doris to query partition_ Val is empty (even if home. datasource. live_sync. partition_fields=partition_val is set)

- ```

+ ```json

{

"type": "record",

"name": "record",

@@ -210,12 +210,12 @@

{

"name": "name",

"type": "string",

- "doc": "名称"

+ "doc": "Name"

},

{

"name": "create_time",

"type": "string",

- "doc": "创建时间"

+ "doc": "Creation time"

}

]

}

diff --git a/docs/get-starting/what-is-apache-doris.md b/docs/get-starting/what-is-apache-doris.md

index c5da504..a129c89 100644

--- a/docs/get-starting/what-is-apache-doris.md

+++ b/docs/get-starting/what-is-apache-doris.md

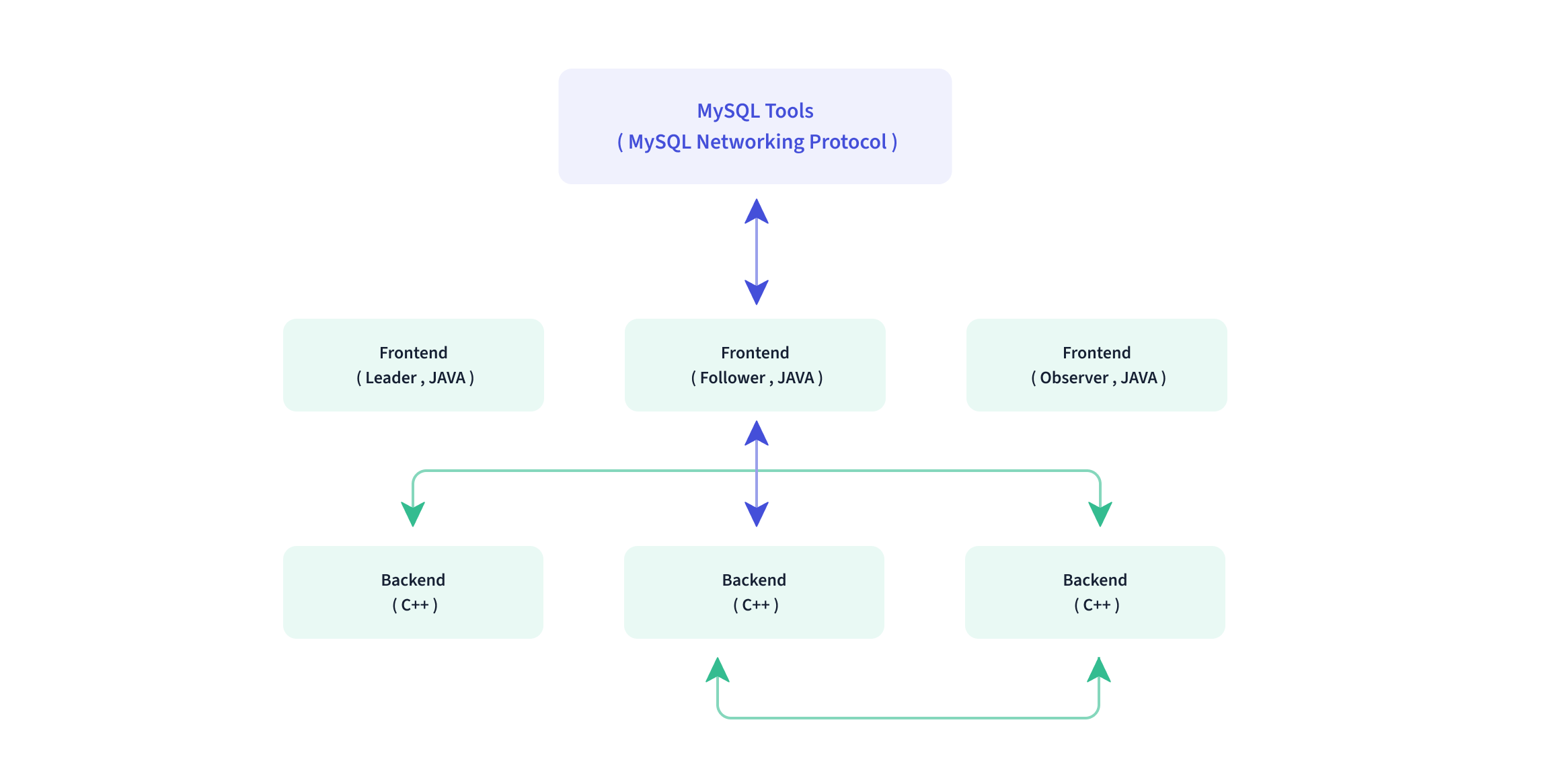

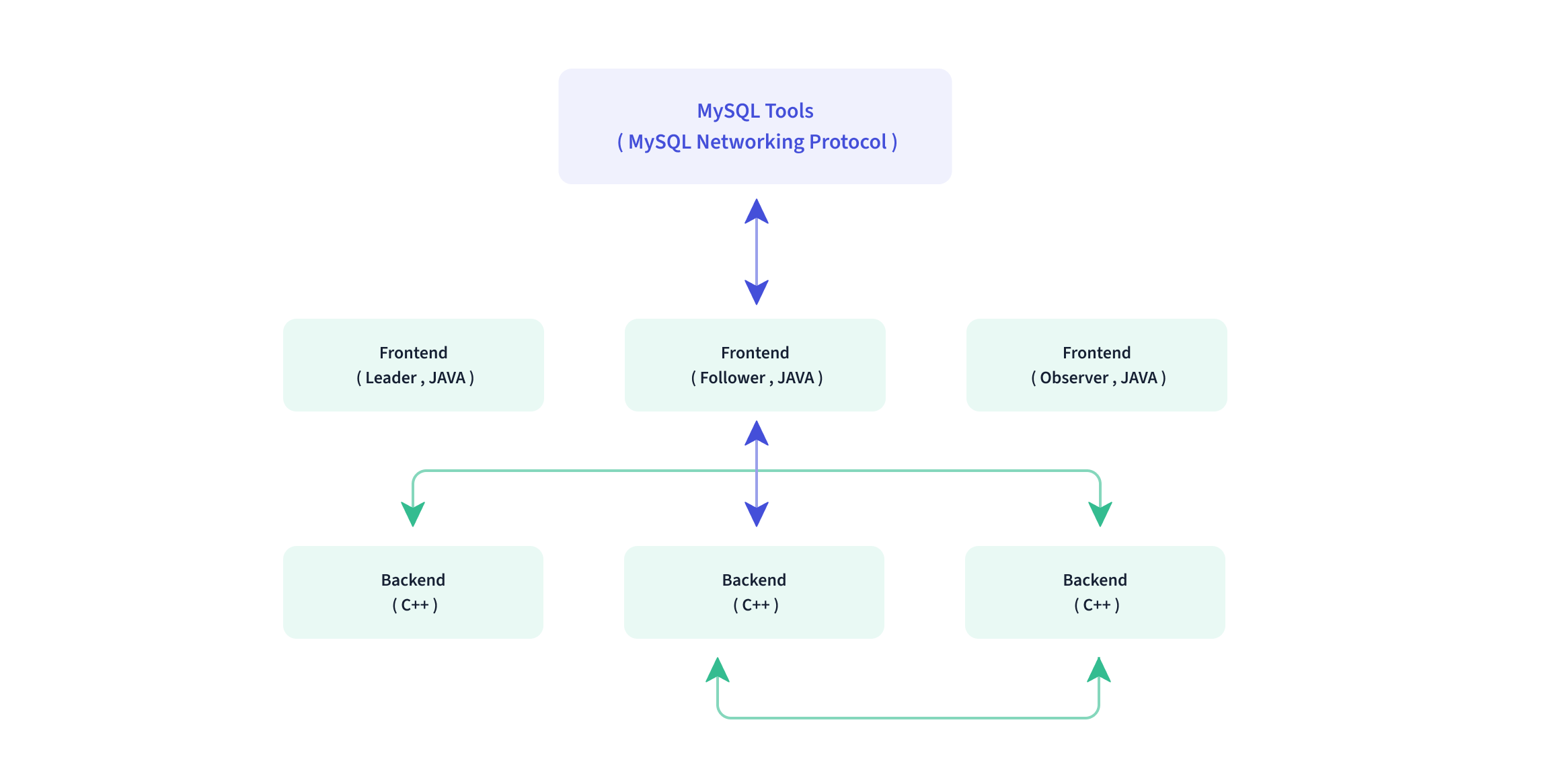

@@ -53,7 +53,7 @@

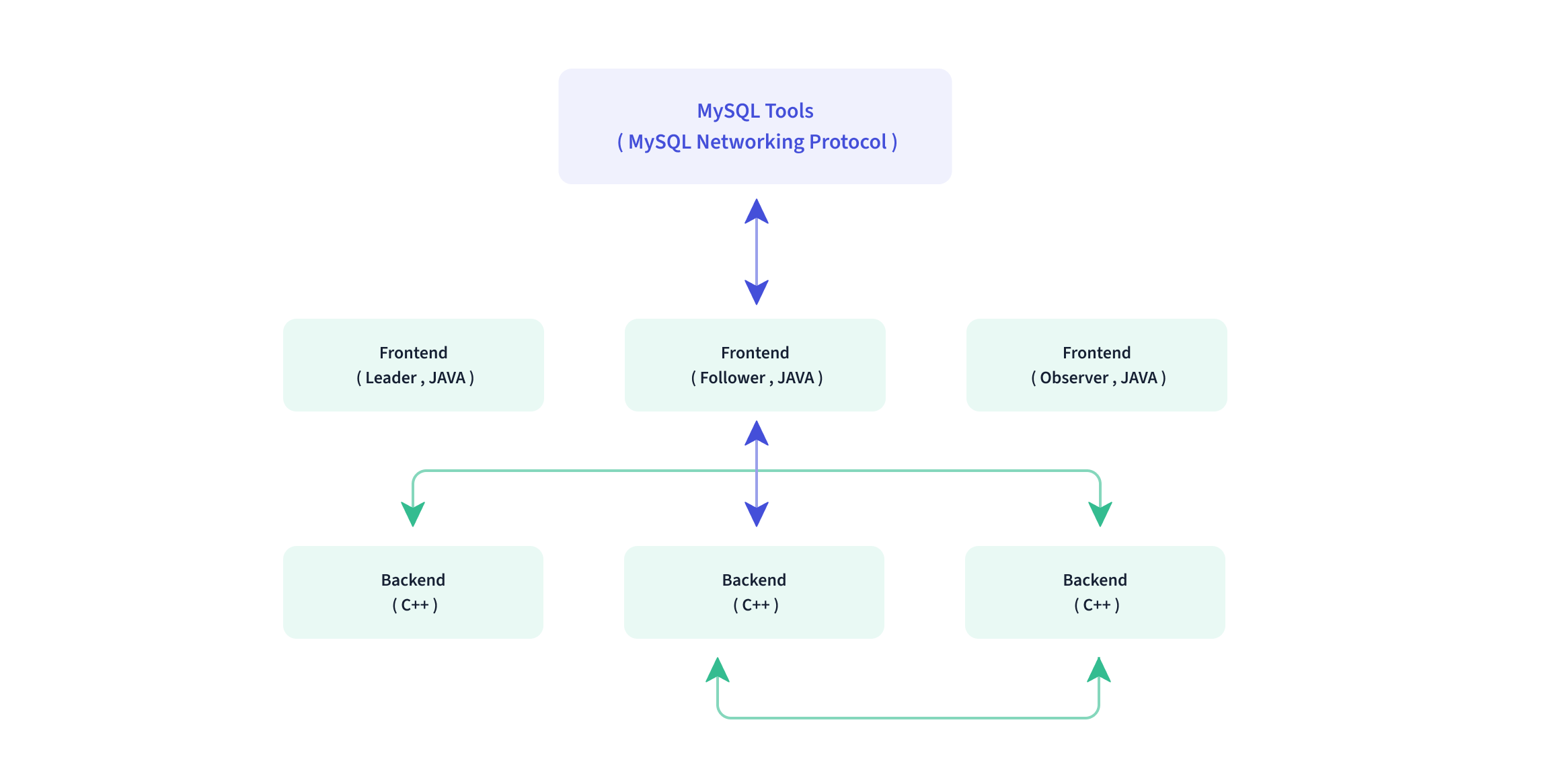

Both frontend and backend processes are scalable, supporting up to hundreds of machines and tens of petabytes of storage capacity in a single cluster. Both types of processes guarantee high service availability and high data reliability through consistency protocols. This highly integrated architecture design greatly reduces the operation and maintenance costs of a distributed system.

-

+

## Interface

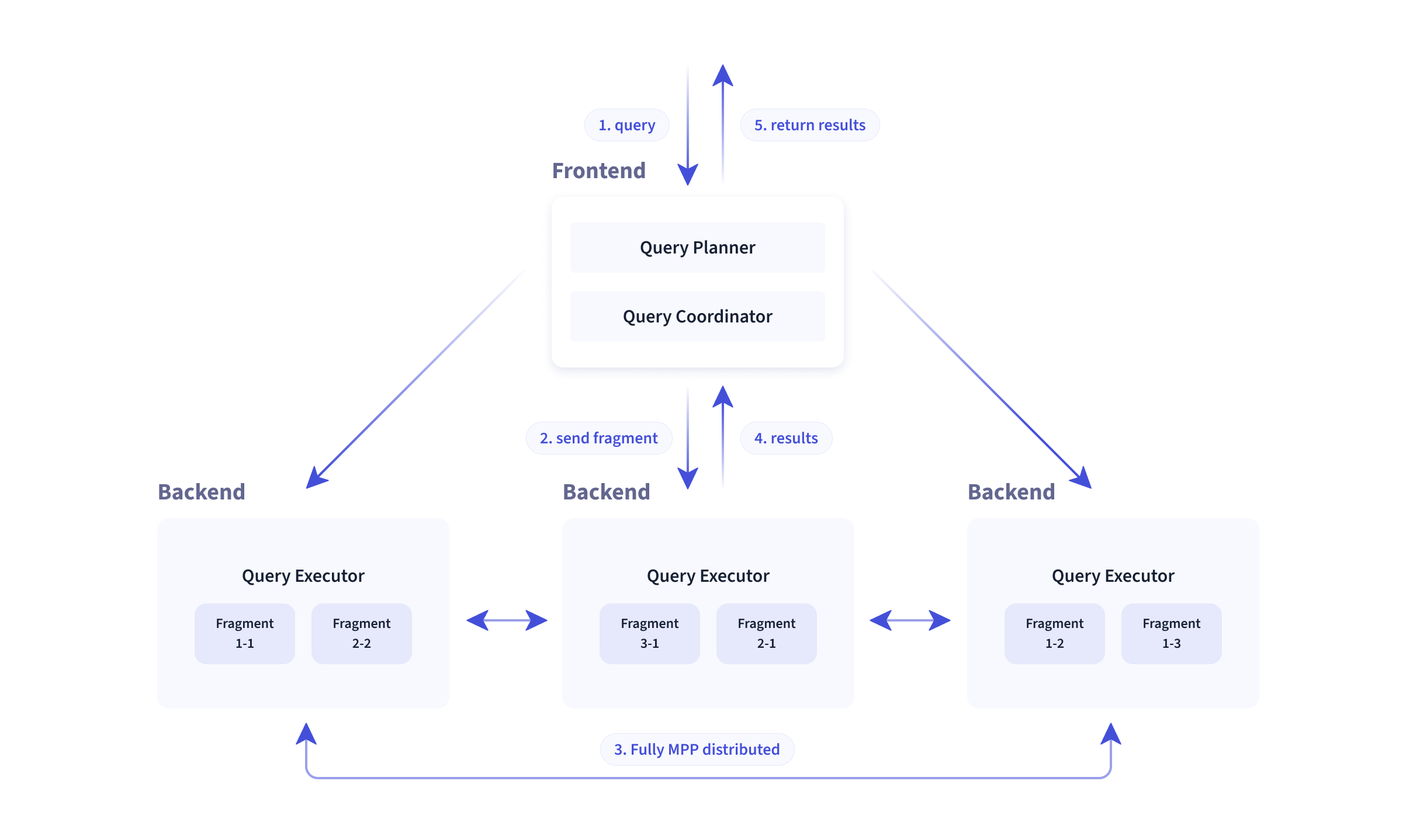

@@ -82,11 +82,11 @@

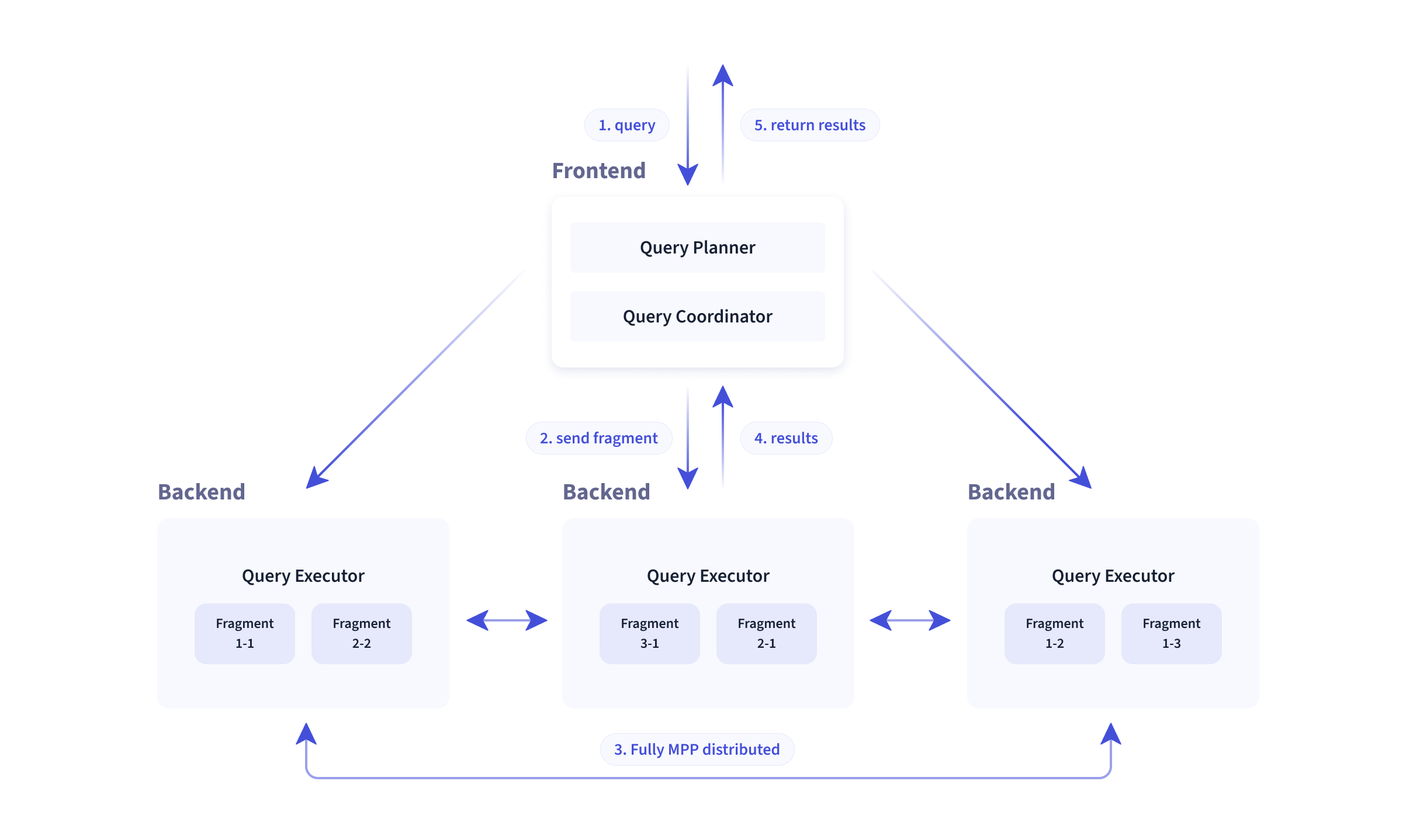

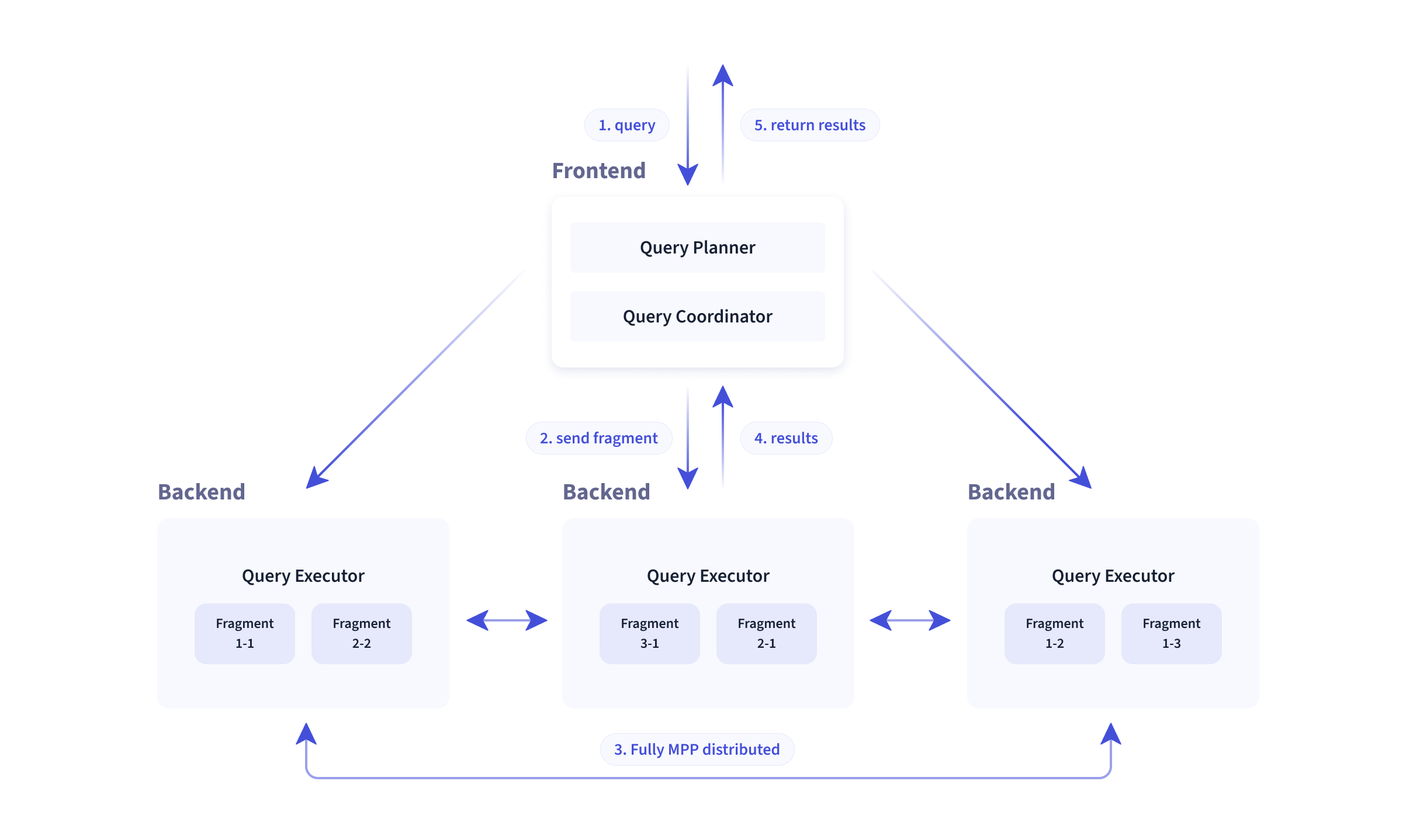

Doris has an MPP-based query engine for parallel execution between and within nodes. It supports distributed shuffle join for large tables to better handle complicated queries.

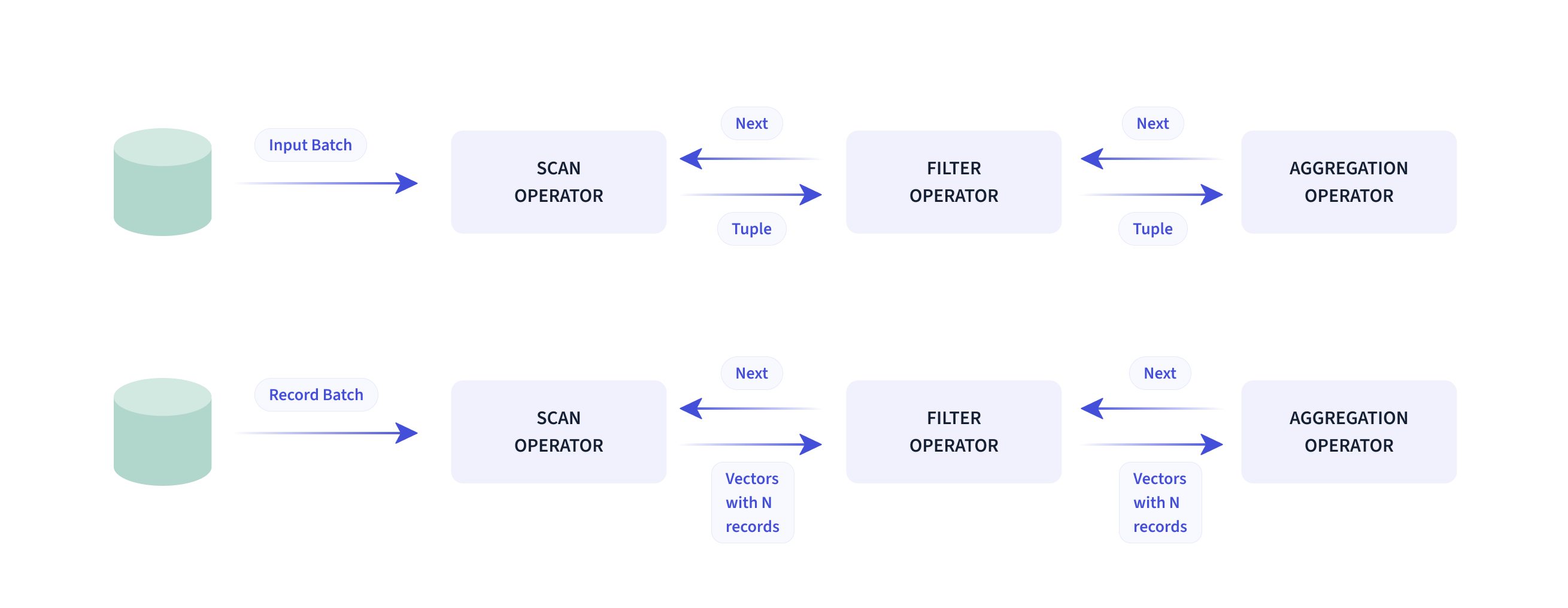

-

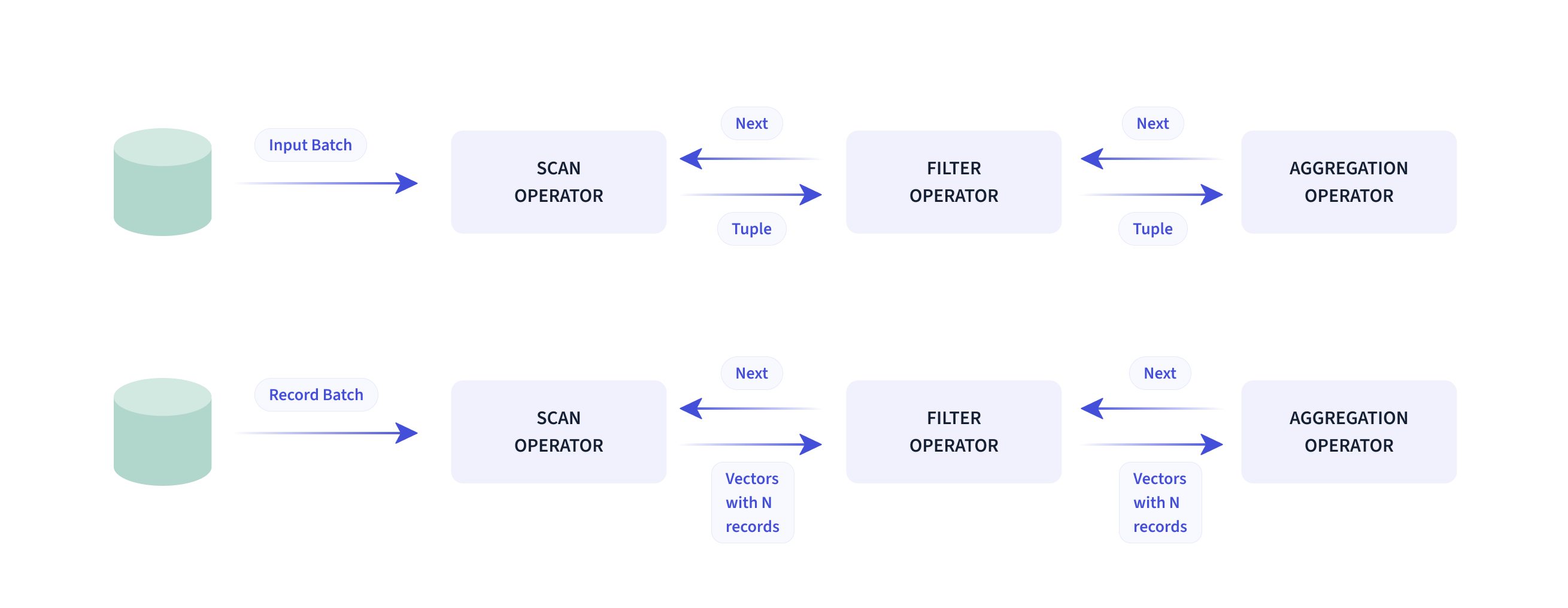

+

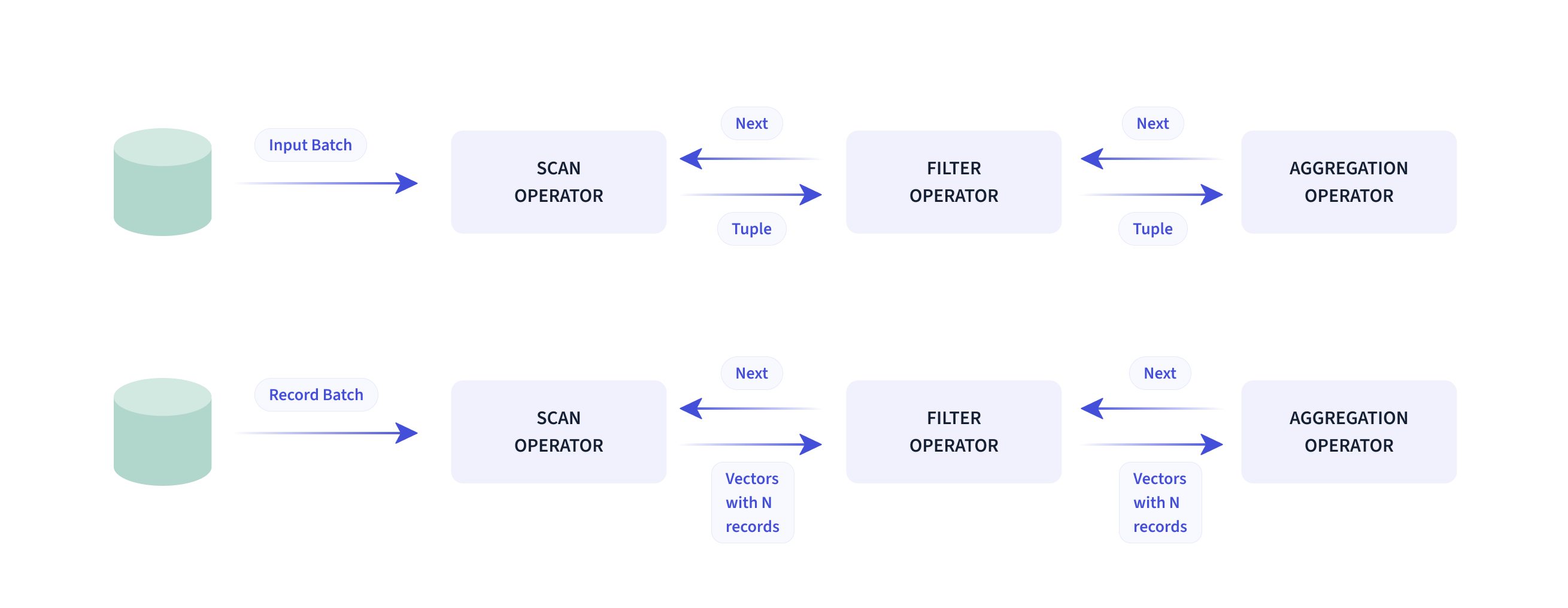

The Doris query engine is fully vectorized, with all memory structures laid out in a columnar format. This can largely reduce virtual function calls, increase cache hit rates, and make efficient use of SIMD instructions. Doris delivers a 5~10 times higher performance in wide table aggregation scenarios than non-vectorized engines.

-

+

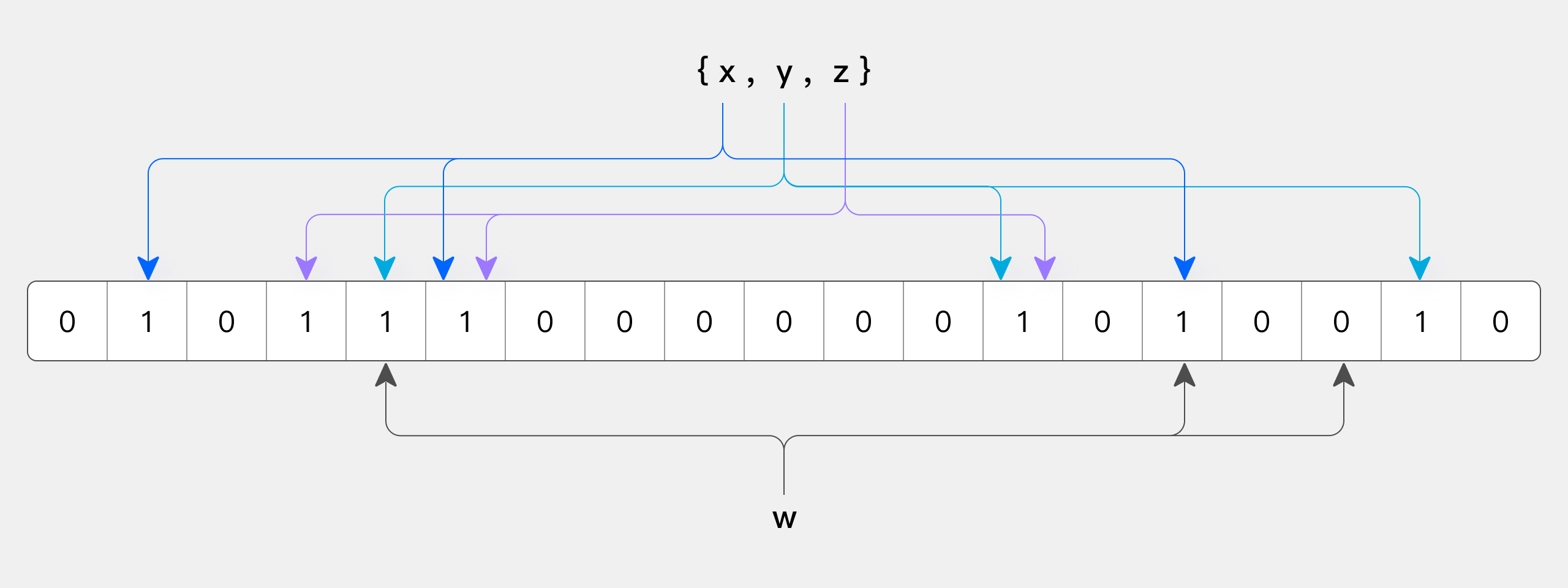

Doris uses **adaptive query execution** technology to dynamically adjust the execution plan based on runtime statistics. For example, it can generate a runtime filter and push it to the probe side. Specifically, it pushes the filters to the lowest-level scan node on the probe side, which largely reduces the data amount to be processed and increases join performance. The Doris runtime filter supports In/Min/Max/Bloom Filter.

diff --git a/docs/install/cluster-deployment/k8s-deploy/debug-crash.md b/docs/install/cluster-deployment/k8s-deploy/debug-crash.md

index 5e8de55..2c96041 100644

--- a/docs/install/cluster-deployment/k8s-deploy/debug-crash.md

+++ b/docs/install/cluster-deployment/k8s-deploy/debug-crash.md

@@ -64,7 +64,7 @@

-## 注意事项

+## Notes

**After entering the pod, you need to modify the port information of the configuration file before you can manually start the corresponding Doris component.**

diff --git a/docs/lakehouse/lakehouse-overview.md b/docs/lakehouse/lakehouse-overview.md

index 0dfbec5..5644bd6 100644

--- a/docs/lakehouse/lakehouse-overview.md

+++ b/docs/lakehouse/lakehouse-overview.md

@@ -27,7 +27,9 @@

Before the integration of data lake and data warehouse, the history of data analysis went through three eras: database, data warehouse, and data lake analytics.

- Database, the fundamental concept, was primarily responsible for online transaction processing and providing basic data analysis capabilities.

+

- As data volumes grew, data warehouses emerged. They store valuable data that has been cleansed, processed, and modeled, providing analytics capabilities for business.

+

- The advent of data lakes was to serve the needs of enterprises for storing, managing, and reprocessing raw data. They required low-cost storage for structured, semi-structured, and even unstructured data, and they also needed an integrated solution encompassing data processing, data management, and data governance.

Data warehouses addresses the need for fast data analysis, while data lakes are good at data storage and management. The integration of them, known as "lakehouse", is to facilitate the seamless integration and free flow of data between the data lake and data warehouse. It enables users to leverage the analytic capabilities of the data warehouse while harnessing the data management power of the data lake.

@@ -37,8 +39,12 @@

We design the Doris lakehouse solution for the following four applicable scenarios:

- Lakehouse query acceleration: As a highly efficient OLAP query engine, Doris has excellent MPP-based vectorized distributed query capabilities. Data lake analysis with Doris will benefit from the efficient query engine.

+

- Unified data analysis gateway: Doris provides data query and writing capabilities for various and heterogeneous data sources. Users can unify these external data sources onto Doris' data mapping structure, allowing for a consistent query experience when accessing external data sources through Doris.

+

- Unified data integration: Doris, through its data lake integration capabilities, enables incremental or full data synchronization from multiple data sources to Doris. Doris processes the synchronized data and makes it available for querying. The processed data can also be exported to downstream systems as full or incremental data services. Using Doris, you can have less reliance on external tools and enable end-to-end connectivity from ata synchronization to data processing.

+

+

- More open data platform: Many data warehouses have their own storage formats. They require external data to be imported into themselve before the data queryable. This creates a closed ecosystem where data in the data warehouse can only be accessed by the data warehouse itself. In this case, users might concern if data will be locked into a specific data warehouse or wonder if there are any other easy way for data export. The Doris lakehouse solution provides open data formats, such as Parquet/ORC, to allow data access by various external systems. Additionally, just like Iceberg and Hudi providing open metadata management capabilities, metadata, whether stored in Doris, Hive Metastore, or other unified metadata centers, can be accessed through publicly available APIs. An open data ecosystem makes it easy for enterprises to migrate to new data management systems and reduces the costs and risks in this process.

## Doris-based data lakehouse architecture

@@ -50,8 +56,11 @@

**Data access steps:**

1. Create metadata mapping: Apache Doris fetches metadata via Catalog and caches it for metadata management. For metadata mapping, it supports JDBC username-password authentication, Kerberos/Ranger-based authentication, and KMS-based data encryption.

+

2. Launch query request: When the user launches a query request from the Doris frontend (FE), Doris generates a query plan based on its cached metadata. Then, it utilizes the Native Reader to fetch data from external storage (HDFS, S3) for data computation and analysis. During query execution, it caches the hot data to prepare for similar queries in the future.

+

3. Return result: When a query is finished, it returns the query result on the frontend.

+

4. Write result to data lake: For users who need to write the result back to the data lake instead of returning it on the frontend, Doris supports result writeback in CSV, Parquet, and ORC formats via the export method to the data lake.

## Core technologies

@@ -63,6 +72,7 @@

The data connection framework in Apache Doris includes metadata connection and data reading.

- Metadata connection: Metadata connection is conducted in the frontend of Doris. The MetaData Manager in the frontend can access and manage metadata from Hive Metastore, JDBC, and data files.

+

- Data reading: Apache Doris has a NativeReader for efficient data reading from HDFS and object storage. It supports Parquet, ORC, and text data. You can also connect Apache Doris to the Java big data ecosystem via its JNI Connector.

@@ -80,7 +90,9 @@

**Data caching**

- File caching: Apache Doris caches hot data from the data lake onto its local disks to reduce data transfer via the network and increase data access efficiency.

+

- Cache distribution: Apache Doris distributes the cached data across all backend nodes via consistent hashing to avoid cache expiration caused by cluster scaling.

+

- Cache eviction(update): When Apache Doris detects changes in the metadata of a data file, it promptly updates its cached data to ensure data consistency.

@@ -88,6 +100,7 @@

**Query result caching & partition caching**

- Query result caching: Apache Doris caches the results of previous SQL queries, so it can reuse them when similar queries are launched. It will read the corresponding result from the cache directly and return it to the client. This increases query efficiency and concurrency.

+

- Partition caching: Apache Doris allows you to cache part of your data partitions in the backend to increase query efficiency. For example, if you need the data from the past 7 days (counting today), you can cache the data from the previous 6 days and merge it with today's data. This can largely reduce real-time computation burden and increase speed.

@@ -95,6 +108,7 @@

### Native Reader

- Self-developed Native Reader to avoid data conversion: Apache Doris has its own columnar storage format, which is different from Parquet and ORC. To avoid overheads caused by data format conversion, we have built our own Native Reader for Parquet and ORC files.

+

- Lazy materialization: The Native Reader can utilize indexes and filters to improve data reading. For example, when the user needs to do filtering based on the ID column, what the Native Reader does is to read and filter the ID column, take note of the relevant row numbers, and then read the corresponding rows of the other columns based on the row numbers recorded. This reduces data scanning and speeds up data reading.

@@ -134,10 +148,15 @@

With Multi-Catalog, Doris now has a new three-tiered metadata hierarchy (catalog -> database -> table), which means users can connect to external data at the catalog level directly. Currently it supports external catalogs including:

- Apache Hive

+

- Apache Iceberg

+

- Apache Hudi

+

- Elasticsearch

+

- JDBC

+

- Apache Paimon(Incubating)

Multi-Catalog works as an additional and enhanced external table connection method. It helps users conduct multi-catalog federated queries quickly.

@@ -188,8 +207,9 @@

Syntax help: [CREATE CATALOG](../sql-manual/sql-statements/Data-Definition-Statements/Create/CREATE-CATALOG/)

-1. View Catalog

-2. View existing Catalogs via the `SHOW CATALOGS` command:

+2. View Catalog

+

+3. View existing Catalogs via the `SHOW CATALOGS` command:

```Plain

mysql> SHOW CATALOGS;

@@ -202,10 +222,12 @@

```

- [SHOW CATALOGS](../sql-manual/sql-statements/Show-Statements/SHOW-CATALOGS/)

+

- You can view the CREATE CATALOG statement via [SHOW CREATE CATALOG](../sql-manual/sql-statements/Show-Statements/SHOW-CREATE-CATALOG).

+

- You can modify the Catalog PROPERTIES via [ALTER CATALOG](../sql-manual/sql-statements/Data-Definition-Statements/Alter/ALTER-CATALOG).

-1. Switch Catalog

+4. Switch Catalog

Switch to the Hive Catalog using the `SWITCH` command, and view the databases in it:

@@ -228,7 +250,7 @@

Syntax help: [SWITCH](../sql-manual/sql-statements/Utility-Statements/SWITCH/)

-1. Use the Catalog

+5. Use the Catalog

After switching to the Hive Catalog, you can use the relevant features.

@@ -320,7 +342,9 @@

```

- The table is identified in the format of `catalog.database.table`. For example, `internal.db1.part` in the above snippet.

+

- If the target table is in the current Database of the current Catalog, `catalog` and `database` in the format can be omitted.

+

- You can use the `INSERT INTO` command to insert table data from the Hive Catalog into a table in the Internal Catalog. This is how you can import data from External Catalogs to the Internal Catalog:

```Plain

@@ -384,16 +408,18 @@

Users can refresh metadata in the following ways.

-#### Manual refresh

+**Manual refresh**

Users need to manually refresh the metadata through the [REFRESH](../sql-manual/sql-statements/Utility-Statements/REFRESH) command.

-#### Regular refresh

+**Regular refresh**

When creating the catalog, specify the refresh time parameter `metadata_refresh_interval_sec` in the properties. It is measured in seconds. If this is set when creating the catalog, the FE master node will refresh the catalog regularly accordingly. Currently, Doris supports regular refresh for three types of catalogs:

- hms: Hive MetaStore

+

- es: Elasticsearch

+

- Jdbc: standard interface for database access (JDBC)

```Plain

@@ -405,6 +431,6 @@

);

```

-#### Auto Refresh

+**Auto Refresh**

Currently Doris supports auto-refresh for [Hive](../lakehouse/datalake-analytics/hive) Catalog.

diff --git a/docs/query/duplicate/using-hll.md b/docs/query/duplicate/using-hll.md

index 2f98f52..2d91aa7 100644

--- a/docs/query/duplicate/using-hll.md

+++ b/docs/query/duplicate/using-hll.md

@@ -113,17 +113,17 @@

-H "columns:dt,id,name,province,os, pv=hll_hash(id)" -T test_hll.csv http://fe_IP:8030/api/demo/test_hll/_stream_load

```

- The sample data is as follows(test_hll.csv):

+ The sample data is as follows (test_hll.csv):

- ```

- 2022-05-05,10001,测试01,北京,windows

- 2022-05-05,10002,测试01,北京,linux

- 2022-05-05,10003,测试01,北京,macos

- 2022-05-05,10004,测试01,河北,windows

- 2022-05-06,10001,测试01,上海,windows

- 2022-05-06,10002,测试01,上海,linux

- 2022-05-06,10003,测试01,江苏,macos

- 2022-05-06,10004,测试01,陕西,windows

+ ```text

+ 2022-05-05,10001,Testing01,Beijing,Windows

+ 2022-05-05,10002,Testing01,Beijing,Linux

+ 2022-05-05,10003,Testing01,Beijing,MacOS

+ 2022-05-05,10004,Testing01,Hebei,Windows

+ 2022-05-06,10001,Testing01,Shanghai,Windows

+ 2022-05-06,10002,Testing01,Shanghai,Linux

+ 2022-05-06,10003,Testing01,Jiangsu,MacOS

+ 2022-05-06,10004,Testing01,Shaanxi,Windows

```

The import result is as follows:

diff --git a/docs/query/join-optimization/bucket-shuffle-join.md b/docs/query/join-optimization/bucket-shuffle-join.md

index 4a1775d..7f452b7 100644

--- a/docs/query/join-optimization/bucket-shuffle-join.md

+++ b/docs/query/join-optimization/bucket-shuffle-join.md

@@ -40,19 +40,19 @@

## Principle

The conventional distributed join methods supported by Doris is: `Shuffle Join, Broadcast Join`. Both of these join will lead to some network overhead.

-For example, there are join queries for table A and table B. the join method is hashjoin. The cost of different join types is as follows:

+For example, there are join queries for table A and table B. the join method is hashjoin. The cost of different join types is as follows:

* **Broadcast Join**: If table a has three executing hashjoinnodes according to the data distribution, table B needs to be sent to the three HashJoinNode. Its network overhead is `3B `, and its memory overhead is `3B`.

* **Shuffle Join**: Shuffle join will distribute the data of tables A and B to the nodes of the cluster according to hash calculation, so its network overhead is `A + B` and memory overhead is `B`.

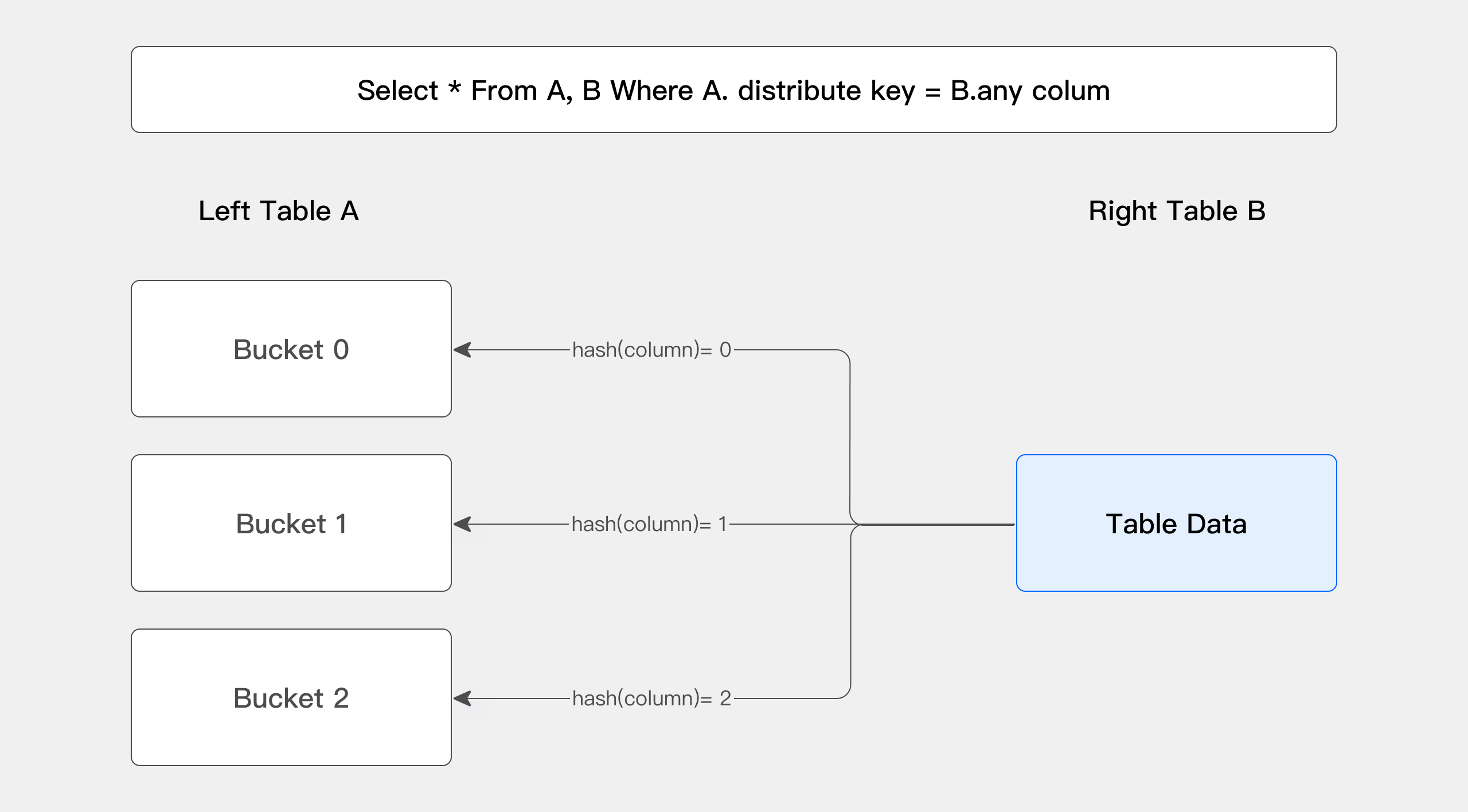

The data distribution information of each Doris table is saved in FE. If the join statement hits the data distribution column of the left table, we should use the data distribution information to reduce the network and memory overhead of the join query. This is the source of the idea of bucket shuffle join.

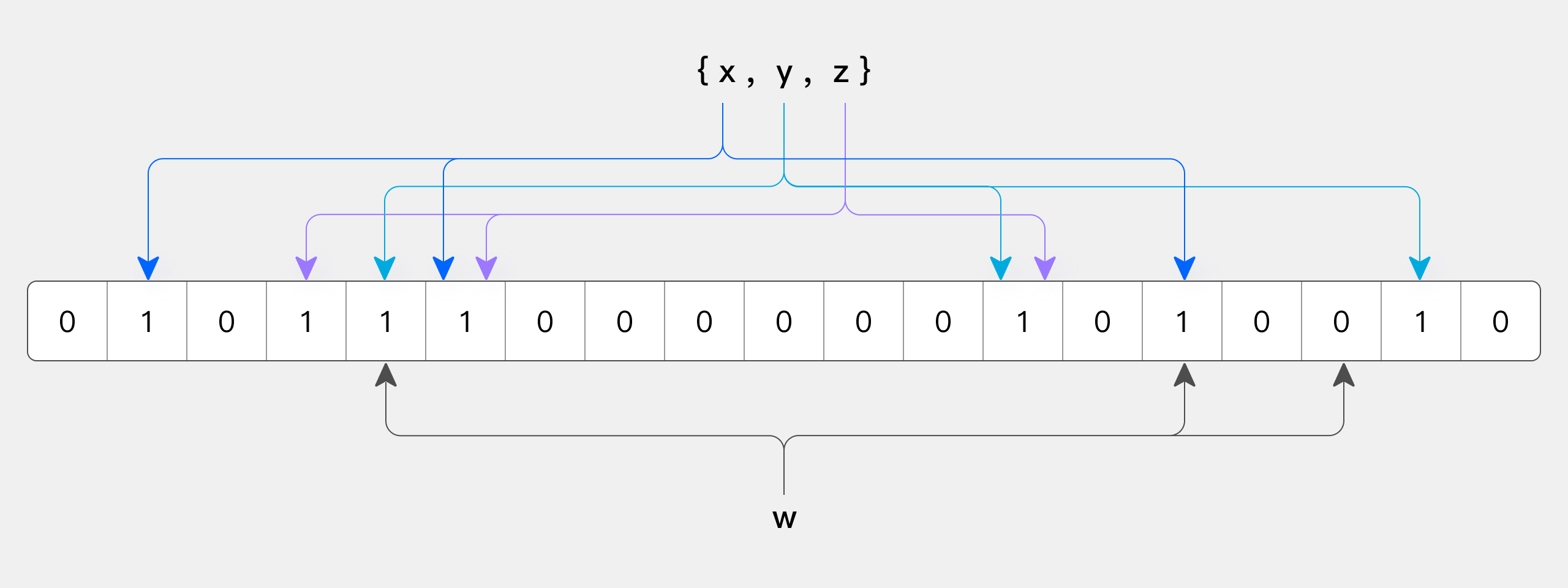

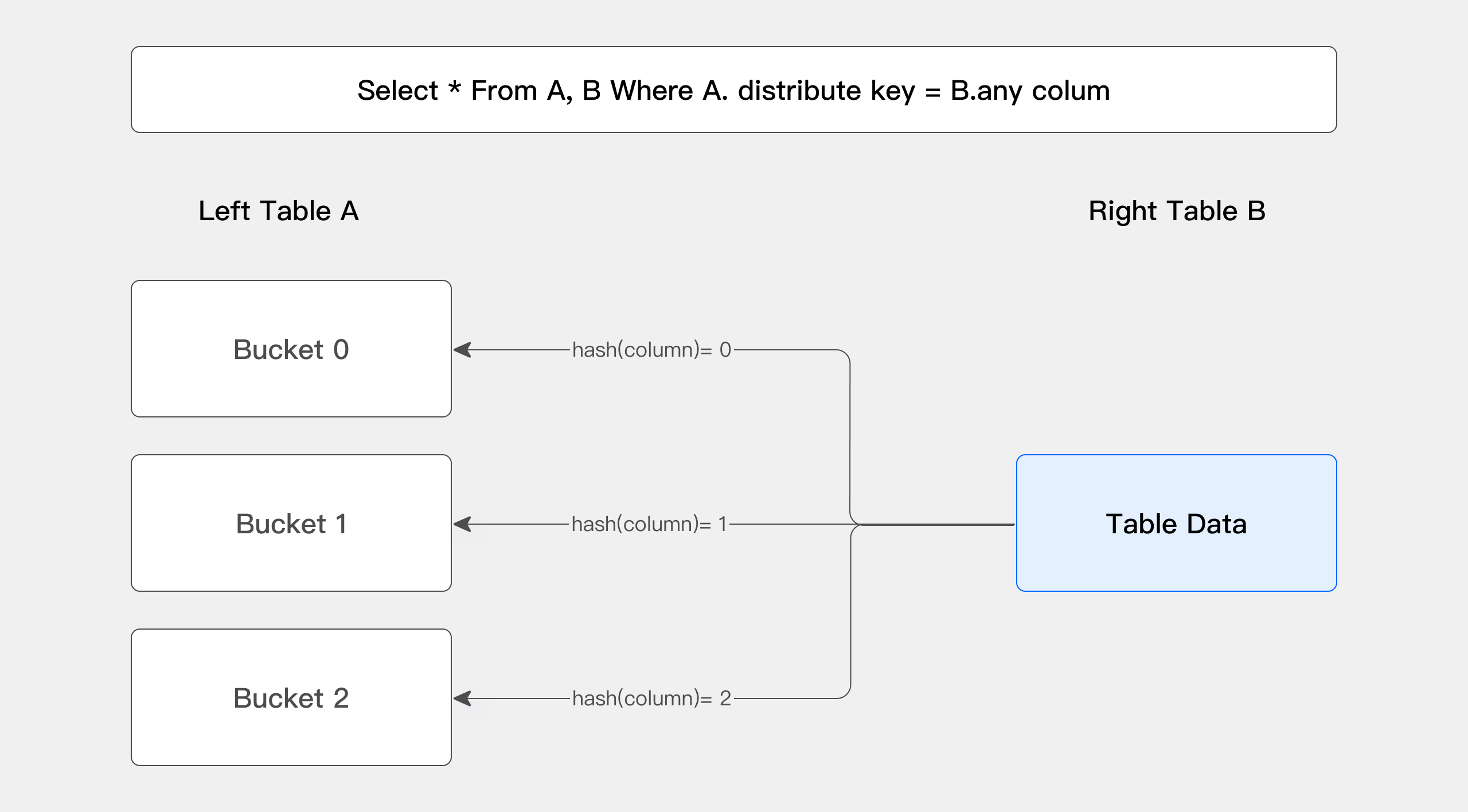

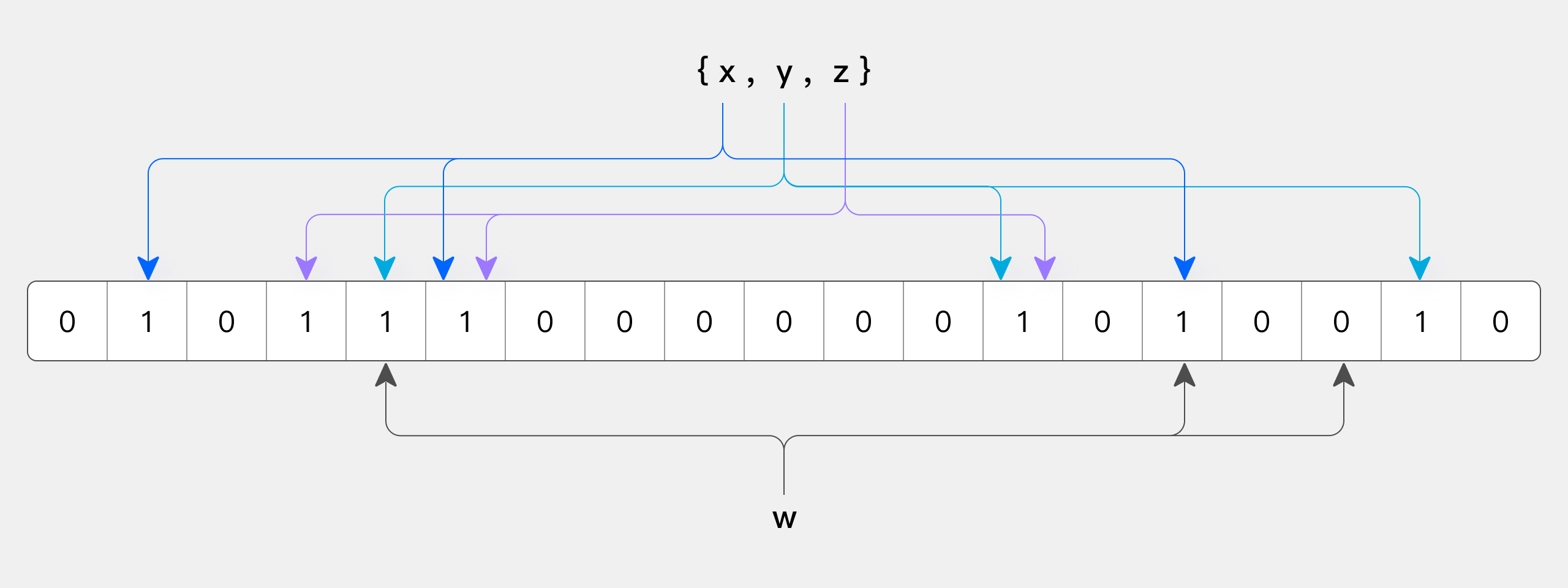

-

+

The picture above shows how the Bucket Shuffle Join works. The SQL query is A table join B table. The equivalent expression of join hits the data distribution column of A. According to the data distribution information of table A. Bucket Shuffle Join sends the data of table B to the corresponding data storage and calculation node of table A. The cost of Bucket Shuffle Join is as follows:

-* network cost: ``` B < min(3B, A + B) ```

+* network cost: ``` B < min(3B, A + B) ```

-* memory cost: ``` B <= min(3B, B) ```

+* memory cost: ``` B <= min(3B, B) ```

Therefore, compared with Broadcast Join and Shuffle Join, Bucket shuffle join has obvious performance advantages. It reduces the time-consuming of data transmission between nodes and the memory cost of join. Compared with Doris's original join method, it has the following advantages

@@ -91,7 +91,7 @@

| | equal join conjunct: `test`.`k1` = `baseall`.`k1`

```

-The join type indicates that the join method to be used is:`BUCKET_SHUFFLE`。

+The join type indicates that the join method to be used is:`BUCKET_SHUFFLE`。

## Planning rules of Bucket Shuffle Join

diff --git a/docs/query/join-optimization/doris-join-optimization.md b/docs/query/join-optimization/doris-join-optimization.md

index 2b7d461..12e8def 100644

--- a/docs/query/join-optimization/doris-join-optimization.md

+++ b/docs/query/join-optimization/doris-join-optimization.md

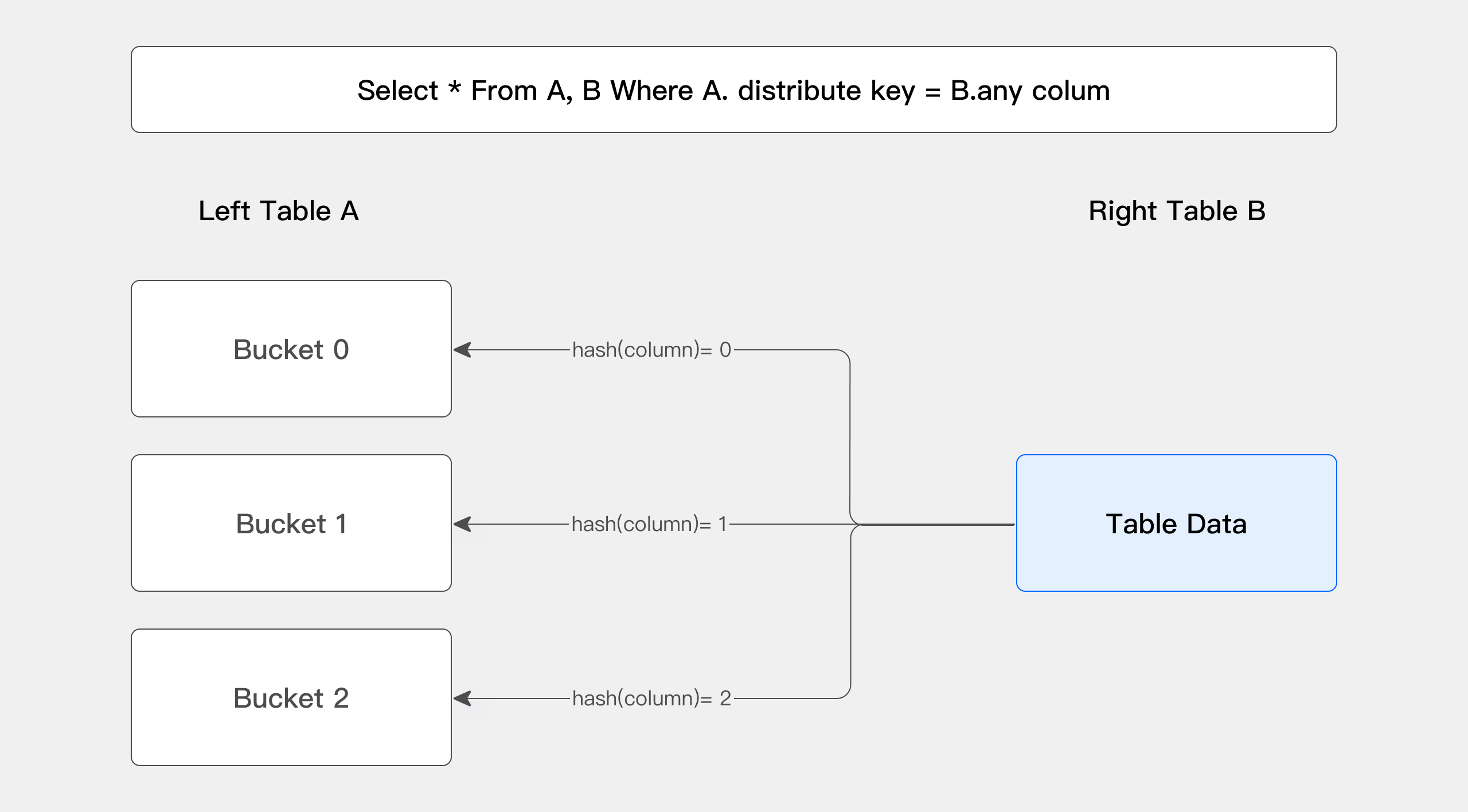

@@ -32,15 +32,15 @@

## Doris Shuffle way

-1. Doris supports 4 Shuffle methods

+Doris supports 4 Shuffle methods

- 1. BroadCast Join

+1. BroadCast Join

- It requires the full data of the right table to be sent to the left table, that is, each node participating in Join has the full data of the right table, that is, T(R).

+ It requires the full data of the right table to be sent to the left table, that is, each node participating in Join has the full data of the right table, that is, T(R).

- Its applicable scenarios are more general, and it can support Hash Join and Nest loop Join at the same time, and its network overhead is N \* T(R).

+ Its applicable scenarios are more general, and it can support Hash Join and Nest loop Join at the same time, and its network overhead is N \* T(R).

-

+

The data in the left table is not moved, and the data in the right table is sent to the scanning node of the data in the left table.

@@ -50,7 +50,7 @@

Its network overhead is: T(S) + T(R), but it can only support Hash Join, because it also calculates buckets according to the conditions of Join.

-

+

The left and right table data are sent to different partition nodes according to the partition, and the calculated demerits are sent.

@@ -60,7 +60,7 @@

Its network overhead is: T(R) is equivalent to only Shuffle the data in the right table.

-

+

The data in the left table does not move, and the data in the right table is sent to the node that scans the table in the left table according to the result of the partition calculation.

@@ -68,7 +68,7 @@

It is similar to Bucket Shuffle Join, which means that the data has been shuffled according to the preset Join column scenario when data is imported. Then the join calculation can be performed directly without considering the Shuffle problem of the data during the actual query.

-

+

The data has been pre-partitioned, and the Join calculation is performed directly locally

@@ -96,12 +96,15 @@

Currently Doris supports three types of RuntimeFilter

- One is IN-IN, which is well understood, and pushes a hashset down to the data scanning node.

+

- The second is BloomFilter, which uses the data of the hash table to construct a BloomFilter, and then pushes the BloomFilter down to the scanning node that queries the data. .

+

- The last one is MinMax, which is a Range range. After the Range range is determined by the data in the right table, it is pushed down to the data scanning node.

There are two requirements for the applicable scenarios of Runtime Filter:

- The first requirement is that the right table is large and the left table is small, because building a Runtime Filter needs to bear the computational cost, including some memory overhead.

+

- The second requirement is that there are few results from the join of the left and right tables, indicating that this join can filter out most of the data in the left table.

When the above two conditions are met, turning on the Runtime Filter can achieve better results

@@ -112,31 +115,39 @@

### Runtime Filter Type

-- Doris provides three different Runtime Filter types:

- - The advantage of **IN** is that the effect filtering effect is obvious and fast. Its shortcomings are: First, it only applies to BroadCast. Second, when the right table exceeds a certain amount of data, it will fail. The current Doris configuration is 1024, that is, if the right table is larger than 1024, the Runtime Filter of IN will directly failed.

- - The advantage of **MinMax** is that the overhead is relatively small. Its disadvantage is that it has a relatively good effect on numeric columns, but basically no effect on non-numeric columns.

- - The feature of **Bloom Filter** is that it is universal, suitable for various types, and the effect is better. The disadvantage is that its configuration is more complicated and the calculation is high.

+Doris provides three different Runtime Filter types:

+- The advantage of **IN** is that the effect filtering effect is obvious and fast. Its shortcomings are: First, it only applies to BroadCast. Second, when the right table exceeds a certain amount of data, it will fail. The current Doris configuration is 1024, that is, if the right table is larger than 1024, the Runtime Filter of IN will directly failed.

-## Join Reader

+- The advantage of **MinMax** is that the overhead is relatively small. Its disadvantage is that it has a relatively good effect on numeric columns, but basically no effect on non-numeric columns.

+

+- The feature of **Bloom Filter** is that it is universal, suitable for various types, and the effect is better. The disadvantage is that its configuration is more complicated and the calculation is high.

+

+## Join Reorder

Once the database involves multi-table Join, the order of Join has a great impact on the performance of the entire Join query. Assuming that there are three tables to join, refer to the following picture, the left is the a table and the b table to do the join first, the intermediate result has 2000 rows, and then the c table is joined.

Next, look at the picture on the right and adjust the order of Join. Join the a table with the c table first, the intermediate result generated is only 100, and then finally join with the b table for calculation. The final join result is the same, but the intermediate result it generates has a 20x difference, which results in a big performance diff.

-

+

-- Doris currently supports the rule-based Join Reorder algorithm. Its logic is:

- - Make joins with large tables and small tables as much as possible, and the intermediate results it generates are as small as possible.

- - Put the conditional join table forward, that is to say, try to filter the conditional join table

- - Hash Join has higher priority than Nest Loop Join, because Hash Join itself is much faster than Nest Loop Join.

+Doris currently supports the rule-based Join Reorder algorithm. Its logic is:

+

+- Make joins with large tables and small tables as much as possible, and the intermediate results it generates are as small as possible.

+

+- Put the conditional join table forward, that is to say, try to filter the conditional join table

+

+- Hash Join has higher priority than Nest Loop Join, because Hash Join itself is much faster than Nest Loop Join.

## Doris Join optimization method

Doris Join tuning method:

- Use the Profile provided by Doris itself to locate the bottleneck of the query. Profile records various information in Doris' entire query, which is first-hand information for performance tuning. .

+

- Understand the Join mechanism of Doris, which is also the content shared with you in the second part. Only by knowing why and understanding its mechanism can we analyze why it is slow.

+

- Use Session variables to change some behaviors of Join, so as to realize the tuning of Join.

+

- Check the Query Plan to analyze whether this tuning is effective.

The above 4 steps basically complete a standard Join tuning process, and then it is to actually query and verify it to see what the effect is.

@@ -209,7 +220,11 @@

Finally, we summarize four suggestions for optimization and tuning of Doris Join:

- The first point: When doing Join, try to select columns of the same type or simple type. If the same type is used, reduce its data cast, and the simple type itself joins the calculation quickly.

+

- The second point: try to choose the Key column for Join. The reason is also introduced in the Runtime Filter. The Key column can play a better effect on delayed materialization.

+

- The third point: Join between large tables, try to make it Co-location, because the network overhead between large tables is very large, if you need to do Shuffle, the cost is very high.

+

- Fourth point: Use Runtime Filter reasonably, which is very effective in scenarios with high join filtering rate. But it is not a panacea, but has certain side effects, so it needs to be switched according to the granularity of specific SQL.

+

- Finally: When it comes to multi-table Join, it is necessary to judge the rationality of Join. Try to ensure that the left table is a large table and the right table is a small table, and then Hash Join will be better than Nest Loop Join. If necessary, you can use SQL Rewrite to adjust the order of Join using Hint.

diff --git a/docs/query/nereids/nereids.md b/docs/query/nereids/nereids.md

index 2e09e30..9895f1a 100644

--- a/docs/query/nereids/nereids.md

+++ b/docs/query/nereids/nereids.md

@@ -24,9 +24,7 @@

under the License.

-->

-# Nereids-the Brand New Planner

-<version since="dev"></version>

## R&D background

@@ -42,13 +40,13 @@

TPC-H SF100 query speed comparison. The environment is 3BE, the new optimizer uses the original SQL, and the statistical information is collected before executing the SQL. Old optimizers use hand-tuned SQL. It can be seen that the new optimizer does not need to manually optimize the query, and the overall query time is similar to that of the old optimizer after manual optimization.

-

+

-### more robust

+### More robust

All optimization rules of the new optimizer are completed on the logical execution plan tree. After the query syntax and semantic analysis is completed, it will be transformed into a tree structure. Compared with the internal data structure of the old optimizer, it is more reasonable and unified. Taking subquery processing as an example, the new optimizer is based on a new data structure, which avoids separate processing of subqueries by many rules in the old optimizer. In turn, the possibility of logic errors in optimization rules is reduced.

-### more flexible

+### More flexible

The architectural design of the new optimizer is more reasonable and modern. Optimization rules and processing stages can be easily extended. Can more quickly respond to user needs.

@@ -70,17 +68,24 @@

## Known issues and temporarily unsupported features

-### temporarily unsupported features

+### Temporarily unsupported features

-> If automatic fallback is enabled, it will automatically fall back to the old optimizer execution

+:::info Note

+If automatic fallback is enabled, it will automatically fall back to the old optimizer execution

+:::

-- Json、Array、Map and Struct types: The table in the query contains the above types, or the expressions in the query outputs the above types

+- Json, Array, Map and Struct types: The table in the query contains the above types, or the expressions in the query outputs the above types

+

- DML: Only support below DML statements: Insert Into Select, Update and Delete

+

- Matrialized view with predicates

+

- Function alias

+

- Java UDF and HDFS UDF

+

- High concurrent point query optimize

-### known issues

+### Known issues

- Cannot use partition cache to accelarate query

diff --git a/docs/query/udf/java-user-defined-function.md b/docs/query/udf/java-user-defined-function.md

index f1faffc..7108769 100644

--- a/docs/query/udf/java-user-defined-function.md

+++ b/docs/query/udf/java-user-defined-function.md

@@ -143,7 +143,7 @@

When writing a UDAF using Java code, there are some required functions (marked as required) and an inner class State that must be implemented. Below is a specific example to illustrate.

-#### Example 1

+**Example 1**

The following SimpleDemo will implement a simple function similar to sum, with the input parameter being INT and the output parameter being INT.

@@ -233,120 +233,121 @@

```

-#### Example 2

+**Example 2**

```java

-package org.apache.doris.udf.demo;

-

-

-import java.io.DataInputStream;

-import java.io.DataOutputStream;

-import java.math.BigDecimal;

-import java.util.Arrays;

-import java.util.logging.Logger;

-

-/*UDAF 计算中位数*/

-public class MedianUDAF {

- Logger log = Logger.getLogger("MedianUDAF");

-

- //状态存储

- public static class State {

- //返回结果的精度

- int scale = 0;

- //是否是某一个 tablet 下的某个聚合条件下的数据第一次执行 add 方法

- boolean isFirst = true;

- //数据存储

- public StringBuilder stringBuilder;

- }

-

- //状态初始化

- public State create() {

- State state = new State();

- //根据每个 tablet 下的聚合条件需要聚合的数据量大小,预先初始化,增加性能

- state.stringBuilder = new StringBuilder(1000);

- return state;

- }

-

-

- //处理执行单位处理各自 tablet 下的各自聚合条件下的每个数据

- public void add(State state, Double val, int scale) {

- try {

- if (val != null && state.isFirst) {

- state.stringBuilder.append(scale).append(",").append(val).append(",");

- state.isFirst = false;

- } else if (val != null) {

- state.stringBuilder.append(val).append(",");

- }

- } catch (Exception e) {

- //如果不能保证一定不会异常,建议每个方法都最大化捕获异常,因为目前不支持处理 java 抛出的异常

- log.info("获取数据异常:" + e.getMessage());

- }

- }

-

- //处理数据完需要输出等待聚合

- public void serialize(State state, DataOutputStream out) {

- try {

- //目前暂时只提供 DataOutputStream,如果需要序列化对象可以考虑拼接字符串,转换 json,序列化成字节数组等方式

- //如果要序列化 State 对象,可能需要自己将 State 内部类实现序列化接口

- //最终都是要通过 DataOutputStream 传输

- out.writeUTF(state.stringBuilder.toString());

- } catch (Exception e) {

- log.info("序列化异常:" + e.getMessage());

- }

- }

-

- //获取处理数据执行单位输出的数据

- public void deserialize(State state, DataInputStream in) {

- try {

- String string = in.readUTF();

- state.scale = Integer.parseInt(String.valueOf(string.charAt(0)));

- StringBuilder stringBuilder = new StringBuilder(string.substring(2));

- state.stringBuilder = stringBuilder;

- } catch (Exception e) {

- log.info("反序列化异常:" + e.getMessage());

- }

- }

-

- //聚合执行单位按照聚合条件合并某一个键下数据的处理结果 ,每个键第一次合并时,state1 参数是初始化的实例

- public void merge(State state1, State state2) {

- try {

- state1.scale = state2.scale;

- state1.stringBuilder.append(state2.stringBuilder.toString());

- } catch (Exception e) {

- log.info("合并结果异常:" + e.getMessage());

- }

- }

-

- //对每个键合并后的数据进行并输出最终结果

- public Double getValue(State state) {

- try {

- String[] strings = state.stringBuilder.toString().split(",");

- double[] doubles = new double[strings.length + 1];

- doubles = Arrays.stream(strings).mapToDouble(Double::parseDouble).toArray();

-

- Arrays.sort(doubles);

- double n = doubles.length - 1;

- double index = n * 0.5;

-

- int low = (int) Math.floor(index);

- int high = (int) Math.ceil(index);

-

- double value = low == high ? (doubles[low] + doubles[high]) * 0.5 : doubles[high];

-

- BigDecimal decimal = new BigDecimal(value);

- return decimal.setScale(state.scale, BigDecimal.ROUND_HALF_UP).doubleValue();

- } catch (Exception e) {

- log.info("计算异常:" + e.getMessage());

- }

- return 0.0;

- }

-

- //每个执行单位执行完都会执行

- public void destroy(State state) {

- }

-

+package org.apache.doris.udf.demo;

+

+import java.io.DataInputStream;

+import java.io.DataOutputStream;

+import java.math.BigDecimal;

+import java.util.Arrays;

+import java.util.logging.Logger;

+

+/* UDAF to calculate the median */

+public class MedianUDAF {

+ Logger log = Logger.getLogger("MedianUDAF");

+

+ // State storage

+ public static class State {

+ // Precision of the return result

+ int scale = 0;

+ // Whether it is the first time to execute the add method for a certain aggregation condition under a certain tablet

+ boolean isFirst = true;

+ // Data storage

+ public StringBuilder stringBuilder;

+ }

+

+ // Initialize the state

+ public State create() {

+ State state = new State();

+ // Pre-initialize based on the amount of data that needs to be aggregated under each aggregation condition of each tablet to increase performance

+ state.stringBuilder = new StringBuilder(1000);

+ return state;

+ }

+

+ // Process each data under respective aggregation conditions for each tablet

+ public void add(State state, Double val, int scale) {

+ try {

+ if (val != null && state.isFirst) {

+ state.stringBuilder.append(scale).append(",").append(val).append(",");

+ state.isFirst = false;

+ } else if (val != null) {

+ state.stringBuilder.append(val).append(",");

+ }

+ } catch (Exception e) {

+ // If it cannot be guaranteed that there will be no exceptions, it is recommended to maximize exception capture in each method, as handling of exceptions thrown by Java is currently not supported

+ log.info("Data acquisition exception: " + e.getMessage());

+ }

+ }

+

+ // Data needs to be output for aggregation after processing

+ public void serialize(State state, DataOutputStream out) {

+ try {

+ // Currently, only DataOutputStream is provided. If serialization of objects is required, methods such as concatenating strings, converting to JSON, or serializing into byte arrays can be considered

+ // If the State object needs to be serialized, it may be necessary to implement a serialization interface for the State inner class

+ // Ultimately, everything needs to be transmitted via DataOutputStream

+ out.writeUTF(state.stringBuilder.toString());

+ } catch (Exception e) {

+ log.info("Serialization exception: " + e.getMessage());

+ }

+ }

+

+ // Obtain the output data from the data processing execution unit

+ public void deserialize(State state, DataInputStream in) {

+ try {

+ String string = in.readUTF();

+ state.scale = Integer.parseInt(String.valueOf(string.charAt(0)));

+ StringBuilder stringBuilder = new StringBuilder(string.substring(2));

+ state.stringBuilder = stringBuilder;

+ } catch (Exception e) {

+ log.info("Deserialization exception: " + e.getMessage());

+ }

+ }

+

+ // The aggregation execution unit merges the processing results of data under certain aggregation conditions for a given key. The state1 parameter is the initialized instance during the first merge of each key

+ public void merge(State state1, State state2) {

+ try {

+ state1.scale = state2.scale;

+ state1.stringBuilder.append(state2.stringBuilder.toString());

+ } catch (Exception e) {

+ log.info("Merge result exception: " + e.getMessage());

+ }

+ }

+

+ // Output the final result after merging the data for each key

+ public Double getValue(State state) {

+ try {

+ String[] strings = state.stringBuilder.toString().split(",");

+ double[] doubles = new double[strings.length];

+ for (int i = 0; i < strings.length - 1; i++) {

+ doubles[i] = Double.parseDouble(strings[i + 1]);

+ }

+

+ Arrays.sort(doubles);

+ double n = doubles.length;

+ if (n == 0) {

+ return 0.0;

+ }

+ double index = (n - 1) / 2.0;

+

+ int low = (int) Math.floor(index);

+ int high = (int) Math.ceil(index);

+

+ double value = low == high ? (doubles[low] + doubles[high]) / 2 : doubles[high];

+

+ BigDecimal decimal = new BigDecimal(value);

+ return decimal.setScale(state.scale, BigDecimal.ROUND_HALF_UP).doubleValue();

+ } catch (Exception e) {

+ log.info("Calculation exception: " + e.getMessage());

+ }

+ return 0.0;

+ }

+

+ // Executed after each execution unit completes

+ public void destroy(State state) {

+ }

}

-

```

### UDTF

diff --git a/docs/table-design/data-partition.md b/docs/table-design/data-partition.md

index 901b2da..5805199 100644

--- a/docs/table-design/data-partition.md

+++ b/docs/table-design/data-partition.md

@@ -24,44 +24,51 @@

under the License.

-->

-# Data Partition

+This document mainly introduces table creation and data partitioning in Doris, as well as potential problems and solutions encountered during table creation operations.

-This topic is about table creation and data partitioning in Doris, including the common problems in table creation and their solutions.

+## Basic concepts

-## Basic Concepts

-

-In Doris, data is logically described in the form of table.

+In Doris, data is logically described in the form of tables.

### Row & Column

-A table contains rows and columns.

+A table consists of rows and columns:

-Row refers to a row of user data. Column is used to describe different fields in a row of data.

+- Row: Represents a single line of user data;

-Columns can be divided into two categories: Key and Value. From a business perspective, Key and Value correspond to dimension columns and metric columns, respectively. The key column of Doris is the column specified in the table creation statement. The column after the keyword 'unique key' or 'aggregate key' or 'duplicate key' in the table creation statement is the key column, and the rest except the key column is the value column. In the Aggregate Model, rows with the same values in Key columns will be aggregated into one row. The way how Value columns are aggregated is specified by the user when the table is built. For more information about the Aggregate Model, please see the [Data Model](../table-design/data-model/overview.md).

+- Column: Used to describe different fields in a row of data;

-### Tablet & Partition

+- Columns can be divided into two types: Key and Value. From a business perspective, Key and Value can correspond to dimension columns and metric columns, respectively. The key columns in Doris are those specified in the table creation statement, which are the columns following the keywords `unique key`, `aggregate key`, or `duplicate key`. The remaining columns are value columns. From the perspective of the aggregation model, rows with the same Key columns will be aggregated into a single row. The aggregation method for value columns is specified by the user during table creation. For more information on aggregation models, refer to the Doris [Data Model](../table-design/data-model/overview).

-In the Doris storage engine, user data are horizontally divided into data tablets (also known as data buckets). Each tablet contains several rows of data. The data between the individual tablets do not intersect and is physically stored independently.

+### Partition & Tablet

-Tablets are logically attributed to different Partitions. One Tablet belongs to only one Partition, and one Partition contains several Tablets. Since the tablets are physically stored independently, the partitions can be seen as physically independent, too. Tablet is the smallest physical storage unit for data operations such as movement and replication.

+Doris supports two levels of data partitioning. The first level is Partitioning, which supports Range and List partition. The second level is Bucket (also known as Tablet), which supports Hash and Random . If no partitioning is established during table creation, Doris generates a default partition that is transparent to the user. When using the default partition, only Bucket is supported.

-A Table is formed of multiple Partitions. Partition can be thought of as the smallest logical unit of management. Data import and deletion can be performed on only one Partition.

+In the Doris storage engine, data is horizontally partitioned into several tablets. Each tablet contains several rows of data. There is no overlap between the data in different tablets, and they are stored physically independently.

-## Data Partitioning

+Multiple tablets logically belong to different partitions. A single tablet belongs to only one partition, while a partition contains several tablets. Because tablets are stored physically independently, partitions can also be considered physically independent. The tablet is the smallest physical storage unit for operations such as data movement and replication.

-The following illustrates on data partitioning in Doris using the example of a CREATE TABLE operation.

+Several partitions compose a table. The partition can be considered the smallest logical management unit.

-CREATE TABLE in Doris is a synchronous command. It returns results after the SQL execution is completed. Successful returns indicate successful table creation. For more information on the syntax, please refer to [CREATE TABLE](../sql-manual/sql-statements/Data-Definition-Statements/Create/CREATE-TABLE.md), or input the `HELP CREATE TABLE;` command.

+Benefits of Two-Level data partitioning:

-This section introduces how to create tables in Doris.

+- For dimensions with time or similar ordered values, such dimension columns can be used as partitioning columns. The partition granularity can be evaluated based on import frequency and partition data volume.

+

+- Historical data deletion requirements: If there is a need to delete historical data (such as retaining only the data for the most recent several days), composite partition can be used to achieve this goal by deleting historical partitions. Alternatively, DELETE statements can be sent within specified partitions to delete data.

+

+- Solving data skew issues: Each partition can specify the number of buckets independently. For example, when partitioning by day and there are significant differences in data volume between days, the number of buckets for each partition can be specified to reasonably distribute data across different partitions. It is recommended to choose a column with high distinctiveness as the bucketing column.

+

+### Example of creating a table

+

+CREATE TABLE in Doris is a synchronous command. It returns results after the SQL execution is completed. Successful returns indicate successful table creation. For more information, please refer to [CREATE TABLE](../sql-manual/sql-statements/Data-Definition-Statements/Create/CREATE-TABLE), or input the `HELP CREATE TABLE;` command.

+

+This section introduces how to create tables in Doris by range partiton and hash buckets.

```sql

-- Range Partition

-

-CREATE TABLE IF NOT EXISTS example_db.example_range_tbl

+CREATE TABLE IF NOT EXISTS example_range_tbl

(

- `user_id` LARGEINT NOT NULL COMMENT "User ID",

+ `user_id` LARGEINT NOT NULL COMMENT "User ID",

`date` DATE NOT NULL COMMENT "Date when the data are imported",

`timestamp` DATETIME NOT NULL COMMENT "Timestamp when the data are imported",

`city` VARCHAR(20) COMMENT "User location city",

@@ -70,436 +77,992 @@

`last_visit_date` DATETIME REPLACE DEFAULT "1970-01-01 00:00:00" COMMENT "User last visit time",

`cost` BIGINT SUM DEFAULT "0" COMMENT "Total user consumption",

`max_dwell_time` INT MAX DEFAULT "0" COMMENT "Maximum user dwell time",

- `min_dwell_time` INT MIN DEFAULT "99999" COMMENT "Minimum user dwell time"

+ `min_dwell_time` INT MIN DEFAULT "99999" COMMENT "Minimum user dwell time"

)

-ENGINE=olap

+ENGINE=OLAP

AGGREGATE KEY(`user_id`, `date`, `timestamp`, `city`, `age`, `sex`)

PARTITION BY RANGE(`date`)

(

- PARTITION `p201701` VALUES LESS THAN ("2017-02-01"),

- PARTITION `p201702` VALUES LESS THAN ("2017-03-01"),

- PARTITION `p201703` VALUES LESS THAN ("2017-04-01"),

- PARTITION `p2018` VALUES [("2018-01-01"), ("2019-01-01"))

+ PARTITION `p201701` VALUES [("2017-01-01"), ("2017-02-01")),

+ PARTITION `p201702` VALUES [("2017-02-01"), ("2017-03-01")),

+ PARTITION `p201703` VALUES [("2017-03-01"), ("2017-04-01"))

)

DISTRIBUTED BY HASH(`user_id`) BUCKETS 16

PROPERTIES

(

- "replication_num" = "3",

- "storage_medium" = "SSD",

- "storage_cooldown_time" = "2018-01-01 12:00:00"

+ "replication_num" = "1"

);

+```

+Here use the AGGREGATE KEY data model as an example. In the AGGREGATE KEY data model, all columns that are specified with an aggregation type (SUM, REPLACE, MAX, or MIN) are Value columns. The rest are the Key columns.

--- List Partition

+In the PROPERTIES at the end of the CREATE TABLE statement, you can find detailed information about the relevant parameters that can be set in PROPERTIES by referring to the documentation on [CREATE TABLE](../sql-manual/sql-statements/Data-Definition-Statements/Create/CREATE-TABLE).

-CREATE TABLE IF NOT EXISTS example_db.example_list_tbl

+The default type of ENGINE is OLAP. In Doris, only this OLAP ENGINE type is responsible for data management and storage by Doris itself. Other ENGINE types, such as mysql, broker, es, etc., are essentially just mappings to tables in other external databases or systems, allowing Doris to read this data. However, Doris itself does not create, manage, or store any tables or data for non-OLAP ENGINE types.

+

+`IF NOT EXISTS` indicates that if the table has not been created before, it will be created. Note that this only checks if the table name exists and does not check if the schema of the new table is the same as the schema of an existing table. Therefore, if there is a table with the same name but a different schema, this command will also return successfully, but it does not mean that a new table and a new schema have been created.

+

+### View partition

+

+You can use the `show create table` command to view the partition information of a table.

+

+```sql

+> show create table example_range_tbl

++-------------------+---------------------------------------------------------------------------------------------------------+

+| Table | Create Table |

++-------------------+---------------------------------------------------------------------------------------------------------+

+| example_range_tbl | CREATE TABLE `example_range_tbl` ( |

+| | `user_id` largeint(40) NOT NULL COMMENT 'User ID', |

+| | `date` date NOT NULL COMMENT 'Date when the data are imported', |

+| | `timestamp` datetime NOT NULL COMMENT 'Timestamp when the data are imported', |

+| | `city` varchar(20) NULL COMMENT 'User location city', |

+| | `age` smallint(6) NULL COMMENT 'User age', |

+| | `sex` tinyint(4) NULL COMMENT 'User gender', |

+| | `last_visit_date` datetime REPLACE NULL DEFAULT "1970-01-01 00:00:00" COMMENT 'User last visit time', |

+| | `cost` bigint(20) SUM NULL DEFAULT "0" COMMENT 'Total user consumption', |

+| | `max_dwell_time` int(11) MAX NULL DEFAULT "0" COMMENT 'Maximum user dwell time', |

+| | `min_dwell_time` int(11) MIN NULL DEFAULT "99999" COMMENT 'Minimum user dwell time' |

+| | ) ENGINE=OLAP |

+| | AGGREGATE KEY(`user_id`, `date`, `timestamp`, `city`, `age`, `sex`) |

+| | COMMENT 'OLAP' |

+| | PARTITION BY RANGE(`date`) |

+| | (PARTITION p201701 VALUES [('0000-01-01'), ('2017-02-01')), |

+| | PARTITION p201702 VALUES [('2017-02-01'), ('2017-03-01')), |

+| | PARTITION p201703 VALUES [('2017-03-01'), ('2017-04-01'))) |

+| | DISTRIBUTED BY HASH(`user_id`) BUCKETS 16 |

+| | PROPERTIES ( |

+| | "replication_allocation" = "tag.location.default: 1", |

+| | "is_being_synced" = "false", |

+| | "storage_format" = "V2", |

+| | "light_schema_change" = "true", |

+| | "disable_auto_compaction" = "false", |

+| | "enable_single_replica_compaction" = "false" |

+| | ); |

++-------------------+---------------------------------------------------------------------------------------------------------+

+```

+

+You can use `show partitions from your_table` command to view the partition information of a table.

+

+```

+> show partitions from example_range_tbl

++-------------+---------------+----------------+---------------------+--------+--------------+--------------------------------------------------------------------------------+-----------------+---------+----------------+---------------

++---------------------+---------------------+--------------------------+----------+------------+-------------------------+-----------+

+| PartitionId | PartitionName | VisibleVersion | VisibleVersionTime | State | PartitionKey | Range | DistributionKey | Buckets | ReplicationNum | StorageMedium

+| CooldownTime | RemoteStoragePolicy | LastConsistencyCheckTime | DataSize | IsInMemory | ReplicaAllocation | IsMutable |

++-------------+---------------+----------------+---------------------+--------+--------------+--------------------------------------------------------------------------------+-----------------+---------+----------------+---------------

++---------------------+---------------------+--------------------------+----------+------------+-------------------------+-----------+

+| 28731 | p201701 | 1 | 2024-01-25 10:50:51 | NORMAL | date | [types: [DATEV2]; keys: [0000-01-01]; ..types: [DATEV2]; keys: [2017-02-01]; ) | user_id | 16 | 1 | HDD

+| 9999-12-31 23:59:59 | | | 0.000 | false | tag.location.default: 1 | true |

+| 28732 | p201702 | 1 | 2024-01-25 10:50:51 | NORMAL | date | [types: [DATEV2]; keys: [2017-02-01]; ..types: [DATEV2]; keys: [2017-03-01]; ) | user_id | 16 | 1 | HDD

+| 9999-12-31 23:59:59 | | | 0.000 | false | tag.location.default: 1 | true |