| commit | d371d4b19d4047bd1a0f4d8d3a4999812770a4e2 | |
|---|---|---|
| author | Zack Chase Lipton <zachary.chase@gmail.com> | Wed Apr 12 17:22:43 2017 -0700 |
| committer | Eric Junyuan Xie <piiswrong@users.noreply.github.com> | Wed Apr 12 17:22:43 2017 -0700 |
| tree | ca06976ee9c84179201cca665951c475faa81d5e | |
| parent | c1641cd46317e9dc5f8c5fd96db9d405cad4a6f9 | |

New docs (#5803)

* python -> Python (fixed capitalization typo)
* flavours -> flavors for internal consistency with American spellings. Changed 'good flavors' to specify imperative and symbolic programming; better not to tease the reader
* Improved writing of 'History' section
* fixed symbol.md doc, which previously used the deprecated / incorrect mxnet.sym.var instead of the real/working mxnet.sym.Variable
* fixed typos and readability in parts of the README.md
* removed awkward phrasing about 'flavours'
* typo cleanup
* Update NEWS.md: fixed typos
* adding eval function for combining with , makes for quicker introspection in interactive interpreters
* fixed docstring
* fixed docstring, cleaned up whitespace
* clean up
* Changed code to work with **kwargs, so it doesn't require a dictionary explicitly. This also removes the need for clumsy code to handle the case where there are no arguments
* fixed too-long lines in the docstring
* Update symbol.py
* heavily revised docs in symbol.py
* lightly revised content in ndarray.py
* Update symbol.py
* heavier pass for language on ndarray.py
* fixed docstring line lengths per lint
* fixed string continuation error
* Improved docs for (alphabetically) model.py through visualization.py
* fixing line-too-long pylint bugs
* fixes pylint line length error
* improved documentation further by making cosmetic edits to all other files in the Python API
* edited module .py documents; also modified all .py docs to comply with the NumPy doc standard and to use double backticks around True, False, and None values
* adding a few files that escaped the last commit
* Update name.py: fixed speciry -> specify
* changing binded to bound everywhere, warning for using old attribute name
* debugging indentation error in base_module and Non-ASCII character in rtc.py
* fixed pylint line-too-long errors
* fixing docstring line in bucketing module
* changed 'bound' back to 'binded' in code so that doc changes could be merged in without breaking code
* Rewrote these pages to not contain an overwhelming amount of mostly redundant, confusing information. Also eliminated a couple of redundant tutorials
* removed a nonsense line from how-tos

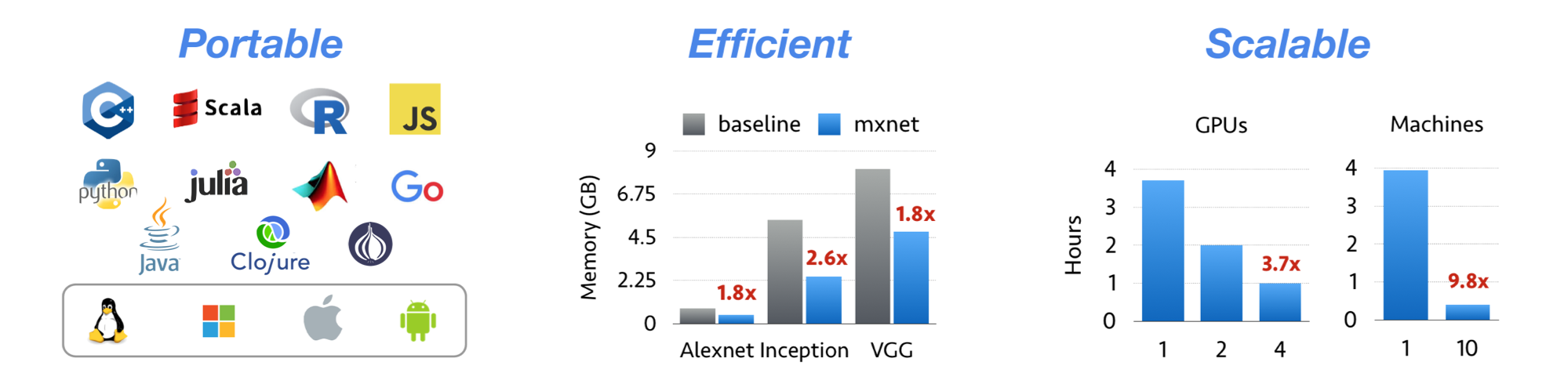

MXNet is a deep learning framework designed for both efficiency and flexibility. It allows you to mix symbolic and imperative programming to maximize efficiency and productivity. At its core, MXNet contains a dynamic dependency scheduler that automatically parallelizes both symbolic and imperative operations on the fly. A graph optimization layer on top of that makes symbolic execution fast and memory efficient. MXNet is portable and lightweight, scaling effectively to multiple GPUs and multiple machines.
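To make the idea of a dependency scheduler concrete, here is a minimal Python sketch under stated assumptions: each operation declares which variables it reads and which it writes, and the scheduler starts it on a thread pool as soon as every earlier conflicting operation (last writer of anything it touches, plus earlier readers of anything it writes) has finished. Operations with no conflicts therefore run in parallel without the caller arranging it. `DependencyEngine`, `push`, `wait_all`, and `close` are hypothetical names chosen for this illustration; this is not MXNet's engine API or implementation.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class DependencyEngine:
    """Hypothetical toy scheduler (not MXNet's engine): runs pushed ops on a
    thread pool once all earlier conflicting ops have finished."""

    def __init__(self, max_workers=4):
        self._pool = ThreadPoolExecutor(max_workers=max_workers)
        self._lock = threading.Lock()
        self._idle = threading.Condition(self._lock)
        self._last_writer = {}  # variable -> id of the most recent op writing it
        self._readers = {}      # variable -> ids of ops reading it since that write
        self._pending = {}      # op id -> number of unfinished dependencies
        self._children = {}     # op id -> ids of ops waiting on it
        self._funcs = {}        # op id -> callable not yet executed
        self._done = set()      # ids of finished ops
        self._next_id = 0
        self._outstanding = 0   # ops pushed but not yet finished

    def push(self, fn, reads=(), writes=()):
        """Schedule fn, which reads `reads` and mutates `writes`."""
        with self._lock:
            op = self._next_id
            self._next_id += 1
            deps = set()
            for var in reads:   # read-after-write: wait for the last writer
                if var in self._last_writer:
                    deps.add(self._last_writer[var])
            for var in writes:  # write-after-write and write-after-read
                if var in self._last_writer:
                    deps.add(self._last_writer[var])
                deps.update(self._readers.get(var, ()))
            deps -= self._done  # finished ops impose no constraint
            for var in reads:
                self._readers.setdefault(var, set()).add(op)
            for var in writes:
                self._last_writer[var] = op
                self._readers[var] = set()
            self._funcs[op] = fn
            self._pending[op] = len(deps)
            self._outstanding += 1
            for dep in deps:
                self._children.setdefault(dep, []).append(op)
            ready = not deps
        if ready:
            self._pool.submit(self._run, op)
        return op

    def _run(self, op):
        with self._lock:
            fn = self._funcs.pop(op)
        try:
            fn()
        finally:
            ready = []
            with self._lock:
                self._done.add(op)
                for child in self._children.pop(op, []):
                    self._pending[child] -= 1
                    if self._pending[child] == 0:
                        ready.append(child)
                self._outstanding -= 1
                self._idle.notify_all()
            for child in ready:  # release ops whose last dependency just finished
                self._pool.submit(self._run, child)

    def wait_all(self):
        """Block until every pushed op has finished."""
        with self._idle:
            while self._outstanding:
                self._idle.wait()

    def close(self):
        self.wait_all()
        self._pool.shutdown()


store = {"a": 1, "b": 2}

def make_c(): store["c"] = store["a"] + store["b"]
def make_d(): store["d"] = store["a"] * 10
def make_e(): store["e"] = store["c"] + store["d"]
def overwrite_a(): store["a"] = 100

engine = DependencyEngine(max_workers=4)
engine.push(make_c, reads=["a", "b"], writes=["c"])
engine.push(make_d, reads=["a"], writes=["d"])       # independent of make_c: may run concurrently
engine.push(make_e, reads=["c", "d"], writes=["e"])  # waits for both producers
engine.push(overwrite_a, writes=["a"])               # waits until make_c and make_d have read "a"
engine.close()
print(store["e"], store["a"])  # 13 100
```

The caller only declares data dependencies; ordering and parallelism are derived from them. This is what lets a scheduler of this kind treat imperatively issued operations and operations from an executed symbolic graph the same way. Exceptions raised inside an op are swallowed by the pool in this sketch; a real engine would need to propagate them.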

MXNet is more than a deep learning project. It is also a collection of blueprints and guidelines for building deep learning systems, and a source of interesting insights into DL systems for hackers.

© Contributors, 2015-2017. Licensed under an Apache-2.0 license.

Tianqi Chen, Mu Li, Yutian Li, Min Lin, Naiyan Wang, Minjie Wang, Tianjun Xiao, Bing Xu, Chiyuan Zhang, and Zheng Zhang. MXNet: A Flexible and Efficient Machine Learning Library for Heterogeneous Distributed Systems. In Neural Information Processing Systems, Workshop on Machine Learning Systems, 2015

MXNet emerged from a collaboration among the authors of cxxnet, minerva, and purine2. The project reflects what we have learned from those earlier projects, combining aspects of each to achieve flexibility, speed, and memory efficiency.