| commit | d6aa6758a23e6cde483828f084436e663d94ba7c | |
|---|---|---|
| author | starocean999 <40539150+starocean999@users.noreply.github.com> | Thu Aug 03 22:34:59 2023 +0800 |
| committer | GitHub <noreply@github.com> | Thu Aug 03 22:34:59 2023 +0800 |
| tree | cdc3f8f831e9ac80478dceba3c4cf94f33e36836 | |
| parent | 4010d68f273c845e4cb51285a1b673ef85d4d44b | |

[fix](nereids) pick several pr for bug fix (#22575)

Pick several PRs from master for bug fixes:

#22034

#22168

#22197

#21469

#21727

#22150

#22254

* [refactor](Nereids) push down all non-slot order key in sort and prune them upper sort (#22034)

According to the implementation in the execution engine, all order keys in SortNode are output, so we must normalize LogicalSort to match.

We push down all non-slot order keys in the sort and materialize them below the sort. As a result, every order key is a slot and SortNode does not need to do any projection itself.

This simplifies the translation of SortNode by avoiding the generation of resolvedTupleExprs and sortTupleDesc.
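
The normalization described above can be sketched roughly as follows. This is a minimal illustration in Python rather than the Nereids implementation: `Slot`, `Expr`, `normalize_sort` and the `_sort_key_N` alias naming are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Slot:
    name: str


@dataclass(frozen=True)
class Expr:
    sql: str  # any non-slot expression, e.g. "a + 1"


def normalize_sort(order_keys, child_output):
    """Split a sort into (bottom_project, sort_keys, top_project).

    bottom_project is a list of (expression, output_slot) pairs evaluated
    below the sort, sort_keys contains only slots, and top_project prunes
    the helper slots again above the sort.
    """
    bottom = [(slot, slot) for slot in child_output]
    sort_keys = []
    for i, key in enumerate(order_keys):
        if isinstance(key, Slot):
            sort_keys.append(key)
        else:
            # Hypothetical alias name; materialize the expression below the sort.
            alias = Slot(f"_sort_key_{i}")
            bottom.append((key, alias))
            sort_keys.append(alias)
    top = list(child_output)  # the upper project keeps only the original output
    return bottom, sort_keys, top
```

With `ORDER BY a + 1, b` over a child that outputs `a` and `b`, the sort only ever sees slots, and the helper slot for `a + 1` is dropped again by the upper project.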

* [fix](Nereids) translate partition topn order key on wrong tuple (#22168)

The partition key should be on the child tuple; the sort key should be on the partition topn's tuple.

* [fix](Nereids) translate failed when enable topn two phase opt (#22197)

1. should not add the rowid slot to resolvedTupleExprs

2. should set notMaterialize on the sort's tuple when doing two-phase optimization

* [opt](nereids) update CTEConsumer's stats when CTEProducer's stats updated (#21469)

* [refactor](Nereids) refactor cte analyze, rewrite and reuse code (#21727)

REFACTOR:

1. Generate CTEAnchor, CTEProducer, CTEConsumer during analysis.

For example, take the statement `WITH cte1 AS (SELECT * FROM t) SELECT * FROM cte1`.

Before this PR, we got an analyzed plan like this:

```
logicalCTE(LogicalSubQueryAlias(cte1))
+-- logicalProject()
    +-- logicalCteConsumer()
```

We only had LogicalCteConsumer in the plan, but no LogicalCteProducer. This is not a valid plan and should not be the final result of analysis.

After this PR, we get an analyzed plan like this:

```
logicalCteAnchor()
|-- logicalCteProducer()
+-- logicalProject()
    +-- logicalCteConsumer()
```

This is a valid plan with both LogicalCteProducer and LogicalCteConsumer.

2. Replace re-analyzing the unbound plan with deep-copying the plan when doing CTEInline

Because we now generate LogicalCteAnchor and LogicalCteProducer during analysis, we can no longer re-analyze to generate the CTE inline plan.

Another reason is that we reuse the relation id between the unbound and the bound relation. If we re-analyzed the unresolved CTE plan, we would get two relations with the same RelationId. This is wrong, because we use RelationId to distinguish different relations.

This PR implements two helper classes to deep copy a new plan from CTEProducer: `LogicalPlanDeepCopier` and `ExpressionDeepCopier`.

3. New rewrite framework to ensure CTEInline is done in the right way.

Before this PR, we did CTEInline before applying any rewrite rule. But sometimes a CteConsumer can be eliminated after rewriting.

After this PR, we do CTEInline after the plans relying on CTEProducer have been rewritten. So we can do CTEInline if the count of CTEConsumers decreases below the CTEInline threshold.

4. add relation id to all relation plan node

5. let all relation generated from table implement trait CatalogRelation

6. reuse relation id between unbound relation and relation after bind
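
Item 2 above (deep-copying the producer plan instead of re-analyzing it) can be illustrated with a small Python sketch. It is not the code of `LogicalPlanDeepCopier`; the `Plan` class, the `deep_copy` function and the id generator are hypothetical stand-ins that only show why every copy needs fresh relation ids.

```python
import itertools
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Plan:
    op: str
    children: List["Plan"] = field(default_factory=list)
    relation_id: Optional[int] = None  # set only on relation nodes


def deep_copy(plan, new_id):
    """Copy a plan tree, giving every relation node a fresh relation id."""
    rid = new_id() if plan.relation_id is not None else None
    copied_children = [deep_copy(child, new_id) for child in plan.children]
    return Plan(plan.op, copied_children, rid)


# Hypothetical producer body: project over a scan with relation id 7.
producer_body = Plan("project", [Plan("scan t", relation_id=7)])
ids = itertools.count(100)
inline_1 = deep_copy(producer_body, lambda: next(ids))
inline_2 = deep_copy(producer_body, lambda: next(ids))
```

Each inlined copy gets its own relation id, so two consumers inlined from the same producer remain distinguishable, while the original producer plan is left untouched.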

ENHANCEMENT:

1. Pull up CTEAnchor before RBO to avoid breaking other rules' patterns

Before this PR, we generated CTEAnchor and LogicalCTE in the middle of the plan, so every rule had to handle LogicalCTEAnchor; otherwise it would generate an unexpected plan.

For example, push down filter and push down project had to add patterns like:

```

logicalProject(logicalCTE)

...

logicalFilter(logicalCteAnchor)

...

```

Project and filter must be pushed through these virtual plan nodes to ensure that all projects and filters can be merged together and end up in the right order. For example:

```
logicalProject
+-- logicalFilter
    +-- logicalCteAnchor
        +-- logicalProject
            +-- logicalFilter
                +-- logicalOlapScan
```

The plan above leads to a translation error, because we cannot apply filter and project twice on the bottom logicalOlapScan.

BUGFIX:

1. Recursively analyze LogicalCTE to avoid binding an outer relation to an inner CTE

For example:

```sql

SELECT * FROM (WITH cte1 AS (SELECT * FROM t1) SELECT * FROM cte1)v1, cte1 v2;

```

Before this PR, we used the nested CTE name to bind the outer plan, so the outer cte1 with alias v2 was bound to the inner cte1.

After this PR, the SQL throws a Table not exists exception when binding.

2. Use the right way to do withChildren in CTEProducer and remove projects from it

Before this PR, we added an attribute named projects to CTEProducer to represent its output, because we could not get the right output by calling the `getOutput` method on it. The root cause is the wrong implementation of computeOutput in LogicalCteProducer.

This PR fixes this problem and removes the projects attribute of CTEProducer.

3. Adjust nullable rule updates CTEConsumer's output by CTEProducer's output

This PR processes nullable on LogicalCteConsumer to ensure that the CteConsumer's output has the right nullable info if the CteProducer's output nullable has been adjusted.

4. Binding set operation expressions should not change the nullable of the children's output

This PR fixes a problem introduced by the previous PR #21168. The nullable info of SetOperation's children should not be changed after binding SetOperation.
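
The scoping rule behind bugfix 1 can be sketched as a chain of name scopes. The Python below is only an illustration under assumed names (`Scope`, `define`, `resolve` and the `catalog` set are hypothetical), not the binder used by Nereids.

```python
class Scope:
    """A CTE name scope; an inner scope can see outer names, not vice versa."""

    def __init__(self, parent=None):
        self.parent = parent
        self.ctes = {}

    def define(self, name, plan):
        self.ctes[name] = plan

    def resolve(self, name, catalog):
        scope = self
        while scope is not None:
            if name in scope.ctes:
                return ("cte", name)
            scope = scope.parent
        if name in catalog:
            return ("table", name)
        raise LookupError(f"Table not exists: {name}")


catalog = {"t1"}
outer = Scope()
inner = Scope(parent=outer)  # scope of the subquery aliased as v1
inner.define("cte1", "SELECT * FROM t1")
```

Resolving `cte1` from `inner` finds the CTE, while resolving it from `outer` raises an error, because a CTE defined inside the subquery is never registered in the enclosing scope.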

* [refactor](Nereids) add sink interface and abstract class (#22150)

1. add trait Sink

2. add abstract class LogicalSink and PhysicalSink

3. replace some sink visitor by visitLogicalSink and visitPhysicalSink
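
The effect of a shared sink base class on visitors can be sketched as below. This is a hedged Python analogue of the Java visitor pattern: `LogicalSink` and `visit_logical_sink` mirror names from the commit message, while `LogicalResultSink`, `LogicalTableSink`, `Scan` and `SinkCounter` are hypothetical names used only for the example.

```python
class Scan:
    def accept(self, visitor):
        return visitor.visit_plan(self)


class LogicalSink:
    def __init__(self, child):
        self.child = child

    def accept(self, visitor):
        return visitor.visit_logical_sink(self)


class LogicalResultSink(LogicalSink):
    def accept(self, visitor):
        return visitor.visit_logical_result_sink(self)


class LogicalTableSink(LogicalSink):  # hypothetical concrete sink
    def accept(self, visitor):
        return visitor.visit_logical_table_sink(self)


class PlanVisitor:
    def visit_plan(self, plan):
        return None

    def visit_logical_sink(self, sink):
        return self.visit_plan(sink)

    # Concrete sinks fall back to the generic sink handler by default.
    def visit_logical_result_sink(self, sink):
        return self.visit_logical_sink(sink)

    def visit_logical_table_sink(self, sink):
        return self.visit_logical_sink(sink)


class SinkCounter(PlanVisitor):
    """Overrides one method and still handles every kind of sink."""

    def __init__(self):
        self.sinks = 0

    def visit_logical_sink(self, sink):
        self.sinks += 1
        return sink.child.accept(self)


counter = SinkCounter()
LogicalResultSink(LogicalTableSink(Scan())).accept(counter)
```

A visitor that treats all sinks alike overrides only the generic method, instead of one method per sink type.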

* [refactor](Nereids) add result sink node (#22254)

Use ResultSink as the query root node so that the plan of a query statement has the same pattern as that of an insert statement.

* [fix](nereids)pick several pr for bug fix

---------

Co-authored-by: morrySnow <101034200+morrySnow@users.noreply.github.com>

Co-authored-by: AKIRA <33112463+Kikyou1997@users.noreply.github.com>

Apache Doris is an easy-to-use, high-performance and real-time analytical database based on MPP architecture, known for its extreme speed and ease of use. It only requires a sub-second response time to return query results under massive data and can support not only high-concurrent point query scenarios but also high-throughput complex analysis scenarios.

All this makes Apache Doris an ideal tool for scenarios including report analysis, ad-hoc query, unified data warehouse, and data lake query acceleration. On Apache Doris, users can build various applications, such as user behavior analysis, AB test platform, log retrieval analysis, user portrait analysis, and order analysis.

🎉 Version 2.0-beta has been released. The 2.0 beta version already has a better user experience in terms of functional integrity and system stability than 2.0 Alpha. We welcome all users who have requirements for the new features of the 2.0 version to deploy and upgrade. Check out the 🔗Release Notes here.

🎉 Version 1.2.5 has been released! It is a fully evolved release and all users are encouraged to upgrade to this release. Check out the 🔗Release Notes here.

🎉 Version 1.1.5 has been released. It is a stability improvement and bugfix release based on version 1.1. Check out the 🔗Release Notes here.

👀 Have a look at the 🔗Official Website for a comprehensive list of Apache Doris's core features, blogs and user cases.

As shown in the figure below, after various data integration and processing, the data sources are usually stored in the real-time data warehouse Apache Doris and the offline data lake or data warehouse (in Apache Hive, Apache Iceberg or Apache Hudi).

Apache Doris is widely used in the following scenarios:

Reporting Analysis

Ad-Hoc Query. Analyst-oriented self-service analytics with irregular query patterns and high throughput requirements. Xiaomi has built a growth analytics platform (Growth Analytics, GA) based on Doris, using user behavior data for business growth analysis, with an average query latency of 10 seconds, a 95th percentile query latency of 30 seconds or less, and tens of thousands of SQL queries per day.

Unified Data Warehouse Construction. Apache Doris allows users to build a unified data warehouse via one single platform and save the trouble of handling complicated software stacks. Chinese hot pot chain Haidilao has built a unified data warehouse with Doris to replace its old complex architecture consisting of Apache Spark, Apache Hive, Apache Kudu, Apache HBase, and Apache Phoenix.

Data Lake Query. Apache Doris avoids data copying by federating the data in Apache Hive, Apache Iceberg, and Apache Hudi using external tables, and thus achieves outstanding query performance.

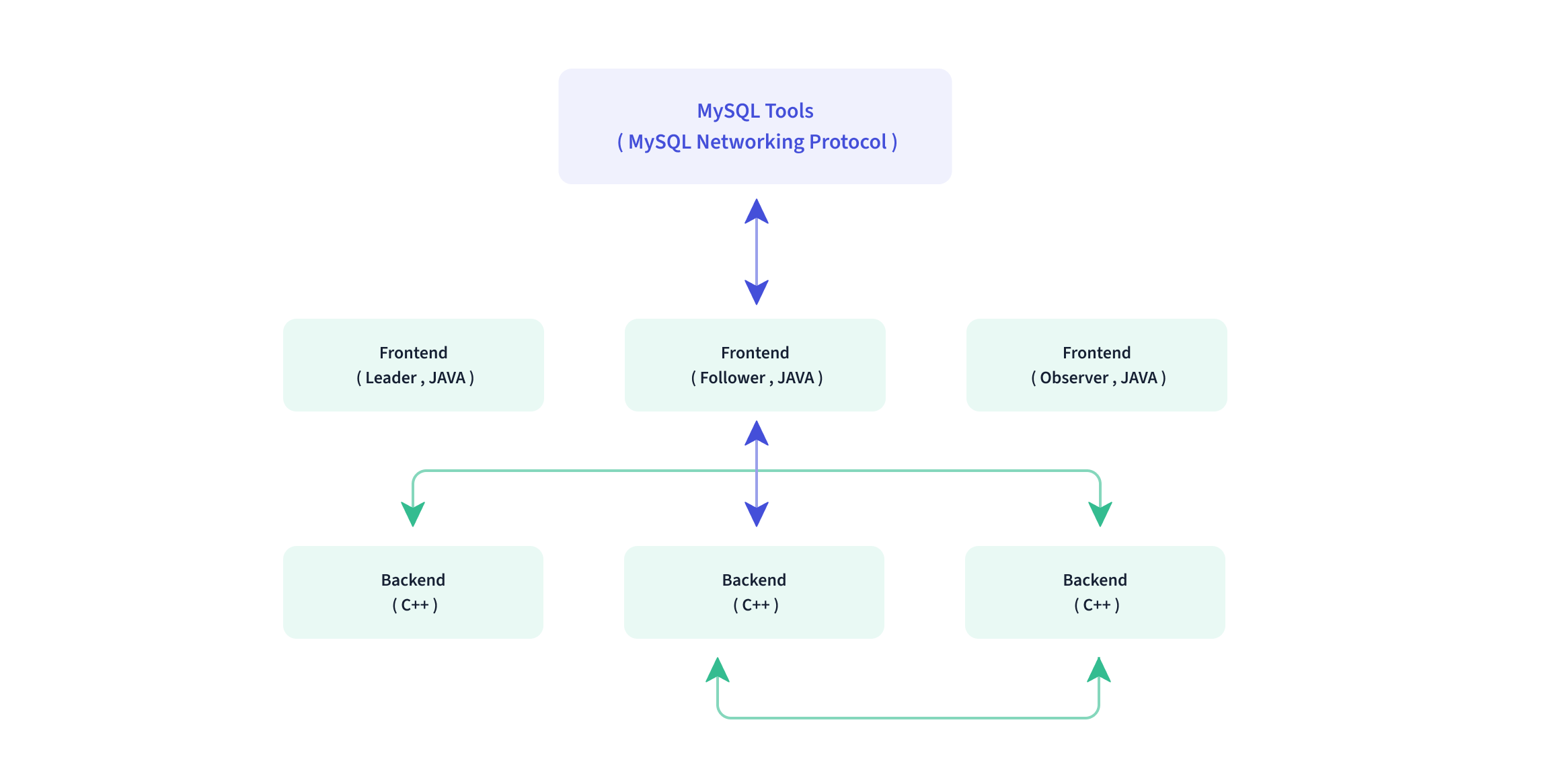

The overall architecture of Apache Doris is shown in the following figure. The Doris architecture is very simple, with only two types of processes.

Frontend (FE): user request access, query parsing and planning, metadata management, node management, etc.

Backend (BE): data storage and query plan execution

Both types of processes are horizontally scalable, and a single cluster can support up to hundreds of machines and tens of petabytes of storage capacity. And these two types of processes guarantee high availability of services and high reliability of data through consistency protocols. This highly integrated architecture design greatly reduces the operation and maintenance cost of a distributed system.

In terms of interfaces, Apache Doris adopts MySQL protocol, supports standard SQL, and is highly compatible with MySQL dialect. Users can access Doris through various client tools and it supports seamless connection with BI tools.

Doris uses a columnar storage engine, which encodes, compresses, and reads data by column. This enables a very high compression ratio and largely reduces irrelevant data scans, thus making more efficient use of IO and CPU resources. Doris supports various index structures to minimize data scans:

Doris supports a variety of storage models and has optimized them for different scenarios:

Aggregate Key Model: Value columns of rows with the same keys are merged, which significantly improves performance.

Unique Key Model: Keys are unique in this model and data with the same key will be overwritten to achieve row-level data updates.

Duplicate Key Model: This is a detailed data model that stores every row as-is, which suits the detailed storage of fact tables.
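
The difference between the three models can be illustrated with a toy Python sketch over `(key, value)` rows. It only mimics the visible semantics described above; the function names are hypothetical and it says nothing about how Doris stores or merges data internally.

```python
import operator


def load_duplicate(rows):
    """Duplicate Key: keep every row exactly as loaded."""
    return list(rows)


def load_unique(rows):
    """Unique Key: a later row overwrites an earlier row with the same key."""
    latest = {}
    for key, value in rows:
        latest[key] = value
    return list(latest.items())


def load_aggregate(rows, merge=operator.add):
    """Aggregate Key: merge the values of rows that share a key."""
    merged = {}
    for key, value in rows:
        merged[key] = merge(merged[key], value) if key in merged else value
    return list(merged.items())


rows = [("a", 1), ("b", 2), ("a", 5)]
```

For these rows, the duplicate model keeps all three rows, the unique model keeps `("a", 5)` and `("b", 2)`, and the aggregate model with a sum keeps `("a", 6)` and `("b", 2)`.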

Doris also supports strongly consistent materialized views. Materialized views are automatically selected and updated, which greatly reduces maintenance costs for users.

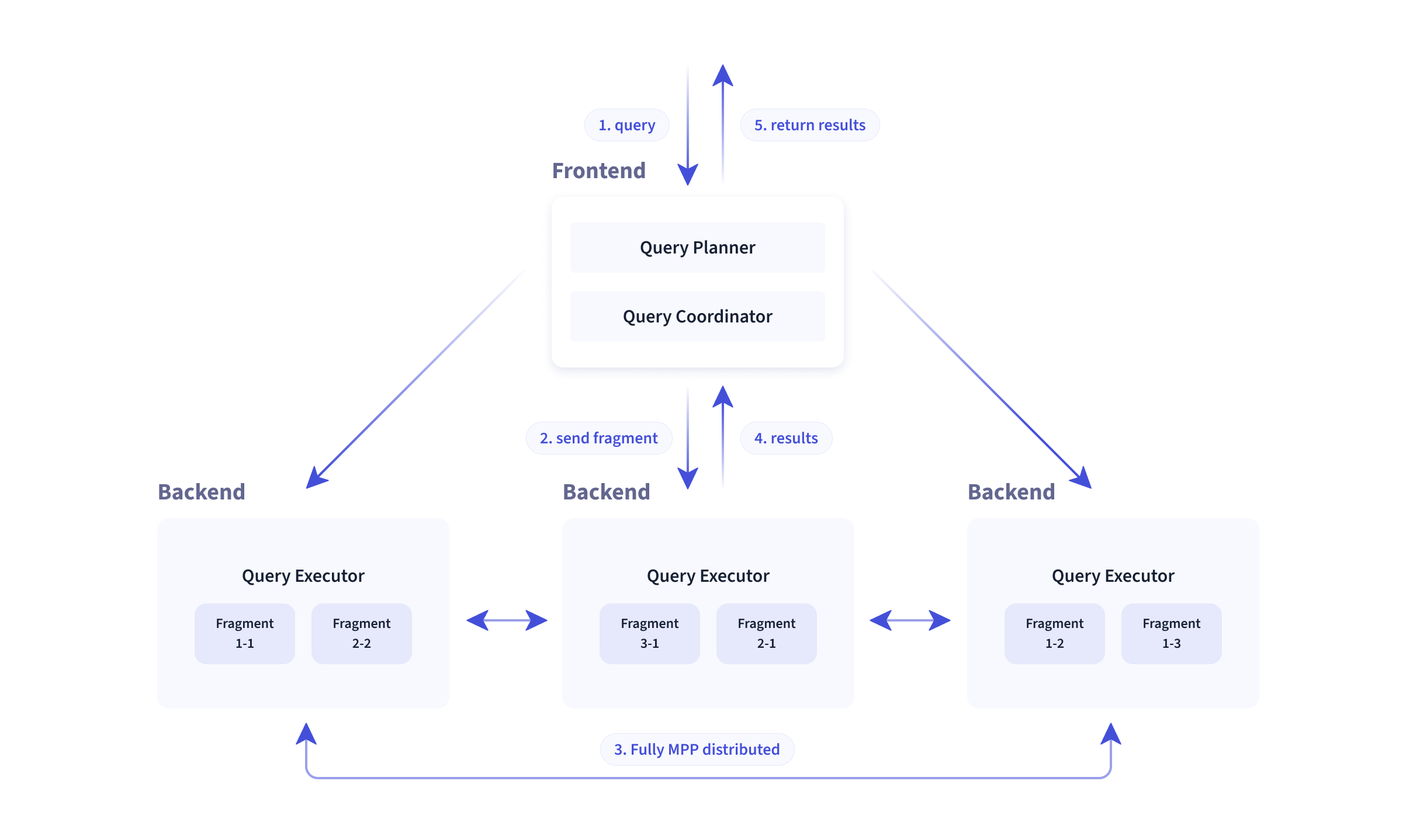

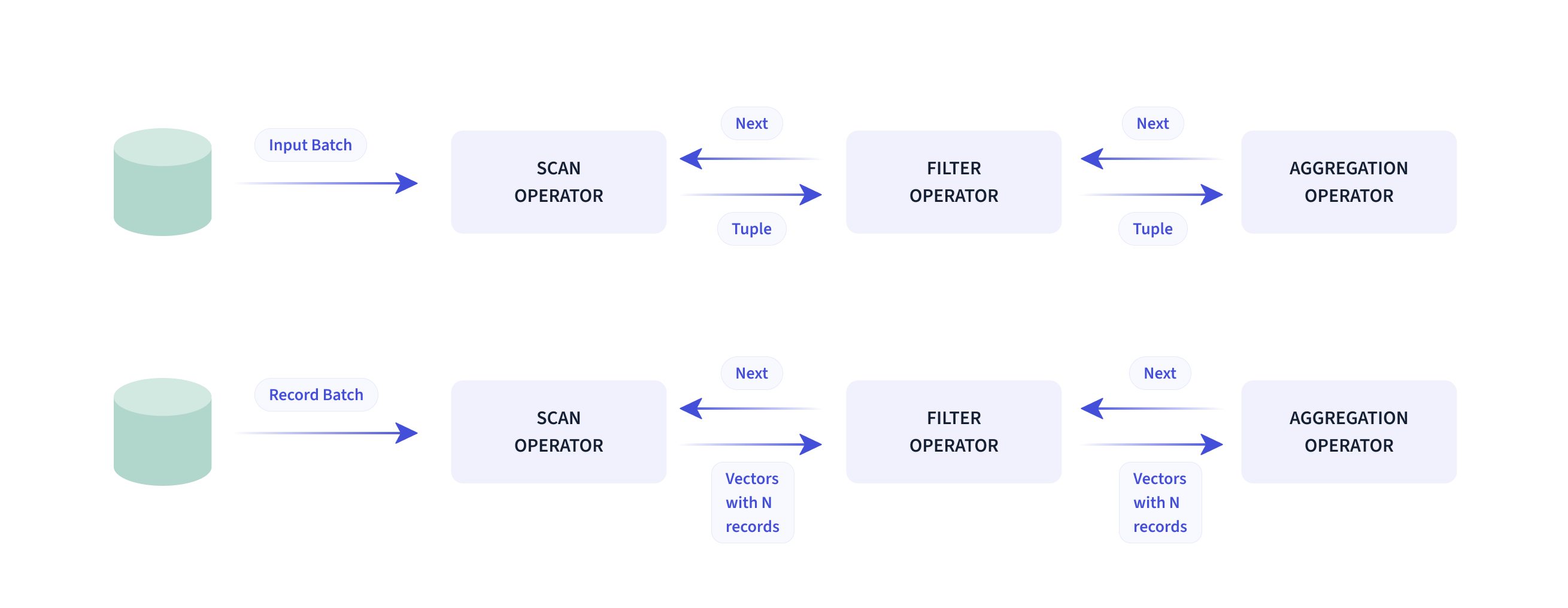

Doris adopts the MPP model in its query engine to realize parallel execution between and within nodes. It also supports distributed shuffle join for multiple large tables so as to handle complex queries.

The Doris query engine is vectorized, with all memory structures laid out in a columnar format. This can largely reduce virtual function calls, improve cache hit rates, and make efficient use of SIMD instructions. Doris delivers a 5–10 times higher performance in wide table aggregation scenarios than non-vectorized engines.

Apache Doris uses Adaptive Query Execution technology to dynamically adjust the execution plan based on runtime statistics. For example, it can generate a runtime filter, push it to the probe side, and automatically push it down to the Scan node at the bottom, which drastically reduces the amount of data on the probe side and increases join performance. The runtime filter in Doris supports In/Min/Max/Bloom filters.
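
The idea of a runtime filter can be sketched in a few lines of Python. This is a simplified illustration, not Doris code: `RuntimeFilter`, `build_runtime_filter`, `scan_probe` and the `in_limit` parameter are hypothetical, and only the In and Min/Max variants are modeled.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class RuntimeFilter:
    lo: int
    hi: int
    in_set: Optional[FrozenSet[int]]  # None when the build side is too large

    def may_match(self, key):
        if self.in_set is not None:
            return key in self.in_set  # exact In filter
        return self.lo <= key <= self.hi  # coarser Min/Max filter


def build_runtime_filter(build_keys, in_limit=1024):
    """Summarize the join keys seen on the build side (assumed non-empty)."""
    keys = frozenset(build_keys)
    if not keys:
        raise ValueError("build side is empty")
    in_set = keys if len(keys) <= in_limit else None
    return RuntimeFilter(min(keys), max(keys), in_set)


def scan_probe(probe_keys, runtime_filter):
    """Apply the filter at scan time, before rows reach the join."""
    return [k for k in probe_keys if runtime_filter.may_match(k)]
```

Rows that cannot find a join partner are dropped at the scan. The Min/Max variant may let some non-matching rows through, which is safe because the join itself still checks every row.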

In terms of optimizers, Doris uses a combination of CBO and RBO. RBO supports constant folding, subquery rewriting, and predicate pushdown, while CBO supports Join Reorder. The Doris CBO is under continuous optimization for more accurate statistical information collection and derivation, and more accurate cost model prediction.

Technical Overview: 🔗Introduction to Apache Doris

🎯 Easy to Use: Two processes, no other dependencies; online cluster scaling, automatic replica recovery; compatible with MySQL protocol, and using standard SQL.

🚀 High Performance: Extremely fast performance for low-latency and high-throughput queries with columnar storage engine, modern MPP architecture, vectorized query engine, pre-aggregated materialized view and data index.

🖥️ Single Unified: A single system can support real-time data serving, interactive data analysis and offline data processing scenarios.

⚛️ Federated Querying: Supports federated querying of data lakes such as Hive, Iceberg, Hudi, and databases such as MySQL and Elasticsearch.

⏩ Various Data Import Methods: Supports batch import from HDFS/S3 and stream import from MySQL Binlog/Kafka; supports micro-batch writing through HTTP interface and real-time writing using Insert in JDBC.

🚙 Rich Ecology: Spark uses Spark-Doris-Connector to read and write Doris; Flink-Doris-Connector enables Flink CDC to implement exactly-once data writing to Doris; DBT Doris Adapter is provided to transform data in Doris with DBT.

Apache Doris graduated from the Apache Incubator and became a Top-Level Project in June 2022.

Currently, the Apache Doris community has gathered more than 400 contributors from nearly 200 companies in different industries, and the number of active contributors is close to 100 per month.

We deeply appreciate 🔗community contributors for their contribution to Apache Doris.

Apache Doris now has a wide user base in China and around the world, and as of today, Apache Doris is used in production environments in thousands of companies worldwide. More than 80% of the top 50 Internet companies in China in terms of market capitalization or valuation have been using Apache Doris for a long time, including Baidu, Meituan, Xiaomi, Jingdong, Bytedance, Tencent, NetEase, Kwai, Sina, 360, Mihoyo, and Ke Holdings. It is also widely used in some traditional industries such as finance, energy, manufacturing, and telecommunications.

The users of Apache Doris: 🔗Users

Add your company logo at Apache Doris Website: 🔗Add Your Company

All Documentation 🔗Docs

All release and binary version 🔗Download

See how to compile 🔗Compilation

See how to install and deploy 🔗Installation and deployment

Doris provides support for Spark/Flink to read data stored in Doris through Connector, and also to write data to Doris through Connector.

The mailing list is the most recognized form of communication in the Apache community. See how to 🔗Subscribe Mailing Lists

If you have any questions, feel free to file a 🔗GitHub Issue or post them in 🔗GitHub Discussion, and fix problems by submitting a 🔗Pull Request

We welcome your suggestions, comments (including criticisms), and contributions. See 🔗How to Contribute and 🔗Code Submission Guide

🔗Doris Improvement Proposal (DSIP) can be thought of as a collection of design documents for all major feature updates or improvements.

🔗 Backend C++ Coding Specification should be strictly followed, which will help us achieve better code quality.

Contact us through the following mailing list.

| Name | Scope | | | |
|---|---|---|---|---|
| dev@doris.apache.org | Development-related discussions | Subscribe | Unsubscribe | Archives |

Note: Some licenses of the third-party dependencies are not compatible with the Apache 2.0 License, so you need to disable some Doris features to comply with the Apache 2.0 License. For details, refer to thirdparty/LICENSE.txt